View table: PEPrerequisites

Table structure:

- productshort - String

- Role - String

- DisplayName - String

- TocName - String

- Dimension - String

- Context - Wikitext

- Product - String

- Manual - String

- UseCase - String

- PEPageType - String

- LimitationsText - Wikitext

- HelmText - Wikitext

- ThirdPartyText - Wikitext

- StorageText - Wikitext

- NetworkText - Wikitext

- BrowserText - Wikitext

- DependenciesText - Wikitext

- GDPRText - Wikitext

- IncludedServiceId - String

This table has 55 rows altogether.

| Page | productshort | Role | DisplayName | TocName | Dimension | Context | Product | Manual | UseCase | PEPageType | LimitationsText | HelmText | ThirdPartyText | StorageText | NetworkText | BrowserText | DependenciesText | GDPRText | IncludedServiceId |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AUTH/Current/AuthPEGuide/Planning | AUTH | Before you begin | Find out what to do before deploying Genesys Authentication. | Genesys Authentication | AuthPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Genesys Authentication in Genesys Multicloud CX private edition is made up of three containers, one for each of its components:

The service also includes a Helm chart, which you must deploy to install all three containers for Genesys Authentication:

See Helm charts and containers for Authentication, Login, and SSO for the Helm chart version you must download for your release. To download the Helm chart, navigate to the gauth folder in the JFrog repository. See Downloading your Genesys Multicloud CX containers for details. |

Install the prerequisite dependencies listed in the Third-party services table before you deploy Genesys Authentication. | Genesys Authentication uses PostgreSQL to store key/value pairs for the Authentication API and Environment API services. It uses Redis to cache data for the Authentication API service. | Ingress

Genesys Authentication supports both internal and external ingress with two ingress objects that are configured with the ingress and internal_ingress settings in the values.yaml file. See Configure Genesys Authentication for details about overriding Helm chart values.

These ingress objects support Transport Layer Security (TLS) version 1.2. TLS is enabled by default, and you can configure it by overriding the ingress.tls and internal_ingress.tls settings in values.yaml.

Cookies

Genesys Authentication components use cookies to identify HTTP/HTTPS user sessions. |
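The ingress.tls and internal_ingress.tls settings named above are ordinary Helm values overrides. As a minimal sketch, assuming each setting takes a Kubernetes Ingress-style list of secretName/hosts entries (the same shape as the GWS ingress example elsewhere in this table; check the chart's own values.yaml), the following Python builds such an override. The host names, secret names, and the tls_override helper are hypothetical.

```python
import json


def tls_override(external_host, external_secret, internal_host, internal_secret):
    """Return a Helm values override that sets TLS on both Genesys
    Authentication ingress objects.

    Assumption: each tls setting is a Kubernetes Ingress-style list of
    {"secretName": ..., "hosts": [...]} entries, where secretName names an
    existing Kubernetes TLS secret.
    """
    return {
        "ingress": {
            "tls": [{"secretName": external_secret, "hosts": [external_host]}],
        },
        "internal_ingress": {
            "tls": [{"secretName": internal_secret, "hosts": [internal_host]}],
        },
    }


if __name__ == "__main__":
    # Hypothetical hosts and secrets; replace them with your own.
    values = tls_override("gauth.example.com", "gauth-tls-ext",
                          "gauth-int.example.com", "gauth-tls-int")
    # JSON output is also valid YAML, so it can be saved to a file and
    # passed to helm with -f like any other values override.
    print(json.dumps(values, indent=2))
```

Generating the override from one function keeps the external and internal ingress entries symmetric; in practice you would more likely write the same structure directly in YAML.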

The Authentication UI supports the web browsers listed in the Browsers table. | Genesys Authentication must be deployed before other Genesys Multicloud CX private edition services. Before you can finish provisioning Genesys Authentication, you must deploy Web Services and Applications and the Tenant Service. For a look at the high-level deployment order, see Order of services deployment. | |

| ContentAdmin/Internal/Small/Test5 | ContentAdmin | Test Prereq page for PE | Intro statement summarizing this page. | Internal Content Administration | Small | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 |

|

Intro to third-party section | Unstructured chunk | Unstructured chunk | Intro text for browser section. | Unstructured chunk | Intro text for GDPR section. | |

| DES/Current/DESPEGuide/Planning | DES | Before you begin | Find out what to do before deploying Designer. | Designer | DESPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Designer currently supports multi-tenancy provided by the tenant Configuration Server. That is, each tenant should have a dedicated Configuration Server, and Designer can be shared across multiple tenants. Before you begin:

After you complete the mandatory procedures above, return to this document to complete the deployment of Designer and DAS as a service in a Kubernetes (K8s) cluster. Important: Designer applications cannot be used to handle default routed calls or voice interactions. Use IRD applications for such scenarios until Designer adds support for handling default routed calls or voice interactions. |

Download the Designer-related Docker containers and Helm charts from the JFrog repository. See Helm charts and containers for Designer for the Helm chart and container versions you must download for your release. For more information on JFrog, refer to the Downloading your Genesys Multicloud CX containers topic in the Setting up Genesys Multicloud CX private edition document. |

The following section lists the third-party prerequisites for Designer.

For information about setting up your Genesys Multicloud CX private edition platform, including Kubernetes, Helm, and other prerequisites, see Software requirements. |

The following storage requirements are mandatory prerequisites:

|

|

Unless otherwise noted, Designer supports the latest versions of the following browsers:

Internet Explorer (all versions) is not supported.

Important: For Google Chrome, Designer supports the n-1 version of the browser, i.e. the version prior to the latest release.

Minimum display resolution

The minimum display resolution supported by Designer is 1920 x 1080.

Third-party cookies

Some features in Designer require the use of third-party cookies. Browsers must allow third-party cookies to be stored for Designer to work properly. |

The following Genesys dependencies are mandatory prerequisites:

For the order in which the Genesys services must be deployed, refer to the Order of services deployment topic in the Setting up Genesys Multicloud CX private edition document. |

Designer supports the European Union's General Data Protection Regulation (GDPR) requirements and provides customers with the ability to export or delete sensitive data using Elasticsearch APIs and other third-party tools. For the purposes of GDPR compliance, Genesys is a data processor on behalf of customers who use Designer. Customers are the data controllers of the personal data that they collect from their end customers, that is, the data subjects. Designer Analytics may store data collected from end users in Elasticsearch. This data can be queried by certain fields that are relevant to GDPR. Once identified, the data can be exported or deleted using Elasticsearch APIs and other third-party tools that customers find suitable for their needs. In particular, the following SDR fields may contain PII or sensitive data that customers can choose to delete or export as required:


Important: It is the customer's responsibility to remove any PII or sensitive data within 21 days, if required by General Data Protection Regulation (GDPR) standards. |
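The GDPR text above says that Designer Analytics data in Elasticsearch can be exported or deleted with Elasticsearch APIs. As a minimal sketch, the following Python builds the two REST calls involved: a `_search` to export matching documents and a `_delete_by_query` to remove them, both driven by the same query. The index name, field name, value, and the gdpr_requests helper are hypothetical; substitute the Designer Analytics index and one of the SDR fields listed for your deployment.

```python
import json


def gdpr_requests(index, field, value):
    """Build Elasticsearch REST calls that first export, then delete,
    documents whose `field` exactly matches `value`.

    `index` and `field` are placeholders: use the Designer Analytics index
    and one of the SDR fields that may hold PII in your deployment.
    """
    # A term query matches exact values; for analyzed text fields you may
    # need a match query or the field's keyword sub-field instead.
    query = {"query": {"term": {field: value}}}
    return [
        ("POST", f"/{index}/_search", query),           # export: review and save the hits first
        ("POST", f"/{index}/_delete_by_query", query),  # delete: remove the same documents
    ]


if __name__ == "__main__":
    # Hypothetical index, field, and value, for illustration only.
    for method, path, body in gdpr_requests("sdr-example", "example_pii_field", "+15555550100"):
        print(method, path)
        print(json.dumps(body))
```

Reusing one query object for both calls helps ensure that what you export is exactly what you delete. Note that `_search` returns only 10 hits by default, so large exports need pagination (for example, with `search_after`) before the delete step.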


| Draft:AUTH/Current/AuthPEGuide/Planning | Draft:AUTH | Before you begin | Find out what to do before deploying Genesys Authentication. | Genesys Authentication | AuthPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Genesys Authentication in Genesys Multicloud CX private edition is made up of three containers, one for each of its components:

The service also includes a Helm chart, which you must deploy to install all three containers for Genesys Authentication:

See Helm charts and containers for Authentication, Login, and SSO for the Helm chart version you must download for your release. To download the Helm chart, navigate to the gauth folder in the JFrog repository. See Downloading your Genesys Multicloud CX containers for details. |

Install the prerequisite dependencies listed in the Third-party services table before you deploy Genesys Authentication. | Genesys Authentication uses PostgreSQL to store key-value pairs for the Authentication API and Environment API services. It uses Redis to cache data for the Authentication API service.

Create a PostgreSQL database and user

Before deploying Genesys Authentication, you must create a PostgreSQL database and a user with superuser permissions. This example creates the "gauth_master" user and "gauth_db" database, but you can use any names that make sense for your organization:

Note: Make a note of the user name, password, and database name; you need them to configure the postgres settings in the values.yaml file. |
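As a minimal sketch of this step, assuming the example names gauth_master and gauth_db from the text above and a placeholder password, the following Python renders the two SQL statements needed: one to create a user with superuser permissions and one to create a database owned by that user. The helper functions are hypothetical; they only quote identifiers and literals the way PostgreSQL expects.

```python
def quote_ident(name):
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value):
    """Quote a PostgreSQL string literal, doubling any embedded single quotes.

    Assumes standard_conforming_strings is on (the default since
    PostgreSQL 9.1), so backslashes need no escaping.
    """
    return "'" + value.replace("'", "''") + "'"


def gauth_db_setup_sql(user, password, database):
    """Return the statements that create a superuser and a database it owns."""
    return [
        f"CREATE USER {quote_ident(user)} WITH SUPERUSER PASSWORD {quote_literal(password)};",
        f"CREATE DATABASE {quote_ident(database)} OWNER {quote_ident(user)};",
    ]


if __name__ == "__main__":
    # "changeme" is a placeholder; use a strong password.
    for statement in gauth_db_setup_sql("gauth_master", "changeme", "gauth_db"):
        print(statement)
    # CREATE USER "gauth_master" WITH SUPERUSER PASSWORD 'changeme';
    # CREATE DATABASE "gauth_db" OWNER "gauth_master";
```

Run the printed statements with psql as an existing superuser. CREATE DATABASE cannot run inside a transaction block, so execute it as a standalone statement.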

Ingress

Genesys Authentication supports both internal and external ingress with two ingress objects that are configured with the ingress and internal_ingress settings in the values.yaml file. See Configure Genesys Authentication for details about overriding Helm chart values.

These ingress objects support Transport Layer Security (TLS) version 1.2. TLS is enabled by default, and you can configure it by overriding the ingress.tls and internal_ingress.tls settings in values.yaml.

Cookies

Genesys Authentication components use cookies to identify HTTP/HTTPS user sessions. |

The Authentication UI supports the web browsers listed in the Browsers table. | Genesys Authentication must be deployed before other Genesys Multicloud CX private edition services. Before you can finish provisioning Genesys Authentication, you must deploy Web Services and Applications and the Tenant Service. For a look at the high-level deployment order, see Order of services deployment. | |

| Draft:ContentAdmin/Boilerplate/PEGuide/Planning | Draft:ContentAdmin | Before you begin | Find out what to do before deploying <service_name>. | Internal Content Administration | PEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | List any limitations or assumptions related to the deployment. |

List the containers and the <service_names> they include. Provide any specific information about the container and its Helm charts. Link to the "suite-level" doc for common information about how to download the Helm charts in JFrog Edge: Downloading your Genesys Multicloud CX containers. See Helm charts and containers for <service_name> for the Helm chart version you must download for your release. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. |

List any third-party services that are required (both common across Genesys Multicloud CX private edition and specific to <service_name>). |

Describe storage requirements, including:

|

Describe network requirements, including:

|

List supported browsers/versions for the UI, if applicable. |

Describe any dependencies <service_name> has on other Genesys services. Include a link to the "suite-level" documentation for the order in which services must be deployed. For example, the Auth and GWS services must be deployed and running before deploying the WWE service. Order of services deployment |

Provide information about GDPR support. Include a link to the "suite-level" documentation. Link to come |


| Draft:ContentAdmin/Internal/Small/Test5 | Draft:ContentAdmin | Test Prereq page for PE | Intro statement summarizing this page. | Internal Content Administration | Small | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 |

|

Intro to third-party section | Unstructured chunk | Unstructured chunk | Intro text for browser section. | Unstructured chunk | Intro text for GDPR section. | |

| Draft:DES/Current/DESPEGuide/Planning | Draft:DES | Before you begin | Find out what to do before deploying Designer. | Designer | DESPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Designer currently supports multi-tenancy provided by the tenant Configuration Server. That is, each tenant should have a dedicated Configuration Server, and Designer can be shared across multiple tenants. Before you begin:

After you complete the mandatory procedures above, return to this document to complete the deployment of Designer and DAS as a service in a Kubernetes (K8s) cluster. Important: Designer applications cannot be used to handle default routed calls or voice interactions. Use IRD applications for such scenarios until Designer adds support for handling default routed calls or voice interactions. |

Download the Designer-related Docker containers and Helm charts from the JFrog repository. See Helm charts and containers for Designer for the Helm chart and container versions you must download for your release. For more information on JFrog, refer to the Downloading your Genesys Multicloud CX containers topic in the Setting up Genesys Multicloud CX private edition document. |

The following section lists the third-party prerequisites for Designer.

For information about setting up your Genesys Multicloud CX private edition platform, including Kubernetes, Helm, and other prerequisites, see Software requirements. |

The following storage requirements are mandatory prerequisites:

|

|

Unless otherwise noted, Designer supports the latest versions of the following browsers:

Internet Explorer (all versions) is not supported.

Important: For Google Chrome, Designer supports the n-1 version of the browser, i.e. the version prior to the latest release.

Minimum display resolution

The minimum display resolution supported by Designer is 1920 x 1080.

Third-party cookies

Some features in Designer require the use of third-party cookies. Browsers must allow third-party cookies to be stored for Designer to work properly. |

The following Genesys dependencies are mandatory prerequisites:

For the order in which the Genesys services must be deployed, refer to the Order of services deployment topic in the Setting up Genesys Multicloud CX private edition document. |

Designer supports the European Union's General Data Protection Regulation (GDPR) requirements and provides customers with the ability to export or delete sensitive data using Elasticsearch APIs and other third-party tools. For the purposes of GDPR compliance, Genesys is a data processor on behalf of customers who use Designer. Customers are the data controllers of the personal data that they collect from their end customers, that is, the data subjects. Designer Analytics may store data collected from end users in Elasticsearch. This data can be queried by certain fields that are relevant to GDPR. Once identified, the data can be exported or deleted using Elasticsearch APIs and other third-party tools that customers find suitable for their needs. In particular, the following SDR fields may contain PII or sensitive data that customers can choose to delete or export as required:


Important: It is the customer's responsibility to remove any PII or sensitive data within 21 days, if required by General Data Protection Regulation (GDPR) standards. |


| Draft:GAWFM/Current/GAWFMPEGuide/Planning | Draft:GAWFM | Before you begin | Find out what to do before deploying Gplus Adapter for WFM. | Gplus Adapter for WFM | GAWFMPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Persistent storage access to recovery logs. | For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers.

Containers

The GPlus Adapter for WFM has the following containers:

Helm charts:

|

List any third-party services that are required (both common across Genesys Multicloud CX private edition and specific to <service_name>). |

Resource recommendations

Storage sizing calculator

The adapter requires storage of recovery logs for 1 week. The recovery logging for the adapter stores the full data for every event it receives from Configuration Server, Interaction Server, and TServer, so customers need to provide enough storage to handle 7 days of TServer and Interaction Server event data. In addition, the adapter downloads a fresh copy of all CME data daily from Configuration Server. A rough approximation of the storage required is given by the following formula:

Storage = 7 days × (EventSize × #Events + CMESize) × Compression × Padding

where:

- Storage: The amount of storage required by the adapter.
- EventSize: The average size of TServer/Interaction Server events in bytes. This depends on the business rules of the contact center and how much user data is attached to events. A typical value is in the range of 1-5KB.
- #Events: The average number of TServer/Interaction Server events processed in a typical day. This can vary widely depending on the complexity and size of the contact center as well as call volume.
- CMESize: The aggregate size of all CME data being monitored by the adapter. The amount of memory used by the Configuration Server application can be used as an approximation.
- Compression: A multiplier representing the compression ratio of the gzip log files, typically 0.05-0.10.
- Padding: A multiplier that provides extra space for periods of high activity or unexpected restarts. A value of 2 is recommended.

For example, for a contact center with an average EventSize of 3KB, 5 million events per day, and a CMESize of 1GB:

Storage = 7 × (3KB × 5,000,000 + 1GB) × 0.10 × 2 ≈ 21GB per week

Other storage requirements

The speed and throughput requirements for recovery log storage depend on the amount of logging calculated in the previous section. If the adapter writes 21GB per week, it writes 3GB per day. If most of the logging takes place during the 16 busiest hours of the day, the adapter needs to write at a rate of:

Rate = 3GB ÷ 16hr ≈ 56 KB/s

This kind of throughput can be handled by most disk storage providers. |
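The storage and write-rate formulas in the sizing section can be checked with a short calculator. This is a minimal sketch assuming binary units (1KB = 1,024 bytes and 1GB = 1,024³ bytes), which is what reproduces the roughly 21GB weekly figure in the worked example; the function names are hypothetical. With these inputs, the write rate works out to tens of kilobytes per second, because 3GB spread over 57,600 seconds is about 56,000 bytes per second.

```python
KIB = 1024          # assumption: 1KB = 1,024 bytes
GIB = 1024 ** 3     # assumption: 1GB = 1,024^3 bytes
SECONDS_PER_HOUR = 3600


def weekly_storage_bytes(event_size_bytes, events_per_day, cme_size_bytes,
                         compression=0.10, padding=2.0, days=7):
    """Storage = days x (EventSize x #Events + CMESize) x Compression x Padding."""
    daily_raw = event_size_bytes * events_per_day + cme_size_bytes
    return days * daily_raw * compression * padding


def write_rate_bytes_per_sec(weekly_bytes, busy_hours=16, days=7):
    """Average write rate if each day's logging happens within the busiest hours."""
    daily_bytes = weekly_bytes / days
    return daily_bytes / (busy_hours * SECONDS_PER_HOUR)


if __name__ == "__main__":
    # Inputs from the worked example: 3KB events, 5 million events/day, 1GB of CME data.
    storage = weekly_storage_bytes(3 * KIB, 5_000_000, 1 * GIB)
    rate = write_rate_bytes_per_sec(storage)
    print(f"Storage: {storage / GIB:.1f} GiB per week")  # Storage: 21.4 GiB per week
    print(f"Write rate: {rate / KIB:.1f} KiB/s")         # Write rate: 55.7 KiB/s
```

Compression and Padding default to the values used in the example (0.10 and 2). Lower the compression multiplier toward 0.05 if your logs compress better.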

CNI for Direct Pod Routing

The GPlus Adapter does not have any special requirements for CNI networking.

Ingress

The GPlus Adapter does not expose any HTTP/HTTPS endpoints for ingress.

Subnet sizing

The GPlus Adapter does not support deployments with multiple replicas, so it requires only one IP address per instance.

External connections

The GPlus WFM Adapter requires connections to Genesys Configuration Server, TServer, and Interaction Server to monitor state information for interactions, agents, and other configuration objects. The adapter may also have optional connections to WFM FTP servers for transferring reports. For RTA streaming, the adapter acts either as a server that the WFM RTA clients connect to (for the vendors Teleopti, Nice-IEX, and Verint) or as a client (for Aspect). Prometheus metrics are exposed on the adapter's /metrics HTTP endpoint, although this endpoint is not exposed to the internet.

|

N/A | Describe any dependencies <service_name> has on other Genesys services. Include a link to the "suite-level" documentation for the order in which services must be deployed. For example, the Auth and GWS services must be deployed and running before deploying the WWE service. Order of services deployment

|

Provide information about GDPR support. Include a link to the "suite-level" documentation. Link to come |


| Draft:GVP/Current/GVPPEGuide/Planning | Draft:GVP | Before you begin | Find out what to do before deploying Genesys Voice Platform. | Genesys Voice Platform | GVPPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 |

|

Download the GVP-related Docker containers and Helm charts from the JFrog repository. For Docker container and Helm chart versions, refer to Helm charts and containers for Genesys Voice Platform. For more information on JFrog, refer to the Downloading your Genesys Multicloud CX containers topic in the Setting up Genesys Multicloud CX private edition document. |

Media Control Platform

Storage requirement for production (min)

Storage requirements for Sandbox

Resource Manager

Storage requirement for production (min)

Storage requirements for Sandbox

Service Discovery

Not applicable

Reporting Server

Storage requirement for production (min)

Storage requirements for Sandbox

GVP Configuration Server

Not applicable |

Media Control Platform

- Ingress: Not applicable
- HA/DR: MCP is deployed with autoscaling in all regions. For more details, see the section Auto-scaling. GVP Resource Manager (RM) routes calls to active MCPs; if an MCP instance terminates, calls are routed to a different MCP instance.
- Cross-region bandwidth: MCPs are not expected to make cross-region requests in normal operation.
- External connections: Not applicable
- Pod Security Policy: All containers run as the genesys user (500), a non-root user.
- SMTP settings: Not applicable
- TLS/SSL certificate configuration: Not applicable

Resource Manager

- Ingress: Not applicable
- HA/DR: Resource Manager is deployed as an active-active pair.
- Cross-region bandwidth: Resource Manager is deployed per region. There is no cross-region deployment.
- External connections: Not applicable
- Pod Security Policy: All containers run as the genesys user (500), a non-root user.
- SMTP settings: Not applicable
- TLS/SSL certificate configuration: Not applicable

Service Discovery

- Ingress: Not applicable
- HA/DR: Service Discovery is a singleton service that is restarted if it shuts down unexpectedly or becomes unavailable.
- Cross-region bandwidth: Service Discovery is not expected to make cross-region requests in normal operation.
- External connections: Not applicable
- Pod Security Policy: All containers run as the genesys user (500), a non-root user.
- SMTP settings: Not applicable
- TLS/SSL certificate configuration: Not applicable

Reporting Server

- Ingress: Not applicable
- HA/DR: Reporting Server is deployed as a single-pod service.
- Cross-region bandwidth: Reporting Server is deployed per region. There is no cross-region deployment.
- External connections: Not applicable
- Pod Security Policy: All containers run as the genesys user (500), a non-root user.
- SMTP settings: Not applicable
- TLS/SSL certificate configuration: Not applicable

GVP Configuration Server

- Ingress: Not applicable
- HA/DR: GVP Configuration Server is deployed as a singleton. If it crashes, a new pod is created. If GVP Configuration Server is unavailable, GVP services continue to handle calls; only new configuration changes, such as new MCP pods, are not available.
- Cross-region bandwidth: GVP Configuration Server is not expected to make cross-region requests in normal operation.
- External connections:
- Pod Security Policy: All containers run as the genesys user (500), a non-root user.
- SMTP settings: Not applicable
- TLS/SSL certificate configuration: Not applicable |
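Each GVP Pod Security Policy entry states that all containers run as the genesys user (500) and as a non-root user. As a minimal sketch, assuming that 500 is the numeric user ID, the following Python expresses that policy with the standard Kubernetes securityContext fields runAsUser and runAsNonRoot, and checks a pod spec (given as a plain dict) against it. The helper names and the container name are hypothetical; they are not part of the GVP Helm charts.

```python
import json

GENESYS_UID = 500  # assumption: numeric UID of the "genesys" user


def pod_security_context(uid=GENESYS_UID):
    """Pod-level Kubernetes securityContext: run as a fixed UID and refuse root."""
    return {"runAsUser": uid, "runAsNonRoot": True}


def meets_gvp_policy(pod_spec, uid=GENESYS_UID):
    """Return True if every container runs as `uid` and as non-root.

    Container-level securityContext fields override pod-level ones, so the
    effective settings are the pod context merged with the container context.
    """
    pod_ctx = pod_spec.get("securityContext", {})
    for container in pod_spec.get("containers", []):
        effective = {**pod_ctx, **container.get("securityContext", {})}
        if effective.get("runAsUser") != uid or effective.get("runAsNonRoot") is not True:
            return False
    return True


if __name__ == "__main__":
    spec = {"securityContext": pod_security_context(),
            "containers": [{"name": "mcp"}]}  # hypothetical container name
    print(json.dumps(spec["securityContext"]))
    print(meets_gvp_policy(spec))
```

Setting the context at pod level covers every container at once. The check also catches a container that overrides it, for example with runAsUser set to 0.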

N/A | Media Control Platform

Resource Manager

Service Discovery

Reporting Server

GVP Configuration Server

N/A |

This section describes product-specific aspects of Genesys Voice Platform support for the European Union's General Data Protection Regulation (GDPR) in premise deployments. For general information about Genesys support for GDPR compliance, see General Data Protection Regulation.

Warning: The information contained here is not considered final. This document will be updated with additional technical information.

Data Retention Policies

GVP has configurable retention policies that allow data to expire. GVP can aggregate data for items such as peak and call volume reporting; the aggregated data is anonymous. Detailed call detail records include DNIS and ANI data. Voice Application Reporter (VAR) data could contain personal data, which would have to be deleted on request. Log files may contain sensitive information (possibly masked), so they must be rotated and expired frequently to meet GDPR requirements.

Configuration Settings

Media Server

Media Server can store data and send alarms that may contain sensitive information, but by default this data is typically cleansed automatically (by the log rollover process) within 40 days. The location of these files can be configured in the GVP Media Control Platform Configuration [default paths are shown below]:


Note: Changing default values is not officially supported in the initial Private Edition release for any of the MCP options above. Additional sinks are also available where alarms and potentially sensitive information can be captured. See Table 6 and Appendix H of the Genesys Voice Platform User’s Guide for more information. The metrics can be configured in the GVP Media Control Platform configuration:

GVP Resource Manager

Resource Manager can store data and send alarms that may contain sensitive information, but by default this data is typically cleansed automatically (by the log rollover process) within 40 days. Customers are advised to understand GVP logging (for all components) and the sinks (destinations) for information that the platform can capture. See Table 6 and Appendix H of the Genesys Voice Platform User’s Guide for more information.

GVP Reporting Server

Reporting Server can store and send alarms that may contain sensitive information, but by default it processes, and does not store, consumer PII. Customers are advised to understand GVP logging (for all components) and the sinks (destinations) for information that the platform can capture. See Table 6 and Appendix H of the Genesys Voice Platform User’s Guide for more information.

By default, Reporting Server is designed to collect statistics and other user information. The retention period for this information is configurable, with most data stored for less than 40 days. Customers should work with their application designers to understand what information the application captures and whether that data could be considered sensitive. Customers can change these settings as needed by overriding them in the Helm chart values.yaml file.

Data Retention Specific Settings

Identifying Sensitive Information for Processing

The following example shows how to find this information in the Reporting Server database, for a case where ‘Session_ID’ is considered sensitive:

An example of a SQL query that might be used to determine whether specific information is sensitive: |


| Draft:GWS/Current/GWSPEGuide/Planning | Draft:GWS | Before you begin | Find out what to do before deploying Genesys Web Services and Applications. | Genesys Web Services and Applications | GWSPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Genesys Web Services and Applications (GWS) in Genesys Multicloud CX private edition is made up of multiple containers and Helm charts. The pages in this "Configure and deploy" chapter walk you through how to deploy the following Helm charts:

GWS also includes a Helm chart for Nginx (wwe-nginx) for Workspace Web Edition; see the Workspace Web Edition Private Edition Guide for details about how to deploy this chart. See Helm charts and containers for Genesys Web Services and Applications for the Helm chart versions you must download for your release. For information about downloading Helm charts from JFrog Edge, see Downloading your Genesys Multicloud CX containers. |

Install the prerequisite dependencies listed in the Third-party services table before you deploy Genesys Web Services and Applications. See Software requirements for a full list of prerequisites and third-party services required by all Genesys Multicloud CX private edition services. | GWS uses PostgreSQL to store tenant information, Redis to cache session data, and Elasticsearch to store monitored statistics for fast access. If you set up any of these services as dedicated services for GWS, they have the following minimum requirements:

PostgreSQL

Redis

Elasticsearch

|

GWS ingress objects support Transport Layer Security (TLS) version 1.2 for a secure connection between Kubernetes cluster ingress and GWS ingress. TLS is disabled by default, but you can configure it for internal and external ingress by overriding the entryPoints.internal.ingress.tls and entryPoints.external.ingress.tls sections of the GWS ingress Helm chart. For example:

    entryPoints:
      external:
        ingress:
          tls:
            - secretName: gws-secret-ext
              hosts:
                - gws.genesys.com

In the example above:

Cookies

GWS components use cookies for the following purposes:

|

JM: Are these browsers supported for Agent Setup? Browser support for WWE is documented in the WWE guide for private edition: Before you begin. Also, can we align with the versions that are supposed to be supported for cloud/private edition in all products? Those versions are:

|

Genesys Web Services and Applications must be deployed after Genesys Authentication. For a look at the high-level deployment order, see Order of services deployment in the Setting up Genesys Multicloud CX Private Edition guide. |

|

| Draft:IXN/Current/IXNPEGuide/Planning | Draft:IXN | Before you begin | Find out what to do before deploying Interaction Server. | Interaction Server | IXNPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | The current version of IXN Server:

|

Available IXN containers can be found under the following names in the registry:

Available Helm charts can be found under the name ixn-<version>. For information about downloading Genesys containers and Helm charts from JFrog Edge, see the suite-level documentation: Downloading your Genesys Multicloud CX containers. |

The following are the minimum versions supported by IXN Server:

|

If IXN Server is configured to log to files, it requires a storage volume mounted to the IXN Server container. The storage must be able to write up to 100 MB/min, and up to 10 MB/s for 2 minutes at peak. The storage size depends on the logging configuration. For storage characteristics of the IXN Server database, refer to the PostgreSQL documentation. Contact your account representative if you need assistance with sizing calculations. |
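The IXN Server file-logging figures above (100 MB/min, and 10 MB/s for 2 minutes at peak) translate into a few derived numbers that help when choosing a volume class and size. This is a minimal sketch, assuming decimal megabytes; the daily figure is simply the volume written if logging ran at 100 MB/min all day, not a prediction, since actual volume depends on the logging configuration and log rotation. The function name is hypothetical.

```python
MB = 1_000_000  # assumption: decimal megabytes


def ixn_log_write_budget(rate_mb_per_min=100, peak_mb_per_s=10, peak_seconds=120):
    """Derive sizing figures from the IXN Server file-logging throughput requirement.

    Returns a tuple of:
      - average bytes per second at the per-minute rate,
      - bytes written during one peak burst,
      - bytes per day if logging ran at the per-minute rate around the clock.
    """
    avg_bytes_per_s = rate_mb_per_min * MB / 60
    burst_bytes = peak_mb_per_s * MB * peak_seconds
    daily_at_rate = rate_mb_per_min * MB * 60 * 24
    return avg_bytes_per_s, burst_bytes, daily_at_rate


if __name__ == "__main__":
    avg, burst, daily = ixn_log_write_budget()
    print(f"{avg / MB:.2f} MB/s average, {burst / MB:.0f} MB per peak burst, "
          f"{daily / MB / 1000:.0f} GB/day at 100 MB/min")
    # 1.67 MB/s average, 1200 MB per peak burst, 144 GB/day at 100 MB/min
```

The peak figure (10 MB/s) is six times the average implied by 100 MB/min. The volume therefore needs burst headroom, not just enough capacity for the average rate.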

Not applicable | Not applicable | Tenant service. For more information, refer to the Tenant Service Private Edition Guide.

|

Provide information about GDPR support. Include a link to the "suite-level" documentation. Link to come |

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-AD/Current/WWEPEGuide/Planning | Draft:PEC-AD | Before you begin | Find out what to do before deploying Workspace Web Edition. | Agent Desktop | WWEPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | There are no limitations or assumptions related to the deployment. | The Workspace Web Edition Helm charts are included in the Genesys Web Services (GWS) Helm charts. You can access them when you download the GWS Helm charts from JFrog using your credentials. See Helm charts and containers for Genesys Web Services and Applications for the Helm chart version you must download for your release. For information about downloading Genesys Helm charts from JFrog Edge, refer to this article: Downloading your Genesys Multicloud CX containers. |

There are no specific storage requirements for Workspace Web Edition. | Network requirements include:

|

You can use any of the supported browsers to run Agent Workspace on the client side. | Mandatory Dependencies

The following services must be deployed and running before deploying the WWE service. For more information, refer to Order of services deployment.

Optional Dependencies

Depending on the deployed architecture, the following services must be deployed and running before deploying the WWE service:

Miscellaneous desktop-side optional dependencies

The following software must or might be deployed on agent workstations to allow agents to leverage the WWE service:

|

Workspace Web Edition does not have specific GDPR support. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-CAB/Current/CABPEGuide/Planning | Draft:PEC-CAB | Before you begin | Find out what to do before deploying Genesys Callback. | Callback | CABPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Genesys Engagement Service (GES) is the only service that runs in the GES Docker container. The Helm charts included with the GES release provision GES and any Kubernetes infrastructure necessary for GES to run, such as load balancing, autoscaling, ingress control, and monitoring integration. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. See Helm charts and containers for Callback for the Helm chart version you must download for your release. |

For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. | The primary contributor to the size of a callback record is the amount of user data that is attached to a callback. Since this is an open-ended field, and the composition will differ from customer to customer, it is difficult to state the precise storage requirements of GES for a given deployment. To assist you, the following table lists the results of testing done in an internal Genesys development environment and shows the impact that user data has when it comes to the storage requirements for both Redis and Postgres.

Note: This is 100k of randomized string data in a single field of the user data.

Hardware requirements

Genesys strongly recommends the following hardware requirements to run GES with a single tenant. The requirements are based on running GES in a multi-tenant environment, scaled down accordingly. Use these guidelines, together with the callback storage information listed above, to gauge the precise requirements needed to ensure that GES runs smoothly in your deployment.

GES (based on t3.medium)

Redis (based on cache.r5.large)

Redis is essential to GES service availability. Deploy two Redis caches in a cluster; the second cache acts as a replica of the first. For more information, see Architecture. Callback data is stored in Redis memory.

PostgreSQL (based on db.t3.medium)

Sizing calculator

The information in this section is provided to help you determine what hardware you need to run GES and third-party components. The information and formulas are based on an analysis of database disk storage and Redis memory usage requirements for callback data. The numbers provided here include only storage and memory usage for callbacks; additional storage and memory are required for configuration data and basic operations.

Requirements per callback

Each callback record (excluding user data) requires approximately 6.5 to 7.0 kB of database disk storage, plus additional disk storage for the user data: each kB of user data consumes approximately 3.0 kB of disk storage. Each callback record (excluding user data) also requires approximately 4.5 to 5.5 kB of Redis memory, plus an additional 1.25 kB for each kB of user data. Use the following formulas to estimate disk storage and Redis memory requirements:
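Reconstructed from the per-callback figures in the preceding paragraph, the estimates can be written as follows. The symbols are introduced here for illustration only: N is the number of callback records held in storage, U is the average user data per callback in kB, and d_0 and r_0 are the per-record base costs (excluding user data).

$$
\begin{aligned}
S_{\text{disk}} &\approx N\,(d_0 + 3.0\,U)\ \text{kB}, & d_0 &\in [6.5,\ 7.0] \\
S_{\text{Redis}} &\approx N\,(r_0 + 1.25\,U)\ \text{kB}, & r_0 &\in [4.5,\ 5.5]
\end{aligned}
$$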

For example, if a tenant has an average of 100,000 callbacks per day with 1 kB of user data in each callback:
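The arithmetic for this example can be sketched in Python. This is a minimal sketch that assumes the callbacks are Immediate, so each record is held for the 14-day retention period described in the note on record retention; the helper names `stored_callbacks` and `estimate_kb` are hypothetical, and the constants are the approximate per-callback values quoted above.

```python
# Approximate per-callback figures from the sizing calculator text (all values in kB).
DISK_BASE_KB = (6.5, 7.0)    # disk per callback record, excluding user data (low, high)
DISK_PER_USER_KB = 3.0       # disk consumed per kB of user data
REDIS_BASE_KB = (4.5, 5.5)   # Redis memory per callback record, excluding user data (low, high)
REDIS_PER_USER_KB = 1.25     # Redis memory consumed per kB of user data


def stored_callbacks(callbacks_per_day: int, retention_days: int) -> int:
    """Callback records held at any one time once the retention window is full."""
    return callbacks_per_day * retention_days


def estimate_kb(records: int, user_data_kb: float) -> dict:
    """Return (low, high) disk and Redis estimates, in kB, for `records` callbacks."""
    disk = tuple(records * (base + DISK_PER_USER_KB * user_data_kb) for base in DISK_BASE_KB)
    redis = tuple(records * (base + REDIS_PER_USER_KB * user_data_kb) for base in REDIS_BASE_KB)
    return {"disk_kb": disk, "redis_kb": redis}


if __name__ == "__main__":
    # Assumption: Immediate callbacks, each record retained for 14 days.
    records = stored_callbacks(100_000, 14)
    print(records)  # 1400000
    print(estimate_kb(records, user_data_kb=1.0))
    # {'disk_kb': (13300000.0, 14000000.0), 'redis_kb': (8050000.0, 9450000.0)}
```

Under these assumptions that is roughly 13.3 to 14.0 GB of disk and 8.05 to 9.45 GB of Redis memory (taking 1 GB = 10^6 kB), before the additional storage for configuration data and basic operations. For scheduled callbacks booked 14 days ahead, use a 28-day window instead, as in the note on record retention: `stored_callbacks(10_000, 28)` returns 280000.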

NOTE: Each callback record is stored for 14 days. If you average about 10k scheduled callbacks every day, and the scheduled callbacks are all booked as far out as possible (that is, 14 days in the future), the number of callbacks to use in storage and memory calculations is 28 days × 10k callbacks per day = 280k callbacks.

Redis operations

Redis operations primarily update the connectivity status to other services, such as Tenant Service (specifically ORS and URS) and Genesys Web Services and Applications (GWS). When GES is idle (no past callbacks, no active callback sessions, and no scheduled callbacks), GES generates about 50 Redis operations per second per GES node per tenant.

Each Immediate callback generates approximately 110 Redis operations from its creation to the end of its ORS session. For Scheduled callbacks, assuming each callback generates 110 Redis operations while the ORS session is active (based on the Immediate callback numbers), there is 1 additional Redis operation for each minute that the callback is scheduled. For example, a callback scheduled for 1 hour from the time it was created generates approximately 60 + 110 = 170 Redis operations, and a callback scheduled for 1 day from the time it was created generates approximately 60 × 24 + 110 = 1550 Redis operations. This gives the following formula for the number of Redis operations per callback:

Redis operations per callback ≈ 110 + M, where M is the number of minutes that the callback is scheduled.

Because the longevity of a callback ORS session depends on the estimated wait time (EWT), the total number of Redis operations performed by GES per minute varies, based on both the number of callbacks in the system and the EWT of the callbacks. Use the following formula to estimate the number of Redis operations performed per minute:

Where:

For example, let's say we have the following scenario:

Using the preceding formulas, estimate the Redis operations per minute:

Redis keys

Each callback creates three additional Redis keys. Given the preceding calculations for Redis memory requirements for each callback, the formula for the average key size is: |

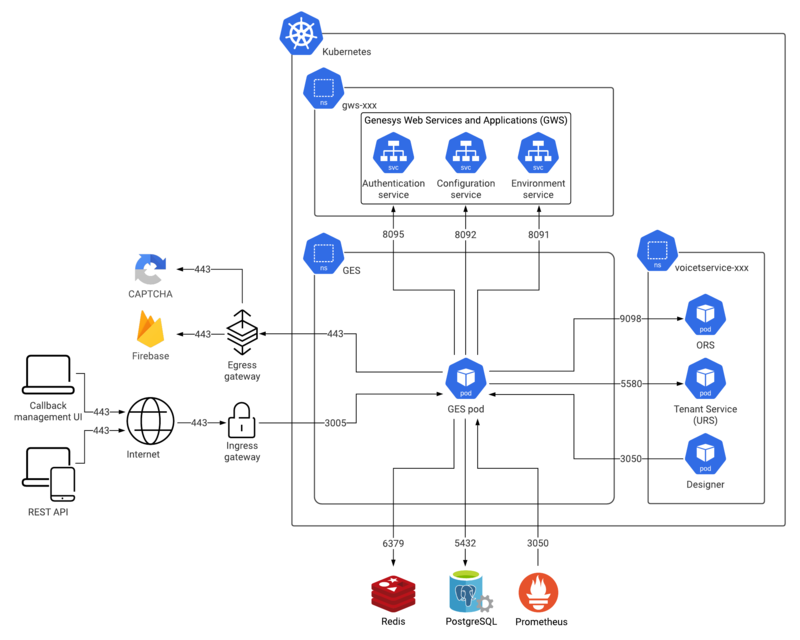

Incoming connections to the GES deployment are handled either through the UI or through the external API. For information about how to use the external API, see the Genesys Multicloud CX Developer Center.

Connection topology

The diagram below shows the incoming and outgoing connections between GES and other Genesys and third-party software, such as Redis, PostgreSQL, and Prometheus. In the diagram, Prometheus is shown as part of the broader Kubernetes deployment, although this is not a requirement; what matters is that Prometheus can reach the internal load balancer for GES. Also note that, depending on the use case, GES might communicate with Firebase and CAPTCHA over the open internet. This is not part of the default callback offering, but if you use Push Notifications with your callback service, GES must be able to connect to Firebase over TLS. Push Notifications and CAPTCHA are optional and are not necessary for basic callback scenarios.

Web application firewall rules

Information in the following sections is based on the NGINX configuration used by GES in an Azure cloud environment.

Cookies and session requirements

When interacting with the UI, GES and GWS ensure that the user's browser has the appropriate session cookies. By default, UI sessions time out after 20 minutes of inactivity. The external Engagement API does not require session management or cookies, but the GES API key must be provided in the X-API-Key request header.

For ingress to GES, allow requests to only the following paths to be forwarded to GES:

- /ges/

In addition to allowing connections to only these paths, ensure that the ccid or ContactCenterID headers on any incoming requests are empty. This enhances the security of the GES deployment; it prevents the use of external APIs by an actor who has only the CCID of the contact center.
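To illustrate these ingress rules, the following Python sketch shows the decision a WAF or ingress policy would make for each request: forward only paths under /ges/, and blank out any ccid or ContactCenterID header before forwarding. The function `filter_request` is hypothetical; in a real deployment this logic lives in the ingress controller or WAF configuration (for example, NGINX), not in application code.

```python
from typing import Dict, Optional

# Path prefix that may be forwarded to GES, per the ingress rules above.
ALLOWED_PREFIXES = ("/ges/",)
# Headers that must be empty on requests forwarded to GES.
# HTTP header names are case-insensitive, so compare them in lowercase.
CLEARED_HEADERS = {"ccid", "contactcenterid"}


def filter_request(path: str, headers: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Return the headers to forward to GES, or None if the request must not be forwarded.

    Hypothetical helper: a sketch of the ingress/WAF policy, not GES or NGINX code.
    """
    if not path.startswith(ALLOWED_PREFIXES):
        return None  # path is outside the allowed list, so do not forward
    forwarded = {}
    for name, value in headers.items():
        # Blank out any ccid / ContactCenterID header instead of passing it through.
        forwarded[name] = "" if name.lower() in CLEARED_HEADERS else value
    return forwarded
```

Blanking the headers, rather than rejecting the request, is one reading of "ensure that the ... headers are empty"; rejecting such requests outright would also satisfy the rule.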
TLS/SSL certificate configuration

There are no special TLS certificate requirements for the GES/Genesys Callback web-based UI.

Subnet requirements

There are no special requirements for sizing or creating an IP subnet for GES beyond the demands of the broader Kubernetes cluster. |

The Genesys Callback user interface is supported in the following browsers. | GES has dependencies on several other Genesys services. You must deploy the services on which GES depends and verify that each is working as expected before you provision and configure GES. If you follow this advice and any issues arise while you provision GES, you can be reasonably assured that the fault lies in how GES is provisioned rather than in a downstream program. GES/Callback requires your environment to contain supported releases of the following Genesys services, which must be deployed before you deploy Callback:

For detailed information about the correct order of services deployment, see Order of services deployment. |

Callback records are stored for 14 days. The 14-day TTL setting starts at the Desired Callback Time. The Callback TTL (seconds) setting in the CALLBACK_SETTINGS data table has no effect on callback record storage duration; 14 days is a fixed value for all callback records. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-DC/Current/DCPEGuide/Planning | Draft:PEC-DC | Before you begin | Find out what to do before deploying Digital Channels. | Digital Channels | DCPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Digital Channels for private edition has the following limitations:

|

Digital Channels in Genesys Multicloud CX private edition includes the following containers:

The service also includes a Helm chart, which you must deploy to install all the containers for Digital Channels:

See Helm charts and containers for Digital Channels for the Helm chart version you must download for your release. To download the Helm chart, navigate to the nexus folder in the JFrog repository. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers.

|

Install the prerequisite dependencies listed in the Third-party services table before you deploy Digital Channels. | Digital Channels uses PostgreSQL and Redis to store all data.

|

For general network requirements, review the information on the suite-level Network settings page. | Digital Channels has dependencies on the following Genesys services:

For detailed information about the correct order of services deployment, see Order of services deployment. |

JM: Does Digital Channels support GDPR? | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-DC/Current/DCPEGuide/PlanningAIConnector | Draft:PEC-DC | Before you begin | Find out what to do before deploying AI Connector. | Digital Channels | DCPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | AI Connector for private edition has the following limitation:

|

AI Connector in Genesys Multicloud CX private edition includes the following container:

The service also includes a Helm chart, which you must deploy to install all the containers for AI Connector:

See Helm charts and containers for Digital Channels for the Helm chart version you must download for your release. To download the Helm chart, navigate to the athena folder in the JFrog repository. For information about how to download the Helm chart, see Downloading your Genesys Multicloud CX containers.

|

Install the prerequisite dependencies listed in the Third-party services table before you deploy AI Connector. | AI Connector uses PostgreSQL and Redis to store all data.

|

For general network requirements, review the information on the suite-level Network settings page. | AI Connector has dependencies on the following Genesys service:

For detailed information about the correct order of services deployment, see Order of services deployment. |

d4d81735-166a-401c-abfb-0957cbbaef56 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-Email/Current/EmailPEGuide/Planning | Draft:PEC-Email | Before you begin | Find out what to do before deploying Email. | EmailPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | The current version of Email supports only the single-region deployment model. | Email in Genesys Multicloud CX private edition includes the following containers:

The service also includes a Helm chart, which you must deploy to install the required containers for Email:

See Helm Chart and Containers for Email for the Helm chart version you must download for your release. To download the Helm chart, navigate to the iwdem folder in the JFrog repository. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. |

All data is stored in IWD, UCS-X, and Digital Channels, which are external to the Email service. | External Connections: IMAP, SMTP, Gmail, GRAPH | Not applicable | The following Genesys services are required:

For the order in which the Genesys services must be deployed, refer to the Order of services deployment topic in the Setting up Genesys Multicloud CX private edition document. |

Content coming soon | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-GPA/Current/GPAPEGuide/Planning | Draft:PEC-GPA | Before you begin | Find out what to do before deploying Gplus Adapter for Salesforce. | Gplus Adapter for Salesforce | GPAPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | There are no limitations or assumptions related to the deployment. | You can access the Gplus Adapter for Salesforce Helm charts when you download them from JFrog using your credentials. See [[Draft:ReleaseNotes/Current/GenesysEngage-cloud/GPAHelm|]] for the Helm chart version you must download for your release. For information about downloading Genesys Helm charts from JFrog Edge, refer to this article: Downloading your Genesys Multicloud CX containers. |

Salesforce Lightning or Salesforce Classic. | There are no specific storage requirements for Gplus Adapter for Salesforce.

|

Network requirements include:

|

You can use any of the supported browsers to run Gplus Adapter for Salesforce and Agent Workspace on the client side. | Mandatory Dependencies

The following services must be deployed and running before deploying the GPA service. For more information, refer to Order of services deployment.

Optional Dependencies

Depending on the deployed architecture, the following services must be deployed and running before deploying the WWE service:

Miscellaneous desktop-side optional dependencies

The following software must or might be deployed on agent workstations to allow agents to leverage the WWE service:

|

Gplus Adapter for Salesforce does not have specific GDPR support. | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-IWD/Current/IWDDMPEGuide/Planning | Draft:PEC-IWD | Before you begin | Find out what to do before deploying IWD Data Mart. | Intelligent Workload Distribution | IWDDMPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | The current version of IWD Data Mart:

IWD Data Mart is a short-lived job, so Prometheus cannot pull its metrics. Therefore, it requires a standalone Pushgateway service for monitoring. |

IWD Data Mart in Genesys Multicloud CX private edition includes the following containers:

The service also includes a Helm chart, which you must deploy to install the required containers for IWD Data Mart:

See Helm Charts and Containers for IWD and IWD Data Mart for the Helm chart version you must download for your release. To download the Helm chart, navigate to the iwddm-cronjob folder in the JFrog repository. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. |

All data is stored in PostgreSQL, which is external to the IWD Data Mart. | Not applicable | Not applicable | Intelligent Workload Distribution (IWD) with a provisioned tenant. For the order in which the Genesys services must be deployed, refer to the Order of services deployment topic in the Setting up Genesys Multicloud CX private edition document. |

Content coming soon | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-IWD/Current/IWDPEGuide/Planning | Draft:PEC-IWD | Before you begin | Find out what to do before deploying IWD. | Intelligent Workload Distribution | IWDPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | The current version of IWD:

|

IWD in Genesys Multicloud CX private edition includes the following containers:

The service also includes a Helm chart, which you must deploy to install the required containers for IWD:

See Helm Charts and Containers for IWD and IWD Data Mart for the Helm chart version you must download for your release. To download the Helm chart, navigate to the iwd folder in the JFrog repository. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. |

All data is stored in PostgreSQL, Elasticsearch, and Digital Channels, which are external to IWD. Sizing of Elasticsearch depends on the load: allow on average 15 KB per work item and 50 KB per email. You can adjust these figures depending on the size of the items processed. |

External Connections: IWD allows customers to configure webhooks. If configured, IWD establishes an HTTP or HTTPS connection to the configured host and port. | Not applicable | The following Genesys services are required:

For the order in which the Genesys services must be deployed, refer to the Order of services deployment topic in the Setting up Genesys Multicloud CX private edition document. |

Content coming soon | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-OU/Current/CXCPEGuide/Planning | Draft:PEC-OU | Before you begin | Find out what to do before deploying CX Contact. | Outbound (CX Contact) | CXCPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | There are no limitations. Before you begin deploying the CX Contact service, it is assumed that the following prerequisites and optional tasks, if needed, are completed:

Prerequisites

Optional tasks

After you've completed the mandatory tasks, check the Third-party prerequisites. |

For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. See Helm charts and containers for CX Contact for the Helm chart version you must download for your release. CX Contact is the only service that runs in the CX Contact Docker container. The Helm charts included with the CX Contact release provision CX Contact and any Kubernetes infrastructure necessary for CX Contact to run. |

Set up Elasticsearch and Redis either as standalone services or within a single Kubernetes cluster. For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. |

CX Contact requires shared persistent storage and an associated storage class created by the cluster administrator. The Helm chart creates the ReadWriteMany (RWX) Persistent Volume Claim (PVC) that is used to store and share data among multiple CX Contact components. The minimum recommended PVC size is 100 GB. |

This topic describes network requirements and recommendations for CX Contact in private edition deployments:

Single namespace

Deploy CX Contact in a single namespace to prevent ingress/egress traffic from going through additional hops, due to firewalls, load balancers, or other network layers that introduce network latencies and overhead. Do not hardcode the namespace. If necessary, you can override it by using the Helm values or the standard --namespace= argument of the helm install command.

External connections

For information about external connections from the Kubernetes cluster to other systems, see Architecture. External connections also include:

Ingress

The CX Contact UI requires session stickiness. Use ingress-nginx as the ingress controller (see github.com).

Important: The CX Contact Helm chart contains default annotations for session stickiness only for ingress-nginx. If you are using a different ingress controller, refer to its documentation for session stickiness configuration.

Ingress SSL

If you are using Chrome 80 or later, cookies with the SameSite=None attribute must also have the Secure flag (see Chromium Blog). Therefore, Genesys recommends that you configure a valid SSL certificate on ingress.

Logging

Log rotation is required so that logs do not consume all of the available storage on the node. Kubernetes is currently not responsible for rotating logs. Log rotation can be handled by the Docker json-file log driver by setting the max-file and max-size options. For effective troubleshooting, the engineering team should provide the stdout logs of the pods (using the kubectl logs command). As a result, log retention is not very aggressive (see JSON file logging driver). For example:

For on-site debugging purposes, CX Contact logs can be collected and stored in Elasticsearch (for example, with an EFK stack; see medium.com).

Monitoring

CX Contact provides metrics that can be consumed by Prometheus and Grafana. Genesys recommends installing the Prometheus Operator (see github.com) in the cluster. The CX Contact Helm chart supports the creation of CustomResourceDefinitions that can be consumed by the Prometheus Operator. For more information about monitoring, see Observability in Outbound (CX Contact). |

CX Contact components operate with Genesys core services (v8.5 or v8.1) in the back end. All voice-processing components (Voice Microservice and shared services, such as GVP), and the GWS and Genesys Authentication services (mentioned below) must be deployed and running before you deploy the CX Contact service. See Order of services deployment. The following Genesys services and components are required:

Nexus is optional.

|

CX Contact does not support GDPR. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-REP/Current/GCXIPEGuide/Planning | Draft:PEC-REP | Before you begin deploying GCXI | Find out what to do before deploying Genesys Customer Experience Insights (GCXI). | Reporting | GCXIPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | GCXI can provide meaningful reports only if Genesys Info Mart and Reporting and Analytics Aggregates (RAA) are deployed and available. Deploy GCXI only after Genesys Info Mart and RAA. | For more information about how to download the Helm charts in JFrog Edge, see the suite-level documentation: Downloading your Genesys Multicloud CX containers. To learn what Helm chart version you must download for your release, see Helm charts and containers for Genesys Customer Experience Insights.

GCXI Containers

GCXI Helm Chart

Download the latest YAML files from the repository, or examine the attached files: Sample GCXI yaml files |

For more information about setting up your Genesys Multicloud CX private edition platform, including Kubernetes, Helm, and other prerequisites, see Software requirements.

|

GCXI installation requires a set of local Persistent Volumes (PVs). Kubernetes local volumes are directories on the host with specific properties: https://kubernetes.io/docs/concepts/storage/volumes/#local

Example usage: https://zhimin-wen.medium.com/local-volume-provision-242affd5efe2

Kubernetes provides a powerful volume plugin system, which enables Kubernetes workloads to use a wide variety of block and file storage to persist data. You can use the GCXI Helm chart to set up your own PVs, or you can configure PV dynamic provisioning in your cluster so that Kubernetes automatically creates PVs.

Volumes Design

GCXI installation uses the following PVC:

Preparing the environment

To prepare your environment, complete the following steps:

|

Ingress

Ingress annotations are supported in the values.yaml file (see line 317). Genesys recommends session stickiness to improve the user experience. Allowlisting is required for GCXI.

WAF Rules

WAF rules are defined in the variables.tf file (see line 245).

SMTP

The GCXI container and Helm chart support the environment variable

TLS

The GCXI container does not serve TLS natively. Ensure that your environment is configured to use a proxy with HTTPS offload. |

MicroStrategy Web is the user interface most often used for accessing, managing, and running the Genesys CX Insights reports. MicroStrategy Web certifies the latest versions, at the time of release, for the following web browsers:

To view updated information about supported browsers, see the MicroStrategy ReadMe. |

GCXI requires the following services:

|

GCXI can store Personally Identifiable Information (PII) in logs, history files, and reports (in scenarios where customers include PII data in reports). Genesys recommends that you do not capture PII in reports. If you do capture PII, it is your responsibility to remove any such report data within 21 days or less, if required by General Data Protection Regulation (GDPR) standards. For more information and relevant procedures, see: Genesys CX Insights Support for GDPR and the suite-level Link to come documentation. |

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-REP/Current/GCXIPEGuide/PlanningRAA | Draft:PEC-REP | Before you begin deploying RAA | Find out what to do before deploying Reporting and Analytics Aggregates (RAA). | Reporting | GCXIPEGuide | The RAA container works with the Genesys Info Mart database; deploy RAA only after you have deployed Genesys Info Mart. The Genesys Info Mart database schema must correspond to a compatible Genesys Info Mart version. Execute the following command to discover the required Genesys Info Mart release:

The RAA container runs RAA on Java 11 and is supplied with the following JDBC drivers:

Genesys recommends that you verify whether the provided driver is compatible with your database. If it is not, you can override the JDBC driver by copying an updated driver file to the folder lib\jdbc_driver_<RDBMS> within the mounted config volume, or by creating a link with the same name within the folder lib\jdbc_driver_<RDBMS> that points to a driver file stored on another volume (where <RDBMS> is the RDBMS used in your environment). This is possible because RAA is launched in a config folder, which is mounted in the container. |

To learn what Helm chart version you must download for your release, see Helm charts and containers for Genesys Customer Experience Insights. You can download the GCXI Helm charts from the following repository:

For more information about downloading containers, see: Downloading your Genesys Multicloud CX containers. |

For information about setting up your Genesys Multicloud CX private edition platform, including Kubernetes, Helm, and other prerequisites, see Software requirements. | This section describes the storage requirements for various volumes.

GIM secret volume

If raa.env.GCXI_GIM_DB__JSON is not specified, RAA mounts this volume to provide GIM connection details.

Alternatively, you can mount the CSI secret by using secretProviderClass in values.yaml:

Config volume

RAA mounts a config volume inside the container as the folder /genesys/raa_config. The folder is treated as a working directory; RAA reads the following files from it during startup:

RAA does not normally create any files in /genesys/raa_config at runtime, so the volume does not require a fast storage class. By default, the size limit is set to 50 MB. You can specify the storage class and size limit in values.yaml: ... The RAA Helm chart creates a Persistent Volume Claim (PVC). You can define a Persistent Volume (PV) separately by using the gcxi-raa chart, and bind that volume to the PVC by specifying the volume name in the raa.volumes.config.pvc.volumeName value in values.yaml:

Health volume

RAA uses the Health volume to store:

By default, the volume is limited to 50 MB. RAA periodically interacts with the volume at runtime, so Genesys does not recommend a slow storage class for this volume. You can specify the storage class and size limit in values.yaml:

The RAA Helm chart creates a PVC. You can define a PV separately by using the gcxi-raa chart, and bind that volume to the PVC by specifying the volume name in the raa.volumes.health.pvc.volumeName value in values.yaml: |

RAA interacts only with the Genesys Info Mart database. RAA can expose Prometheus metrics by way of Netcat. The aggregation pod has its own IP address and can run with one or two containers. For Helm tests, an additional IP address is required; each test pod runs one container. Genesys recommends that RAA be located in the same region as the Genesys Info Mart database.

Secrets

RAA secret information is defined in the values.yaml file (line 89). For information about configuring arbitrary UID, see Configure security. |

Not applicable. | RAA interacts with Genesys Info Mart database only. | Not applicable. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-REP/Current/GIMPEGuide/PlanningGCA | Draft:PEC-REP | Before you begin GCA deployment | Find out what to do before deploying GIM Config Adapter (GCA). | Reporting | GIMPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Instructions are provided for a single-tenant deployment. | To configure and deploy the GIM Config Adapter (GCA), you must obtain the Helm charts included with the GCA release. These Helm charts provision GCA plus any Kubernetes infrastructure needed for GCA to run. GCA and GCA monitoring are the only services that run in the GCA container. For information on downloading from the image repository, see Downloading your Genesys Multicloud CX containers. To find the correct Helm chart version for your release, see Helm charts and containers for Genesys Info Mart. |

For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. The following table lists the third-party prerequisites for GCA. |

GCA uses S3-compatible storage to store the GCA snapshot during processing. You must provision this S3-compatible storage in your environment. By default, GCA is configured to use Azure Blob Storage, but you can also use S3-compatible storage provided by other cloud platforms. Genesys expects you to use the same storage account for GSP and GCA. If you want to provision separate storage for GCA, follow the Create S3-compatible storage instructions for GSP to create similar S3-compatible storage for GCA. |

No special network requirements. |

For detailed information about the correct order of services deployment, see Order of services deployment. |

Not applicable. GCA does not store information beyond an ephemeral snapshot. | f05492f5-52ed-490a-b0d5-c318a4a7272b | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-REP/Current/GIMPEGuide/PlanningGIM | Draft:PEC-REP | Before you begin GIM deployment | Find out what to do before deploying GIM. | Reporting | GIMPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | Instructions are provided for a single-tenant deployment. | To configure and deploy Genesys Info Mart, you must obtain the Helm charts included with the Info Mart release. These Helm charts provision Info Mart plus any Kubernetes infrastructure Info Mart requires to run. Genesys Info Mart and GIM monitoring are the only services that run in the Info Mart container. For the correct Helm chart version for your release, see Helm charts and containers for Genesys Info Mart. For information on downloading from the image repository, see Downloading your Genesys Multicloud CX containers. |

For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. The following table lists the third-party prerequisites for GIM. |

GIM uses PostgreSQL for the Info Mart database and, optionally, uses object storage to store exported Info Mart data.

PostgreSQL: the Info Mart database

The Info Mart database stores data about agent and interaction activity, Outbound Contact campaigns, and usage information about other services in your contact center. A subset of tables and views created, maintained, and populated by Reporting and Analytics Aggregates (RAA) provides the aggregated data on which Genesys CX Insights (GCXI) reports are based. A sizing calculator for Genesys Multicloud CX private edition is under development. In the meantime, the interactive tool available for on-premises deployments might help you estimate the size of your Info Mart database; see Genesys Info Mart 8.5 Database Size Estimator. Genesys recommends a minimum of 3 IOPS per GB. For information about creating the Info Mart database, see Before you begin GIM deployment.
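The IOPS recommendation scales linearly with database size, so the minimum is the estimated size in GB multiplied by 3. A minimal Python sketch of that arithmetic follows; the function name min_iops is hypothetical, and the size you pass in is assumed to come from your own estimate (for example, from the Database Size Estimator).

```python
import math

# Genesys recommends a minimum of 3 IOPS per GB for the Info Mart database.
MIN_IOPS_PER_GB = 3


def min_iops(database_size_gb: float) -> int:
    """Return the minimum IOPS for an Info Mart database of the given size.

    Rounds up so that fractional sizes never fall below the 3 IOPS/GB floor.
    (Hypothetical helper for illustration only.)
    """
    if database_size_gb < 0:
        raise ValueError("database size must be non-negative")
    return math.ceil(database_size_gb * MIN_IOPS_PER_GB)
```

For example, min_iops(500) returns 1500, so a 500 GB Info Mart database would need storage that sustains at least 1,500 IOPS. Rounding up keeps fractional sizes from falling below the recommended floor.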

Create the Info Mart database

Use any database management tool to create the Info Mart ETL database and user.
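Any database management tool works for this step. As one possible approach, the following minimal Python sketch generates the two PostgreSQL statements (CREATE ROLE and CREATE DATABASE) that you could run in psql as a superuser. The database name gim, the user name gim_etl, and the password are hypothetical placeholders, the helper names are illustrative, and any additional privileges your deployment requires are not covered here.

```python
def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a PostgreSQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def info_mart_setup_sql(db_name: str, user: str, password: str) -> list[str]:
    """Return statements that create a login role and a database it owns.

    Hypothetical helper: the owner-equals-ETL-user layout is an assumption.
    """
    return [
        f"CREATE ROLE {quote_ident(user)} WITH LOGIN PASSWORD {quote_literal(password)};",
        f"CREATE DATABASE {quote_ident(db_name)} OWNER {quote_ident(user)};",
    ]
```

For example, info_mart_setup_sql("gim", "gim_etl", "change-me") returns CREATE ROLE "gim_etl" WITH LOGIN PASSWORD 'change-me'; followed by CREATE DATABASE "gim" OWNER "gim_etl";. Run the statements one at a time, because PostgreSQL does not allow CREATE DATABASE inside a transaction block.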

Object storage: Data Export packages

The GIM Data Export feature enables you to export data from the GIM database so it is available for other purposes. Unless you elect to store your exported data in a local directory, GIM data is exported to an object store. GIM supports export to either Azure Blob Storage or the S3-compatible storage provided by Google Cloud Platform (GCP). If you want to use S3-compatible storage, follow the Before you begin GSP deployment instructions for GSP to create the S3-compatible storage for GIM.

Important: GSP and GCA use object storage to store data during processing. For safety and security reasons, Genesys strongly recommends that you use a dedicated object storage account for the GIM persistent storage, and do not share the storage account created for GSP and GCA. GSP and GCA can share an account, and this is the expected deployment.

If you are not using object storage, you can configure GIM to store exported data in a local directory. In this case, you do not need to create the object storage. |

No special network requirements. |

For detailed information about the correct order of services deployment, see Order of services deployment. |

GIM provides full support for you to comply with Right of Access ("export") or Right of Erasure ("forget") requests from consumers and employees with respect to personally identifiable information (PII) in the Info Mart database. Genesys Info Mart is designed to comply with General Data Protection Regulation (GDPR) policies. Support for GDPR includes the following:

For more information about how Genesys Info Mart implements support for GDPR requests, see Genesys Info Mart Support for GDPR. For details about the Info Mart database tables and columns that potentially contain PII, see the description of the CTL_GDPR_HISTORY table in the Genesys Info Mart on-premises documentation. |

e65e00cb-c1c8-4fb8-9614-80ac07c3a4e3 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-REP/Current/GIMPEGuide/PlanningGSP | Draft:PEC-REP | Before you begin GSP deployment | Find out what to do before deploying GIM Stream Processor (GSP). | Reporting | GIMPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | To configure and deploy the GIM Stream Processor (GSP), you must obtain the Helm charts included with the GSP release. These Helm charts provision GSP plus any Kubernetes infrastructure GSP requires to run. GSP is the only service that runs in the GSP container. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. To find the correct Helm chart version for your release, see Helm charts and containers for Genesys Info Mart. |

For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. The following table lists the third-party prerequisites for GSP. |

GSP maintains internal “state,” such as GSP checkpoints, savepoints, and high availability data, which must be persisted (stored). When it starts, GSP reads its state from storage, which allows it to continue processing data without rereading Kafka topics from the beginning. GSP periodically updates its state as it processes incoming data. GSP uses S3-compatible storage to store persisted data. You must provision this S3-compatible storage in your environment. By default, GSP is configured to use Azure Blob Storage, but you can also use S3-compatible storage provided by other cloud platforms. Genesys expects you to use the same storage account for GSP and GCA.

Create S3-compatible storage

Genesys Info Mart has no special requirements for the storage buckets you create. Follow the instructions provided by the storage service provider of your choice to create the S3-compatible storage.

To enable the S3-compatible storage object, you populate Helm chart override values for the service (see ). To do this, you need to know details such as the endpoint information, access key, and secret.

Important: Note and securely store the bucket details, particularly the access key and secret, when you create the storage bucket. Depending on the cloud storage service you choose, you might not be able to recover this information later. |

No special network requirements. Network bandwidth must be sufficient to handle the volume of data to be transferred into and out of Kafka. | There are no strict dependencies between the Genesys Info Mart services, but the logic of your particular pipeline might require Genesys Info Mart services to be deployed in a particular order. Depending on the order of deployment, there might be temporary data inconsistencies until all the Genesys Info Mart services are operational. For example, GSP looks for the GCA snapshot when it starts; if GCA has not yet been deployed, GSP will encounter unknown configuration objects and resources until the snapshot becomes available. There are other private edition services you must deploy before Genesys Info Mart. For detailed information about the recommended order of services deployment, see Order of services deployment. |

Not applicable, provided your Kafka retention policies have not been set to more than 30 days. GSP does not store information beyond the ephemeral data used during processing.
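Kafka expresses time-based retention in milliseconds through the retention.ms topic setting, where -1 means no time limit. A minimal Python sketch of the 30-day check follows, assuming you have already collected the effective retention.ms value for each topic (for example, with the kafka-configs.sh tool); the helper names are hypothetical.

```python
# 30 days expressed in milliseconds, the unit of Kafka's retention.ms setting.
MAX_RETENTION_MS = 30 * 24 * 60 * 60 * 1000  # 2,592,000,000 ms


def exceeds_retention_limit(retention_ms: int) -> bool:
    """Return True if a topic's retention.ms is longer than 30 days.

    In Kafka, retention.ms = -1 means no time limit, which also exceeds
    the 30-day window.
    """
    return retention_ms == -1 or retention_ms > MAX_RETENTION_MS


def topics_over_limit(retention_by_topic: dict[str, int]) -> list[str]:
    """Return, sorted by name, the topics whose retention exceeds 30 days."""
    return sorted(
        topic
        for topic, ms in retention_by_topic.items()
        if exceeds_retention_limit(ms)
    )
```

For example, a topic at Kafka's default retention of 604800000 ms (7 days) passes the check, while a topic with retention.ms set to -1 is reported.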

GSP Kafka topics

For GSP, topics in Kafka represent various data domains, and GSP expects certain topics to be defined.

Unless Kafka has been configured to auto-create topics, you must ensure that all the Kafka topics GSP requires are created in Kafka. The following table shows the topic names GSP expects to be available. In this table, an entry in the Customizable GSP parameter column indicates that you can customize that topic name.
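If topics are not auto-created, one way to confirm that none are missing is to compare the expected topic names against the output of kafka-topics.sh --bootstrap-server <host:port> --list, which prints one topic name per line. A minimal Python sketch follows, assuming you supply the expected names from the GSP topic table yourself; the helper names are hypothetical.

```python
def parse_topic_list(output: str) -> set[str]:
    """Parse `kafka-topics.sh --list` output, which prints one topic per line."""
    return {line.strip() for line in output.splitlines() if line.strip()}


def missing_topics(expected: set[str], list_output: str) -> list[str]:
    """Return the expected topic names not yet defined in Kafka, sorted."""
    return sorted(expected - parse_topic_list(list_output))
```

For example, with expected names {"example-topic-1", "example-topic-2"} and a listing that contains only example-topic-1, missing_topics returns ["example-topic-2"]. These names are hypothetical placeholders, not actual GSP topic names.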

|

c39fe496-c79e-4846-b451-1bc8bedb126b | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PEC-REP/Current/PulsePEGuide/Planning | Draft:PEC-REP | Before you begin | Find out what to do before deploying Genesys Pulse. | Reporting | PulsePEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | There are no known limitations.

|

For more information about how to download the Helm charts in JFrog Edge, see the suite-level documentation: Downloading your Genesys Multicloud CX containers. To learn which Helm chart version you must download for your release, see Helm charts and containers for Genesys Pulse.

Genesys Pulse Containers

Genesys Pulse Helm Charts

|

The appropriate CLI must be installed. For more information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. |

Logs Volume

The logs volume stores log files:

Genesys Pulse Collector Health Volume

The Genesys Pulse Collector health volume provides non-persistent storage for the Genesys Pulse Collector health state files used for monitoring.

Stat Server Backup Volume

The Stat Server backup volume provides disk space for Stat Server state backups. It stores the server state between container restarts. |

No special requirements. | Ensure that the following services are deployed and running before you deploy Genesys Pulse:

Important: All listed services must be accessible from within the cluster where Genesys Pulse will be deployed. For more information, see Order of services deployment. |

Genesys Pulse supports the General Data Protection Regulation (GDPR). See Genesys Pulse Support for GDPR for details. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:PrivateEdition/Current/TenantPEGuide/Planning | Draft:PrivateEdition | Before you begin | Find out what to do before deploying the Tenant Service. | Genesys Multicloud CX Private Edition | TenantPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. See Helm charts and containers for Voice Microservices for the Helm chart version you must download for your release.

Containers

The Tenant Service has the following containers:

Helm charts

|

For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements. The following table lists the third-party prerequisites for the Tenant Service. |

For information about storage requirements for Voice Microservices, including the Tenant Service, see Storage requirements in the Voice Microservices Private Edition Guide. | For general network requirements, review the information on the suite-level Network settings page. | For detailed information about the correct order of services deployment, see Order of services deployment. The following prerequisites are required before deploying the Tenant Service:

In addition, if you expect to use Agent Setup or Workspace Web Edition after the tenant is deployed, Genesys recommends that you deploy the GWS Authentication Service before proceeding with the Tenant Service deployment.

Specific dependencies

The Tenant Service is dependent on the following platform endpoints:

The Tenant Service is dependent on the following service component endpoints:

|

Not applicable. | 5a34ac72-3fae-4368-afd8-5b899e1c52ba | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Draft:STRMS/Current/STRMSPEGuide/Planning | Draft:STRMS | Before you begin | Find out what to do before deploying Event Stream. | Event Stream | STRMSPEGuide | bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | See Helm charts and containers for Event Stream for the Helm chart version you must download for your release. For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers. |

Event Stream depends on several other Genesys services. Genesys recommends that you provision and configure Event Stream after these services have been set up. That way, if any issues arise while you provision Event Stream, you can be reasonably sure that the fault lies in how Event Stream is provisioned rather than in one of the other services.