Deploy Designer

Learn how to deploy Designer. This topic explains the deployment process for Designer and DAS.

Preparation

Before you deploy Designer and DAS using Helm charts, complete the following preparatory steps:

- Ensure the Helm client is installed.

- Set up an Ingress Controller, if not already done.

- Set up an NFS server, if not already done.

- Create Persistent Volumes - a sample YAML file is provided in the Designer manifest package.

- Download the Designer and DAS Docker images and push them to the local Docker registry.

- Download the Designer package and extract it to the current working directory.

- Configure Designer and DAS value overrides (designer-values.yaml and das-values.yaml); ensure the mandatory settings are configured. If the Blue-Green deployment process is used, Ingress settings are explained in the Blue-Green deployment section.
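The checklist above can be partly verified from the command line before any Helm command is run. The following is a minimal sketch, not part of the Designer package: the function names missing_tools and missing_files are hypothetical, kubectl is checked only because later sections use it, and the values file names are the ones mentioned in the last step.

```shell
#!/usr/bin/env bash
# Print the name of every tool in the argument list that is not on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Print the name of every file in the argument list that does not exist.
missing_files() {
  local file
  for file in "$@"; do
    [ -f "$file" ] || printf '%s\n' "$file"
  done
}

# Report only; nothing is installed or modified.
echo "Missing tools: $(missing_tools helm kubectl | tr '\n' ' ')"
echo "Missing files: $(missing_files designer-values.yaml das-values.yaml | tr '\n' ' ')"
```

An empty list after each label means that particular check passed. The Ingress Controller, NFS server, and Persistent Volumes still have to be verified separately.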

Set up Ingress

Given below are the requirements to set up an Ingress for the Designer UI:

- Cookie name - designer.session.

- Header requirements - client IP & redirect, passthrough.

- Session stickiness - enabled.

- Allowlisting - optional.

- TLS for ingress - optional (should be able to enable or disable TLS on the connection).

Set up Application Gateway (WAF) for Designer

Designer Ingress must be exposed to the internet using Application Gateway enabled with WAF.

When WAF is enabled, consider the following exception in the WAF rules for Designer:

- Designer sends a JSON payload with data, for example, {profile . {} }. Sometimes, this is detected as OSFileAccessAttempt, which is a false positive detection. Disable this rule if you encounter a similar issue in your WAF setup.

Storage

Designer storage

Designer requires storage to store Designer application workspaces. Designer storage is a shared file storage that will be used by the Designer and DAS services.

- Capacity - 1 TiB

- Tier - Premium

- Baseline IO/s - 1424

- Burst IO/s - 4000

- Egress Rate - 121.4 MiBytes/s

- Ingress Rate - 81.0 MiBytes/s

DAS storage

If the Designer workspace is stored in a cloud storage system such as Azure Files, then the data must be synced to the DAS pods using the Designer-Sync service. In this case, DAS must use the StatefulSet deployment type. In the DAS StatefulSet pods, each pod must be attached to a premium SSD disk to store the workspace.

- Size - > 500GiB

- Max IOPS (Max IOPS w/ bursting) - 2,300 (3,500)

- Max throughput (Max throughput w/ bursting) - 150 MB/second (170 MB/second)

Permission considerations for Designer and DAS storage

NFS

For NFS RWX storage, the mount path should be owned by genesys:genesys, that is, 500:500, with 0777 permissions. This can be achieved using either of the following methods:

- From the NFS server, execute the chmod -R 777 <export_path> and chown -R 500:500 <export_path> commands to set the required permissions.

- Create a dummy Linux-based pod that mounts the NFS storage. From the pod, execute the chmod -R 777 <mount_path> and chown -R 500:500 <mount_path> commands. This sets the required permissions. However, this method might require the Linux-based dummy pod to be run as a privileged pod, that is, as root.
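After either method has been applied, the result can be checked without changing anything. The sketch below assumes GNU stat (the -c option) and uses a hypothetical mount path, /mnt/designer-workspace. It inspects only the top-level directory, not every file beneath it.

```shell
# Print "<uid>:<gid> <mode>" for a path (GNU stat syntax; an assumption).
owner_and_mode() {
  stat -c '%u:%g %a' "$1"
}

# Succeed only if the path is owned by 500:500 with mode 777.
has_designer_perms() {
  [ "$(owner_and_mode "$1")" = "500:500 777" ]
}

# Hypothetical mount path; override by exporting MOUNT_PATH.
MOUNT_PATH="${MOUNT_PATH:-/mnt/designer-workspace}"
if [ -d "$MOUNT_PATH" ] && has_designer_perms "$MOUNT_PATH"; then
  echo "$MOUNT_PATH: ownership and mode look correct"
else
  echo "$MOUNT_PATH: run chown -R 500:500 and chmod -R 777 on this path"
fi
```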

SMB / CIFS

For SMB / CIFS based RWX storage, for instance, an Azure file share, the mountOptions below must be set in the StorageClass or in the PersistentVolume template (using the designer.volumes.workspacePv.mountOptions Helm value). Setting mountOptions in the storage class template also works, though it is not recommended.

Kubernetes clusters

Usually, the Designer and DAS pods are run with UID and GID as 500.

mountOptions

- dir_mode=0777

- file_mode=0777

- uid=500

- gid=500

- mfsymlinks

- cache=strict

OpenShift clusters

In OpenShift, where pods can run with an arbitrary UID, replace the uid in mountOptions with the UID assigned to the project in OpenShift and set the gid to 0.

mountOptions

- dir_mode=0777

- file_mode=0777

- uid=<random_uid_of_the_project>

- gid=0

- mfsymlinks

- cache=strict

Set up Secrets

Secrets are required by the Designer service to connect to GWS and Redis (if you are using them).

GWS Secrets:

- GWS provides a Client ID and secrets to all clients that can be connected. You can create Secrets for the Designer client as specified in the Set up secrets for Designer section below.

Redis password:

- If Designer is connected to Redis, you must provide the Redis password to Designer to authenticate the connection.

Set up Secrets for Designer

Use the designer.designerSecrets parameter in the values.yaml file and configure Secrets as follows:

designerSecrets:
  enabled: true
  secrets:
    DES_GWS_CLIENT_ID: xxxx
    DES_GWS_CLIENT_SECRET: xxxx
    DES_REDIS_PASSWORD: xxxxx

Deployment strategies

Designer supports the following deployment strategies:

- Rolling Upgrade (default).

- Blue-Green (recommended).

DAS (Designer Application Server) supports the following deployment strategies:

- Rolling Upgrade (default).

- Blue-Green (recommended).

- Canary (must be used along with Blue-Green and is recommended in production).

Rolling Upgrade deployment

The rolling deployment is the standard default deployment to Kubernetes. It works gradually, replacing pods of the previous version of your application with pods of the new version one at a time, without any cluster downtime. It is the default upgrade mechanism for both Designer and DAS.

Designer

Initial deployment

To perform the initial deployment for a rolling upgrade in Designer, use the Helm command given below. The values.yaml file can be created as required.

helm upgrade --install designer -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.image.tag=9.0.1xx.xx.xx

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.image.tag=9.0.1xx.xx.xx - This is the new Designer version to be installed, for example, 9.0.111.05.5.

Upgrade

To perform an upgrade, update the image version in the designer-values.yaml file, or set it with the --set flag as in the command given below. Then use this Helm command to perform the upgrade:

helm upgrade --install designer -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.image.tag=9.0.1xx.xx.xx

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.image.tag=9.0.1xx.xx.xx - This is the new Designer version to be installed, for example, 9.0.111.05.5.

Rollback

To perform a rollback, downgrade the image version in the designer-values.yaml file, or set it with the --set flag as in the command given below. Then use this Helm command to perform the rollback:

helm upgrade --install designer -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.image.tag=9.0.1xx.xx.xx

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.image.tag=9.0.1xx.xx.xx - This is the Designer version to be rolled back to, for example, 9.0.111.05.5.

DAS

Initial deployment

To perform the initial deployment for a rolling upgrade in DAS, use the Helm command given below. The values.yaml file can be created as required.

helm upgrade --install designer-das -f designer-das-values.yaml designer-das-100.0.112+xxxx.tgz --set das.image.tag=9.0.1xx.xx.xx

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.image.tag=9.0.1xx.xx.xx - This is the new DAS version to be installed, for example, 9.0.111.05.5.

Upgrade

To perform an upgrade, update the image version in the designer-das-values.yaml file, or set it with the --set flag as in the command given below. Then use this Helm command to perform the upgrade:

helm upgrade --install designer-das -f designer-das-values.yaml designer-das-100.0.112+xxxx.tgz --set das.image.tag=9.0.1xx.xx.xx

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.image.tag=9.0.1xx.xx.xx - This is the new DAS version to be installed, for example, 9.0.111.05.5.

Rollback

To perform a rollback, downgrade the image version in the designer-das-values.yaml file, or set it with the --set flag as in the command given below. Then use this Helm command to perform the rollback:

helm upgrade --install designer-das -f designer-das-values.yaml designer-das-100.0.112+xxxx.tgz --set das.image.tag=9.0.1xx.xx.xx

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.image.tag=9.0.1xx.xx.xx - This is the DAS version to be rolled back to, for example, 9.0.111.05.5.
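In the rolling strategy, the initial deployment, upgrade, and rollback commands shown above are the same Helm invocation and differ only in the image tag. The sketch below makes that explicit with a hypothetical helper, rolling_cmd, which only prints the command. The chart archive names and tags are the placeholders used in the examples above.

```shell
# Print the Helm command for a rolling deployment of the given image tag.
# Arguments: release name, values file, chart archive, image-tag key, image tag.
rolling_cmd() {
  printf 'helm upgrade --install %s -f %s %s --set %s=%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Placeholders copied from the examples above; substitute real versions.
rolling_cmd designer designer-values.yaml 'designer-100.0.112+xxxx.tgz' designer.image.tag 9.0.1xx.xx.xx
rolling_cmd designer-das designer-das-values.yaml 'designer-das-100.0.112+xxxx.tgz' das.image.tag 9.0.1xx.xx.xx
```

Passing a newer tag yields an upgrade and passing an older tag yields a rollback.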

Blue-Green deployment

Designer

Strategy

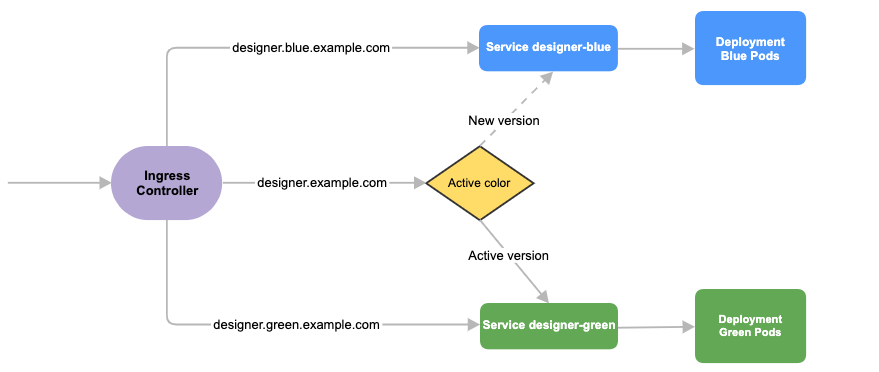

Blue-Green deployment is a release management technique that reduces risk and minimizes downtime. It uses two production environments, known as Blue and Green or active and inactive, to provide reliable testing, continuous no-outage upgrades, and instant rollbacks. When a new release needs to be rolled out, an identical deployment of the application is created using the Helm package. After testing is completed, the traffic is moved to the newly created deployment, which becomes the active environment, and the old environment becomes inactive. This ensures that a fast rollback is possible by simply changing the route if a new issue is found with live traffic. The old inactive deployment is removed once the new active deployment becomes stable.

Service cutover is done by updating the Ingress rules. The diagram below shows the high-level approach to how traffic can be routed to Blue and Green deployments with Ingress rules.

Preparation

Before you deploy Designer using the blue-green deployment strategy, complete the following preparatory steps:

- Create 3 hostnames as given below. The blue service hostname must contain the string blue. For example, designer.blue.example.com or designer-blue.example.com. The green service hostname must contain the string green. For example, designer.green.example.com or designer-green.example.com. The blue/green services can be accessed separately with the blue/green hostnames:

designer.example.com - For the production host URL, this is used for external access.

designer.blue.example.com - For the blue service testing.

designer.green.example.com - For the green service testing.

- Configure the hostnames in the designer-values.yaml file under ingress. Annotations and paths can be modified as required.

ingress:
  enabled: true
  annotations: {}
  paths: [/]
  hosts:
    - designer.example.com
    - designer.blue.example.com
    - designer.green.example.com

Initial deployment

The shared resources, Ingress and persistent volume claims (PVC), must be created before deploying the Designer service, because they are shared between the blue and green services. These resources are not required for subsequent upgrades. The required values are passed using the --set flag in the following steps. Values can also be changed directly in the values.yaml file.

- Create Persistent Volume Claims required for the Designer service (assuming the volume service name is designer-volume).

helm upgrade --install designer-volume -f designer-values.yaml designer-9.0.xx.tgz --set designer.deployment.strategy=blue-green-volume

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.deployment.strategy=blue-green-volume - This denotes that the Helm install will create a persistent volume claim in the blue/green strategy.

- Create Ingress rules for the Designer service (assuming the ingress service name will be designer-ingress):

helm upgrade --install designer-ingress -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green-ingress --set designer.deployment.color=green

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.deployment.strategy=blue-green-ingress - This denotes that the Helm install will create ingress rules for the Designer service.

designer.deployment.color=green - This denotes that the current production (active) color is green.

- Deploy the Designer service color selected in step 2. In this case, green is selected and the service name is assumed to be designer-green:

helm upgrade --install designer-green -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green --set designer.image.tag=9.0.1xx.xx.xx --set designer.deployment.color=green

Upgrade

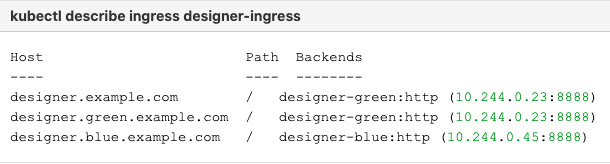

- Identify the current production color by checking the Designer ingress rules (kubectl describe ingress designer-ingress). Green is the production color in the below example as the production host name points to the green service.

- Deploy the Designer service on to the non-production color. In the above example, blue is the non-production color and assuming the service name will be designer-blue:

helm upgrade --install designer-blue -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green --set designer.image.tag=9.0.1xx.xx.xx --set designer.deployment.color=blue

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.deployment.strategy=blue-green - This denotes that the Designer service is installed using the blue-green strategy.

designer.image.tag=9.0.1xx.xx.xx - This denotes the new Designer version to be installed, for example, 9.0.116.08.12.

designer.deployment.color=blue - This denotes that the blue color service is installed.

The non-production color can be accessed with the non-production host name (for example, designer.blue.example.com). Testing can be done using this URL.

NodePort Service

The designer-green release creates a service called designer-green and the designer-blue release creates a service called designer-blue. If you are using NodePort services, ensure that the value of designer.service.nodePort is not the same for both the releases. In other words, you should assign dedicated node ports for the releases. The default value for designer.service.nodePort is 30180. If this was applied to designer-green, use a different value for designer-blue, for example, 30181. Use the below helm command to achieve this:

helm upgrade --install designer-blue -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green --set designer.image.tag=9.0.1xx.xx.xx --set designer.deployment.color=blue --set designer.service.nodePort=30181
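The choice of target color and node port can be derived from the active color instead of being typed by hand. The following sketch is illustrative only: other_color and node_port_for are hypothetical helpers, and the mapping of green to 30180 and blue to 30181 simply follows the example values in the text. The command is printed, not executed.

```shell
# Return the other color of a blue/green pair.
other_color() {
  case "$1" in
    blue)  echo green ;;
    green) echo blue ;;
    *)     echo "unknown color: $1" >&2; return 1 ;;
  esac
}

# Map each color to a dedicated node port. The mapping is an illustrative
# choice based on the example values above, not something the chart enforces.
node_port_for() {
  case "$1" in
    green) echo 30180 ;;
    blue)  echo 30181 ;;
    *)     echo "unknown color: $1" >&2; return 1 ;;
  esac
}

active=green                       # current production color
target="$(other_color "$active")"  # color to deploy the new version on

# Print the command rather than running it.
echo "helm upgrade --install designer-$target -f designer-values.yaml designer-100.0.112+xxxx.tgz" \
     "--set designer.deployment.strategy=blue-green --set designer.image.tag=9.0.1xx.xx.xx" \
     "--set designer.deployment.color=$target --set designer.service.nodePort=$(node_port_for "$target")"
```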

Cutover

Once testing is completed on the non-production color, traffic can be moved to the new version by updating the Ingress rules:

- Update the Designer Ingress with the new deployment color by running the following command (in this case, blue is the new deployment color, that is, the non-production color):

helm upgrade --install designer-ingress -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green-ingress --set designer.deployment.color=blue

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.deployment.strategy=blue-green-ingress - This denotes that the Helm install will create ingress rules for the Designer service.

designer.deployment.color=blue - This denotes that the current production (active) color is blue.

- Verify the ingress rules by running the following command:

kubectl describe ingress designer-ingress

The production host name must point to the new color service.

Rollback

If the upgrade must be rolled back, the ingress rules can be modified to point to the old deployment pods (green, in this example) by performing a cutover again.

- Perform a cutover using the following command:

helm upgrade --install designer-ingress -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green-ingress --set designer.deployment.color=green

The values.yaml overrides passed as an argument to the above Helm upgrade command:

designer.deployment.strategy=blue-green-ingress - This denotes that the Helm install will create Ingress rules for the Designer service.

designer.deployment.color=green - This denotes that the current production (active) color is green.

- Verify the Ingress rules by running the following command:

kubectl describe ingress designer-ingress

The production host name must point to the green service.
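Cutover and rollback for Designer are the same Ingress update with a different color value. A minimal sketch, using a hypothetical helper named designer_cutover_cmd that validates the color and prints the command from the steps above without running it:

```shell
# Print the Helm command that points the production host at the given color.
designer_cutover_cmd() {
  case "$1" in
    blue|green) ;;
    *) echo "color must be blue or green" >&2; return 1 ;;
  esac
  printf '%s\n' "helm upgrade --install designer-ingress -f designer-values.yaml designer-100.0.112+xxxx.tgz --set designer.deployment.strategy=blue-green-ingress --set designer.deployment.color=$1"
}

designer_cutover_cmd blue    # cutover to the new deployment
designer_cutover_cmd green   # rollback to the previous deployment
```

In both cases, kubectl describe ingress designer-ingress remains the way to confirm which service the production host name points to.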

DAS

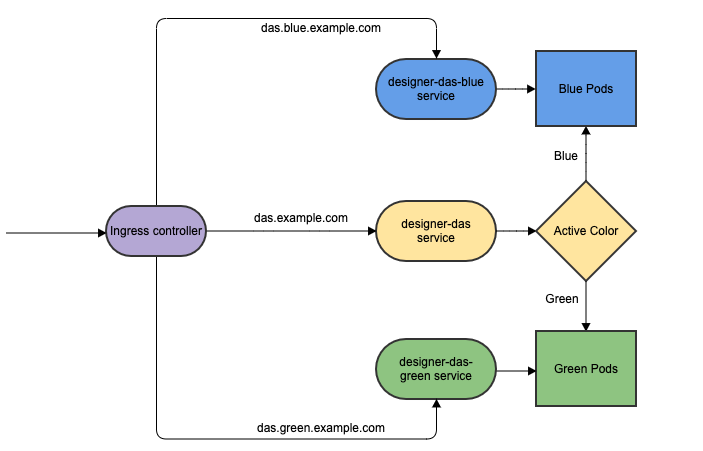

Strategy

As with Designer, the Blue-Green strategy can be adopted for DAS as well. The Blue-Green architecture used for DAS is given below. Here, the cutover mechanism is controlled by Service, the Kubernetes manifest responsible for exposing the pods. The Ingress, when enabled, will point to the appropriate service based on the URL.

Initial deployment

The Ingress must be created before deploying the DAS service, because it is shared between the blue and green services. The Ingress is not required for subsequent upgrades. The required values are passed using the --set flag in the following steps. Values can also be changed directly in the values.yaml file.

- Deploy initial DAS pods and other resources by choosing an active color, in this example, green. Use the below command to create a designer-das-green service:

helm upgrade --install designer-das-green -f designer-das-values.yaml designer-das-100.0.106+xxxx.tgz --set das.deployment.strategy=blue-green --set das.image.tag=9.0.1xx.xx.xx --set das.deployment.color=green

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.deployment.strategy=blue-green - This denotes that the DAS service will be installed using the blue-green deployment strategy.

das.image.tag=9.0.1xx.xx.xx - This denotes the DAS version to be installed, for example, 9.0.111.04.4.

das.deployment.color=green - This denotes that the green color service is installed.

- Once the initial deployment is done, the pods have to be exposed to the designer-das service. Execute the following command to create the designer-das service:

helm upgrade --install designer-das designer-das-100.0.106+xxx.tgz -f designer-das-values.yaml --set das.deployment.strategy=blue-green-service --set das.deployment.color=green

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.deployment.strategy=blue-green-service - This denotes that the designer-das service will be installed and exposed to the active color pods.

das.deployment.color=green - This denotes that the designer-das service will point to green pods.

NodePort Service

The designer-das-green release creates a service called designer-das-green and the designer-das-blue release creates a service called designer-das-blue. If you are using NodePort services, ensure that the value of das.service.nodePort is not the same for both the releases. In other words, you should assign dedicated node ports for the releases. The default value for das.service.nodePort is 30280. If this was applied to designer-das-green, use a different value for designer-das-blue, for example, 30281. Use the below Helm command to achieve this:

helm upgrade --install designer-das designer-das-100.0.106+xxx.tgz -f designer-das-values.yaml --set das.deployment.strategy=blue-green-service --set das.deployment.color=green --set das.service.nodePort=30281

Canary

Canary is optional and is only used along with Blue-Green. It is recommended in production. Canary pods are generally used to test new versions of images with live traffic. If you are not opting for Canary, skip the steps in this section.

Canary deployment

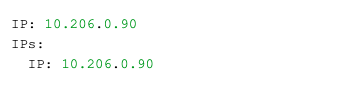

- Identify the current production color by checking the designer-das service selector labels (kubectl describe service designer-das). Green is the production color in the below example as the selector label is color=green.

- To deploy canary pods, the das.deployment.strategy value must be set to canary in the designer-das-values.yaml file or using the --set flag as shown in the command below:

helm upgrade --install designer-das-canary -f das-values.yaml designer-das-100.0.106+xxxx.tgz --set das.deployment.strategy=canary --set das.image.tag=9.0.1xx.xx.xx --set das.deployment.color=green

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.deployment.strategy=canary- This denotes that the Helm install will create canary pods.

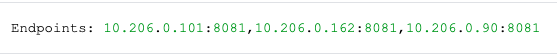

das.deployment.color=green- This denotes that the current production (active) color is green.ImportantTo make sure Canary pods receive live traffic, they have to be exposed to thedesigner-dasservice by settingdas.deployment.color=<active_color>, which is obtained from step 1. - Once canary pods are up and running, ensure that the designer-das service points to the canary pods using the

kubectl describe svc designer-dascommand.

The IP address present in the Endpoints must match the IP address of the canary pod. The canary pod's IP address is obtained using thekubectl describe pod <canary_pod_name>command.
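The endpoint comparison in the last step can be scripted. The sketch below uses a hypothetical helper, ip_in_endpoints, and sample IP addresses that are not taken from any real cluster. The commented kubectl get commands with -o jsonpath are one possible way to collect the same values that kubectl describe shows.

```shell
# Succeed if the pod IP appears in a whitespace-separated list of endpoint IPs.
ip_in_endpoints() {
  local pod_ip="$1" endpoint_ips="$2" ip
  for ip in $endpoint_ips; do   # intentional word splitting on whitespace
    if [ "$ip" = "$pod_ip" ]; then
      return 0
    fi
  done
  return 1
}

# Against a live cluster the inputs could be gathered as follows (not run here):
#   endpoint_ips="$(kubectl get endpoints designer-das -o jsonpath='{.subsets[*].addresses[*].ip}')"
#   pod_ip="$(kubectl get pod <canary_pod_name> -o jsonpath='{.status.podIP}')"
# Sample values for illustration only:
endpoint_ips="10.0.0.11 10.0.0.12 10.0.0.27"
pod_ip="10.0.0.27"

if ip_in_endpoints "$pod_ip" "$endpoint_ips"; then
  echo "canary pod $pod_ip is behind designer-das"
else
  echo "canary pod $pod_ip is NOT behind designer-das"
fi
```

The comparison is an exact string match per address, so 10.0.0.2 does not match 10.0.0.27.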

Cleaning up

After completing canary testing, the canary pods must be cleaned up.

To clean up, set das.deployment.replicaCount to 0 and upgrade the release. The value can be changed in the designer-das-values.yaml file or passed with the --set flag as follows:

helm upgrade --install designer-das-canary -f das-values.yaml designer-das-100.0.106+xxxx.tgz --set das.deployment.strategy=canary --set das.image.tag=9.0.1xx.xx.xx --set das.deployment.color=blue --set das.deployment.replicaCount=0

Upgrade

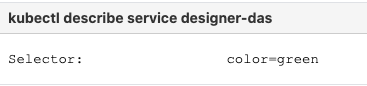

- Identify the current production color by checking the designer-das service selector labels (kubectl describe service designer-das). Green is the production color in the below example as the selector label is color=green.

- Deploy the DAS service on to the non-production color. For the above example, blue is the non-production color (assuming the service name is designer-das-blue):

helm upgrade --install designer-das-blue -f das-values.yaml designer-das-100.0.106+xxxx.tgz --set das.deployment.strategy=blue-green --set das.image.tag=9.0.1xx.xx.xx --set das.deployment.color=blue

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.deployment.strategy=blue-green - This denotes that the DAS service is installed using the blue-green strategy.

das.image.tag=9.0.1xx.xx.xx - This denotes the new DAS version to be installed, for example, 9.0.111.05.5.

das.deployment.color=blue - This denotes that the blue color service is installed.

The non-production color can be accessed with the non-production service name.
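The two upgrade steps above (read the active color from the service selector, then deploy to the other color) can be sketched as follows. The helper names are hypothetical, and the sample selector string is made up: only the color label is described in this document, and the other label in the sample is a placeholder. The Helm command is printed, not executed.

```shell
# Extract the value of the "color" label from a comma-separated selector
# string such as "service=designer-das,color=green".
color_from_selector() {
  printf '%s\n' "$1" | tr ',' '\n' | sed -n 's/^color=//p'
}

# Return the non-production color for a given production color.
inactive_color() {
  case "$1" in
    blue)  echo green ;;
    green) echo blue ;;
    *)     echo "unknown color: $1" >&2; return 1 ;;
  esac
}

# On a live cluster the color could be read directly (not run here):
#   kubectl get service designer-das -o jsonpath='{.spec.selector.color}'
selector="service=designer-das,color=green"   # sample value, not from a cluster
active="$(color_from_selector "$selector")"
target="$(inactive_color "$active")"

echo "active=$active target=$target"
echo "helm upgrade --install designer-das-$target -f das-values.yaml designer-das-100.0.106+xxxx.tgz" \
     "--set das.deployment.strategy=blue-green --set das.image.tag=9.0.1xx.xx.xx --set das.deployment.color=$target"
```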

Cutover

Once testing is completed on the non-production color, traffic can be moved to the new version by updating the designer-das service.

- Update the designer-das service with the new deployment color by executing the below command. In this example, blue is the new deployment color (non-production color).

helm upgrade --install designer-das-service -f designer-das-values.yaml designer-das-100.0.106+xxxx.tgz --set das.deployment.strategy=blue-green-service --set das.deployment.color=blue

- Verify the service by executing the kubectl describe service designer-das command. The type label must have the active color's label, that is, color=blue.

Rollback

- If the upgrade must be rolled back, cutover has to be performed again to make the service point to the old deployment (green). Use the below command to perform the cutover:

helm upgrade --install designer-das-service -f designer-das-values.yaml designer-das-100.0.106+xxxx.tgz --set das.deployment.strategy=blue-green-service --set das.deployment.color=green

The values.yaml overrides passed as an argument to the above Helm upgrade command:

das.deployment.strategy=blue-green-service - This denotes that the Helm install will update the designer-das service so that it is exposed to the active color pods.

das.deployment.color=green - This denotes that the current production (active) color is green.

- Verify the service by executing the kubectl describe service designer-das command. The type label must have the active color's label, that is, color=green.

Validations and checks

Here are some common validations and checks that can be performed to know if the deployment was successful.

- Check if the application pods are in running state by using the kubectl get pods command.

- Try to connect to the Designer or DAS URL as per the ingress rules from your browser. You must be able to access the Designer and DAS webpages.
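The pod check can be reduced to a list of pods that need attention. The sketch below assumes the default kubectl get pods column order (NAME, READY, STATUS, RESTARTS, AGE) and uses made-up pod names. The helper not_running is hypothetical.

```shell
# Read `kubectl get pods --no-headers` output on stdin and print the names of
# pods whose STATUS column (third field) is not "Running".
not_running() {
  awk '$3 != "Running" { print $1 }'
}

# Sample output for illustration; on a cluster, pipe the real command instead:
#   kubectl get pods --no-headers | not_running
sample='designer-green-7c9d5b6f4-abcde   1/1   Running            0   5m
designer-das-green-0              1/1   Running            0   5m
designer-blue-5f7d8c9b6-xyz12     0/1   CrashLoopBackOff   3   2m'

printf '%s\n' "$sample" | not_running
```

With the sample input, only designer-blue-5f7d8c9b6-xyz12 is printed. Empty output means every listed pod is in the Running state.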

Post deployment procedures

Upgrading the Designer workspace

- It is mandatory to upgrade the Designer workspace for all Contact Center IDs.

- Genesys strongly recommends that you first back up the current workspace before performing the upgrade. This ensures that you can roll back to a previous state, if required.

Workspace resources must be upgraded after cutover. This will upgrade the system resources in the Designer workspace:

- Log in to one of the Designer pods using the kubectl exec -it <pod_name> bash command.

- Execute the following migration command (this will create new directories/new files introduced in the new version):

node ./bin/cli.js workspace-upgrade -m -t <contact_center_id>

- Execute the workspace resource upgrade command (this will upgrade system resources, such as system service PHP files, internal audio files and callback resources):

node ./bin/cli.js workspace-upgrade -t <contact_center_id>

In the above command, contact_center_id is the Contact Center ID created in GWS for this tenant. Workspace resources are located under the Contact Center ID folder (/workspaces/<ccid>/workspace).
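Because the upgrade is mandatory for every Contact Center ID, the two commands have to be repeated per ID. The sketch below prints the command pairs for a list of IDs using a hypothetical helper, workspace_upgrade_cmds. It assumes that running the CLI through kubectl exec without an interactive shell starts in the same working directory as the bash session described above. The pod name and IDs are placeholders.

```shell
# Print the migration and resource-upgrade commands for each Contact Center ID.
# Arguments: pod name, then one or more Contact Center IDs.
workspace_upgrade_cmds() {
  local pod="$1" ccid
  shift
  for ccid in "$@"; do
    echo "kubectl exec $pod -- node ./bin/cli.js workspace-upgrade -m -t $ccid"
    echo "kubectl exec $pod -- node ./bin/cli.js workspace-upgrade -t $ccid"
  done
}

# Placeholder pod name and IDs; review the printed commands before running them.
workspace_upgrade_cmds designer-pod-0 ccid-1 ccid-2
```

Printing first keeps the sketch safe to run anywhere. The migration command (-m) is listed before the resource upgrade for each ID, matching the order of the steps.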

Updating the flowsettings file

Post deployment, the flowsettings.json file can be modified through a Helm install as follows:

- Extract the Designer Helm Chart and find the flowsettings.yaml file under the Designer Chart > Config folder.

- Modify the necessary settings (refer to the Post deployment configuration settings reference table for the different settings and their allowed values).

- Execute the below Helm upgrade command on the non-production color service. It can be done as part of the Designer upgrade by passing the flowsettings.yaml file using the --values flag. In this case, a new Designer version can be used for the upgrade. If it is only a flowsettings.json update, the same Designer version is used.

helm upgrade --install designer-blue -f designer-values.yaml -f flowsettings.yaml designer-9.0.xx.tgz --set designer.deployment.strategy=blue-green --set designer.image.tag=9.0.1xx.xx.xx --set designer.deployment.color=blue

- Once testing is completed on the non-production service, perform the cutover step as mentioned in the Cutover section (Designer Blue-Green deployment). After cutover, the production service will contain the updated settings. The non-active color Designer must also be updated with the updated settings after the cutover.