Difference between revisions of "PrivateEdition/Current/PEGuide/ConfigLog"


Revision as of 11:00, September 13, 2022

Provides an overview of logging architecture in Genesys Multicloud CX private edition, different types of logging mechanisms, and related configurations.

Logging approaches and configuration

This section explains the logging approaches that Genesys Multicloud CX services use to write log files containing the diagnostic information needed to troubleshoot issues that may arise. Support of Genesys services relies on access to these application logs.

OpenShift logging

OpenShift logging is backed by Fluentd, Elasticsearch, and Kibana, which handle scraping, indexing, and UI access to logs in an OpenShift cluster. A Fluentd DaemonSet runs on each node in the cluster and scrapes logs, through /var/log/pods, from containers that are actively writing to a stdout stream. Output from Fluentd is piped to an Elasticsearch cluster, which collects and indexes all relevant log data. Kibana provides a UI frontend for searching the indexed logs generated within the cluster.
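Because Fluentd picks up whatever a container writes to stdout, a service only needs to emit one log record per line for its logs to reach Elasticsearch. The following is a minimal sketch of that idea, assuming JSON as the structured format. The field names (`timestamp`, `level`, `logger`, `message`) and the logger name `example-service` are illustrative assumptions, not a schema defined by Genesys.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            # Field names are illustrative assumptions, not a required schema.
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_stdout_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_stdout_logger("example-service")  # hypothetical service name
    log.info("service started")
```

Writing one JSON object per line keeps each record self-contained, so a node-level collector can forward it without multi-line parsing.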

For more details, refer to Solution-level logging approaches.

Logging architecture

This section explains the logging architecture of Genesys Multicloud CX private edition in detail.

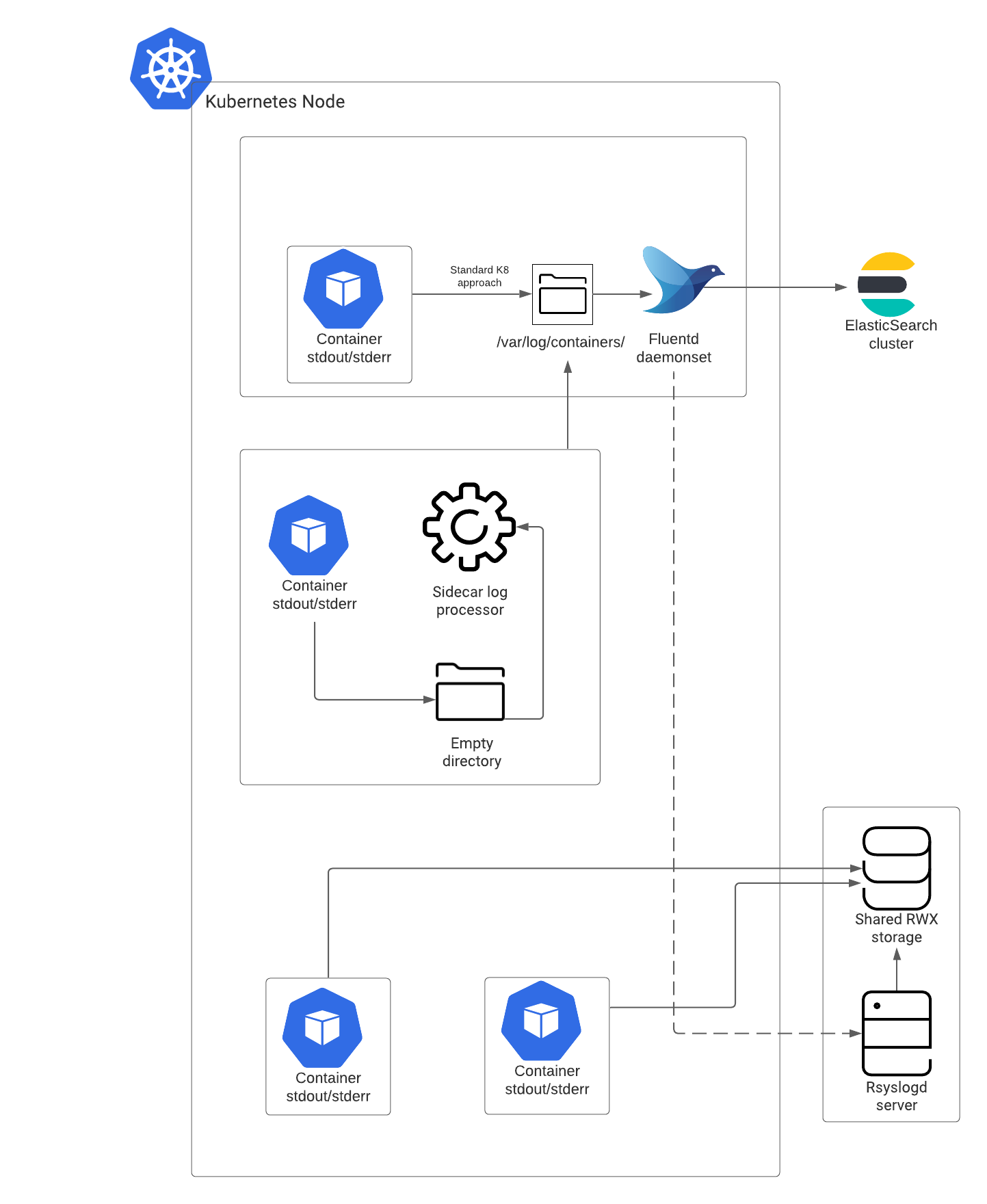

Let's explore the logging architecture, the components involved, and its functionality through the following diagram.

Logging architecture components

Elasticsearch cluster

An Elasticsearch cluster deployed on multiple nodes aggregates the structured logs from Fluentd and indexes them. This includes the logs from services that follow the Secondary and Complementary logging methods. You can use a log visualizer tool such as Kibana to view, search, or filter the indexed logs from Elasticsearch.

Fluentd / Fluent-bit

Fluentd/Fluent-bit is the log collector in OpenShift Container Platform. It collects logs from the cluster and forwards them to Elasticsearch, to externally accessible storage such as an Rsyslog server, or to both, depending on your configuration. Fluentd/Fluent-bit collects the application logs of Genesys Multicloud CX services from /var/log/containers. When you deploy cluster-wide logging, Fluentd/Fluent-bit is deployed to each node.
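To illustrate how a collector can attribute a file under /var/log/containers to a specific workload, here is a minimal sketch that parses a log file name into its parts. It assumes the common Kubernetes naming layout `<pod>_<namespace>_<container>-<container id>.log` with a 64-character hexadecimal container ID. The helper name `parse_container_log_name` and the sample pod, namespace, and container names are hypothetical.

```python
import re
from typing import NamedTuple, Optional


class ContainerLogName(NamedTuple):
    pod: str
    namespace: str
    container: str
    container_id: str


# Assumed layout: <pod>_<namespace>_<container>-<64 hex chars>.log
_LOG_NAME = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_"
    r"(?P<container>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def parse_container_log_name(filename: str) -> Optional[ContainerLogName]:
    """Split a container log file name into pod, namespace, and container.

    Returns None when the name does not follow the assumed layout.
    """
    match = _LOG_NAME.match(filename)
    if match is None:
        return None
    return ContainerLogName(**match.groupdict())


if __name__ == "__main__":
    # Hypothetical file name built for illustration only.
    sample = "example-pod-0_example-ns_app-" + "0" * 64 + ".log"
    print(parse_container_log_name(sample))
```

In practice, Fluentd and Fluent-bit ship their own Kubernetes metadata handling, so this sketch is only meant to show what information the file name carries.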

Shared RWX storage

Unstructured logs are written directly to the RWX shared storage. For services that write unstructured logs, you must mount a PVC/PV. To access the logs externally, use storage such as NFS or S3.
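As a rough sketch of the unstructured approach, the following shows a service writing plain-text logs to a directory where the PVC is assumed to be mounted. The mount path `/mnt/logs`, the function name `build_file_logger`, and the per-host file naming are assumptions made for illustration. Including the host name in the file name is one way to keep several pods that share the same RWX volume from writing to the same file.

```python
import logging
import os
import socket


def build_file_logger(name: str, log_dir: str) -> logging.Logger:
    """Return a logger that appends plain-text lines to a file in log_dir.

    log_dir is assumed to be the path where the shared RWX volume is mounted.
    """
    os.makedirs(log_dir, exist_ok=True)
    # One file per pod: in Kubernetes the host name is normally the pod name.
    path = os.path.join(log_dir, f"{name}.{socket.gethostname()}.log")

    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    # "/mnt/logs" is a hypothetical mount path for the PVC.
    log = build_file_logger("example-service", "/mnt/logs")
    log.info("writing to shared storage")
```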

Syslog server storage

Optionally, you can implement a syslog server to store the structured logs separately from the Elasticsearch log store. The syslog server writes the logs to a flat file and enables you to share them externally. Genesys recommends an Rsyslog server for this purpose; however, you can select any syslog server of your choice. For more information, refer to the deployment procedure.
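When setting up a syslog server, it can help to confirm that the endpoint accepts messages before pointing the log collector at it. The sketch below sends a test message with Python's standard `logging.handlers.SysLogHandler`, which uses UDP by default. The host, port, and logger name are placeholders, and this is only a connectivity check, not the mechanism Fluentd uses to forward logs.

```python
import logging
import logging.handlers


def build_syslog_logger(
    name: str, host: str = "localhost", port: int = 514
) -> logging.Logger:
    """Return a logger that sends records to a syslog server over UDP.

    host and port are placeholders; replace them with your server's address.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.handlers.SysLogHandler(address=(host, port))
    handler.setFormatter(
        logging.Formatter("%(name)s: %(levelname)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_syslog_logger("syslog-check")  # hypothetical logger name
    log.info("test message for the syslog server")
```

Because UDP is connectionless, a successful send does not prove delivery; check the flat file written by the syslog server to confirm that the message arrived.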

GKE logging

The GKE operations suite is backed by Google Stackdriver, which controls logging, monitoring, and alerting within Google Cloud Platform. GKE clusters are natively integrated with Cloud Logging, which is enabled by default when you create a GKE cluster.

Refer to GKE Logging for more details.