Difference between revisions of "GVP/Current/GVPPEGuide/Deploy"


(Undo revision 113401 by Karl abraham.abel@genesys.com (talk)) (Tag: Undo) |


| Line 5: | Line 5: | ||

|ComingSoon=No

|Section={{Section

|sectionHeading=Deploy in OpenShift

|alignment=Vertical

|structuredtext={{NoteFormat|Make sure to review {{Link-SomewhereInThisVersion|manual=GVPPEGuide|topic=Planning}} for the full list of prerequisites required to deploy Genesys Voice Platform.|}}

| Line 19: | Line 19: | ||

===Environment setup===

*Log in to the OpenShift cluster from the remote host using the CLI:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="ab571ebf-fcfb-4a01-8b1b-6944f77b9f78" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc login --token <token> --server <URL of the API server>

{{!}}}

*Check the cluster version:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="214674de-64a8-4a44-b515-153092ce8d29" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc get clusterversion

{{!}}}

*Create the gvp project in the OpenShift cluster:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="64d874a6-e297-4123-a6a5-af80bef85b93" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc new-project gvp

{{!}}}

*Set gvp as the default project:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="c54c5c76-b3b9-4509-bcba-c42ebf950235" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc project gvp

{{!}}}

*Bind the genesys-restricted SCC to the default service account in the gvp project:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="f527d463-7100-4bfa-bf89-f0db5364f738" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc adm policy add-scc-to-user genesys-restricted -z default -n gvp

{{!}}}

*Create a docker-registry secret so that images can be pulled from JFrog:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="3b7536c0-f7f3-4fca-917c-1ef9d8859a73" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc create secret docker-registry <credential-name> --docker-server=<docker repo> --docker-username=<username> --docker-password=<API key from jfrog> --docker-email=<emailid>

{{!}}}

*Link the secret to the default service account for image pulls:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="ac36681b-3176-4f57-9817-0b97ade1d172" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc secrets link default <credential-name> --for=pull

{{!}}}
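The environment-setup steps above can be wrapped in one shell function so that they always run in the same order. This is a minimal sketch, not a Genesys-provided script: the function name setup_gvp_project and its argument order are hypothetical, and it assumes that oc is installed and that the genesys-restricted SCC already exists on the cluster. Unlike the individual commands, it creates the gvp project only if it does not exist yet.

```bash
setup_gvp_project() {
  # Arguments (hypothetical order): API token, API server URL, pull-secret name,
  # registry host, registry username, JFrog API key, e-mail address.
  local token="$1" api_server="$2" cred_name="$3"
  local docker_server="$4" docker_user="$5" docker_key="$6" docker_email="$7"

  oc login --token "$token" --server "$api_server"
  oc get clusterversion

  # Create the gvp project only when it is missing, then make it the default.
  if ! oc get project gvp >/dev/null 2>&1; then
    oc new-project gvp
  fi
  oc project gvp

  # Allow the default service account to use the genesys-restricted SCC.
  oc adm policy add-scc-to-user genesys-restricted -z default -n gvp

  # Create the JFrog pull secret and link it to the default service account.
  oc create secret docker-registry "$cred_name" \
    --docker-server="$docker_server" \
    --docker-username="$docker_user" \
    --docker-password="$docker_key" \
    --docker-email="$docker_email" \
    -n gvp
  oc secrets link default "$cred_name" --for=pull -n gvp
}
```

Call it from a script that starts with set -e so that a failed step stops the run. As with the oc create secret command above, the API key is passed on the command line, which can leave it in shell history.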


Installation order matters with GVP. To deploy without errors, install the components in the following order:

| Line 65: | Line 85: | ||

Download the GVP Helm charts from JFrog using your credentials:

gvp-configserver: https://<jfrog artifactory/helm location>/gvp-configserver-100.0.1000017.tgz

gvp-sd: https://<jfrog artifactory/helm location>/gvp-sd-100.0.1000019.tgz

gvp-rs: https://<jfrog artifactory/helm location>/gvp-rs-100.0.1000077.tgz

gvp-rm: https://<jfrog artifactory/helm location>/gvp-rm-100.0.1000082.tgz

gvp-mcp: https://<jfrog artifactory/helm location>/gvp-mcp-100.0.1000040.tgz
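The five charts listed above can be fetched in one loop with curl. This sketch assumes that your JFrog repository accepts HTTP basic authentication with your username and API key, and that the first argument is the full https://<jfrog artifactory/helm location> prefix. The array GVP_CHART_FILES and the function download_gvp_charts are hypothetical names; the chart versions are copied from the list above and will change with new releases.

```bash
GVP_CHART_FILES=(
  gvp-configserver-100.0.1000017.tgz
  gvp-sd-100.0.1000019.tgz
  gvp-rs-100.0.1000077.tgz
  gvp-rm-100.0.1000082.tgz
  gvp-mcp-100.0.1000040.tgz
)

download_gvp_charts() {
  # $1: repository prefix including https://, $2: JFrog username, $3: API key.
  # A single trailing slash on $1 is removed before building each URL.
  local repo_url="${1%/}" user="$2" api_key="$3" chart
  for chart in "${GVP_CHART_FILES[@]}"; do
    # --fail returns an error on HTTP failures instead of saving the error page.
    if ! curl --fail --silent --show-error --location \
        --user "${user}:${api_key}" \
        --output "$chart" \
        "${repo_url}/${chart}"; then
      echo "download failed: ${chart}" >&2
      return 1
    fi
  done
}
```

Because of --fail, an HTTP error such as 401 or 404 stops the loop instead of leaving an HTML error page saved under a .tgz name.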


|Status=No

}}{{Section

| Line 112: | Line 130: | ||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="587e10f0-ca8a-449f-8e91-5334c925a9ac" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc create -f postgres-secret.yaml

{{!}}}<u>configserver-secret</u>

| Line 132: | Line 150: | ||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="33201d74-71dc-47ca-a0da-26714a75796f" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc create -f configserver-secret.yaml

{{!}}}
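Before installing the chart, it can help to confirm that both secrets exist in the gvp project. This is a minimal sketch with a hypothetical function name (check_gvp_secrets); it only checks that each named secret exists, not that it contains the expected keys.

```bash
check_gvp_secrets() {
  # $1: namespace; remaining arguments: names of secrets that must exist.
  local namespace="$1" name missing=0
  shift
  for name in "$@"; do
    if ! oc get secret "$name" -n "$namespace" >/dev/null 2>&1; then
      echo "missing secret: ${name}" >&2
      missing=1
    fi
  done
  # Non-zero when at least one secret was not found.
  return "$missing"
}
```

For example, check_gvp_secrets gvp postgres-secret configserver-secret prints each missing secret and returns a non-zero status if any is missing.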

===Install Helm chart===

Download the required Helm chart release from the JFrog repository and install it. For the download locations, refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Deploy|anchor=HelmchaURLs|display text=Helm Chart URLs}}.

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="be4eab56-9055-4cbc-a7d4-8da3284f51dd" data-macro-parameters="language=bash{{!}}theme=Emacs{{!}}title=Install Helm Chart" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

helm install gvp-configserver ./<gvp-configserver-helm-artifact> -f gvp-configserver-values.yaml

{{!}}}At a minimum, update the following values in your values.yaml:

*<critical-priority-class> - Set to a priority class that exists on the cluster (or create one)

*<docker-repo> - Set to your Docker repository that contains the Private Edition artifacts

*<credential-name> - Set to the name of your pull secret
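The three placeholders can also be replaced with sed instead of by hand. This is a sketch with a hypothetical function name (fill_values_placeholders); it assumes the values file contains the placeholders exactly as written above, and that your replacement values contain no #, & or backslash, because sed would interpret those characters.

```bash
fill_values_placeholders() {
  # $1: values file, $2: priority class, $3: Docker repo, $4: pull-secret name.
  # '#' is used as the sed delimiter because registry paths contain '/'.
  local file="$1" tmp
  tmp="$(mktemp)"
  sed -e "s#<critical-priority-class>#$2#g" \
      -e "s#<docker-repo>#$3#g" \
      -e "s#<credential-name>#$4#g" \
      "$file" > "$tmp"
  # Copy back instead of moving, so the values file keeps its permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, fill_values_placeholders gvp-configserver-values.yaml my-priority-class my-registry.example.com my-pull-secret (all three values are examples). The same function can be run against gvp-sd-values.yaml, which uses the same placeholders.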

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="3588a7f4-5ad5-458e-be58-248972275c41" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-configserver-values.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

<nowiki>#</nowiki> Default values for gvp-configserver.

<nowiki>#</nowiki> This is a YAML-formatted file.

<nowiki>#</nowiki> Declare variables to be passed into your templates.

<nowiki>##</nowiki> Global Parameters

<nowiki>##</nowiki> Add labels to all the deployed resources

<nowiki>##</nowiki>

podLabels: {}

<nowiki>##</nowiki> Add annotations to all the deployed resources

<nowiki>##</nowiki>

podAnnotations: {}

serviceAccount:

<nowiki> </nowiki> # Specifies whether a service account should be created

<nowiki> </nowiki> create: false

<nowiki> </nowiki> # Annotations to add to the service account

<nowiki> </nowiki> annotations: {}

<nowiki> </nowiki> # The name of the service account to use.

<nowiki> </nowiki> # If not set and create is true, a name is generated using the fullname template

<nowiki> </nowiki> name:

<nowiki>##</nowiki> Deployment Configuration

<nowiki>##</nowiki> replicaCount should be 1 for Config Server

replicaCount: 1

<nowiki>##</nowiki> Base Labels. Please do not change these.

serviceName: gvp-configserver

component: shared

<nowiki>#</nowiki> Namespace

partOf: gvp

<nowiki>##</nowiki> Container image repo settings.

image:

<nowiki> </nowiki> confserv:

<nowiki> </nowiki>   registry: <docker-repo>

<nowiki> </nowiki>   repository: gvp/gvp_confserv

<nowiki> </nowiki>   pullPolicy: IfNotPresent

<nowiki> </nowiki>   tag: "<nowiki>{{ .Chart.AppVersion }}</nowiki>"

<nowiki> </nowiki> serviceHandler:

<nowiki> </nowiki>   registry: <docker-repo>

<nowiki> </nowiki>   repository: gvp/gvp_configserver_servicehandler

<nowiki> </nowiki>   pullPolicy: IfNotPresent

<nowiki> </nowiki>   tag: "<nowiki>{{ .Chart.AppVersion }}</nowiki>"

<nowiki> </nowiki> dbInit:

<nowiki> </nowiki>   registry: <docker-repo>

<nowiki> </nowiki>   repository: gvp/gvp_configserver_configserverinit

<nowiki> </nowiki>   pullPolicy: IfNotPresent

<nowiki> </nowiki>   tag: "<nowiki>{{ .Chart.AppVersion }}</nowiki>"

<nowiki>##</nowiki> Config Server App Configuration

configserver:

<nowiki> </nowiki> ## Settings for liveness and readiness probes

<nowiki> </nowiki> ## !!! THESE VALUES SHOULD NOT BE CHANGED UNLESS INSTRUCTED BY GENESYS !!!

<nowiki> </nowiki> livenessValues:

<nowiki> </nowiki>   path: /cs/liveness

<nowiki> </nowiki>   initialDelaySeconds: 30

<nowiki> </nowiki>   periodSeconds: 60

<nowiki> </nowiki>   timeoutSeconds: 20

<nowiki> </nowiki>   failureThreshold: 3

<nowiki> </nowiki>   healthCheckAPIPort: 8300

<nowiki> </nowiki>

<nowiki> </nowiki> readinessValues:

<nowiki> </nowiki>   path: /cs/readiness

<nowiki> </nowiki>   initialDelaySeconds: 30

<nowiki> </nowiki>   periodSeconds: 30

<nowiki> </nowiki>   timeoutSeconds: 20

<nowiki> </nowiki>   failureThreshold: 3

<nowiki> </nowiki>   healthCheckAPIPort: 8300

<nowiki> </nowiki> alerts:

<nowiki> </nowiki>   cpuUtilizationAlertLimit: 70

<nowiki> </nowiki>   memUtilizationAlertLimit: 90

<nowiki> </nowiki>   workingMemAlertLimit: 7

<nowiki> </nowiki>   maxRestarts: 2

<nowiki>##</nowiki> PVCs defined

<nowiki>#</nowiki> none

<nowiki>##</nowiki> Define service(s) for application

service:

<nowiki> </nowiki> type: ClusterIP

<nowiki> </nowiki> host: gvp-configserver-0

<nowiki> </nowiki> port: 8888

<nowiki> </nowiki> targetPort: 8888

<nowiki>##</nowiki> Service Handler configuration.

serviceHandler:

<nowiki> </nowiki> port: 8300

<nowiki>##</nowiki> Secrets storage related settings - k8s secrets only

secrets:

<nowiki> </nowiki> # Used for pulling images/containers from the repositories.

<nowiki> </nowiki> imagePull:

<nowiki> </nowiki>   - name: <credential-name>

<nowiki> </nowiki>

<nowiki> </nowiki> # Config Server secrets. If k8s is false, csi will be used, else k8s will be used.

<nowiki> </nowiki> # Currently, only k8s is supported!

<nowiki> </nowiki> configServer:

<nowiki> </nowiki>   secretName: configserver-secret

<nowiki> </nowiki>   secretUserKey: username

<nowiki> </nowiki>   secretPwdKey: password

<nowiki> </nowiki>   #csiSecretProviderClass: keyvault-gvp-gvp-configserver-secret

<nowiki> </nowiki> # Config Server Postgres DB secrets and settings.

<nowiki> </nowiki> postgres:

<nowiki> </nowiki>   dbName: gvp

<nowiki> </nowiki>   dbPort: 5432

<nowiki> </nowiki>   secretName: postgres-secret

<nowiki> </nowiki>   secretAdminUserKey: db-username

<nowiki> </nowiki>   secretAdminPwdKey: db-password

<nowiki> </nowiki>   secretHostnameKey: db-hostname

<nowiki> </nowiki>   secretDbNameKey: db-name

<nowiki> </nowiki>   #secretServerNameKey: server-name

<nowiki>##</nowiki> Ingress configuration

ingress:

<nowiki> </nowiki> enabled: false

<nowiki> </nowiki> annotations: {}

<nowiki> </nowiki>   # kubernetes.io/ingress.class: nginx

<nowiki> </nowiki>   # kubernetes.io/tls-acme: "true"

<nowiki> </nowiki> hosts:

<nowiki> </nowiki>   - host: chart-example.local

<nowiki> </nowiki>     paths: []

<nowiki> </nowiki> tls: []

<nowiki> </nowiki> #  - secretName: chart-example-tls

<nowiki> </nowiki> #    hosts:

<nowiki> </nowiki> #      - chart-example.local

<nowiki>##</nowiki> App resource requests and limits

<nowiki>##</nowiki> ref: <nowiki>http://kubernetes.io/docs/user-guide/compute-resources/</nowiki>

<nowiki>##</nowiki>

resources:

<nowiki> </nowiki> requests:

<nowiki> </nowiki>   memory: "512Mi"

<nowiki> </nowiki>   cpu: "500m"

<nowiki> </nowiki> limits:

<nowiki> </nowiki>   memory: "1Gi"

<nowiki> </nowiki>   cpu: "1"

<nowiki>##</nowiki> App containers' Security Context

<nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-container</nowiki>

<nowiki>##</nowiki>

<nowiki>##</nowiki> Containers should run as genesys user and cannot use elevated permissions

<nowiki>##</nowiki>

securityContext:

<nowiki> </nowiki> runAsUser: 500

<nowiki> </nowiki> runAsGroup: 500

<nowiki> </nowiki> # capabilities:

<nowiki> </nowiki> #   drop:

<nowiki> </nowiki> #   - ALL

<nowiki> </nowiki> # readOnlyRootFilesystem: true

<nowiki> </nowiki> # runAsNonRoot: true

<nowiki> </nowiki> # runAsUser: 1000

podSecurityContext: {}

<nowiki> </nowiki> # fsGroup: 2000

<nowiki>##</nowiki> Priority Class

<nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/</nowiki>

<nowiki>##</nowiki> NOTE: this is an optional parameter

<nowiki>##</nowiki>

priorityClassName: <critical-priority-class>

<nowiki>##</nowiki> Affinity for assignment.

<nowiki>##</nowiki> Ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity</nowiki>

<nowiki>##</nowiki>

affinity: {}

<nowiki>##</nowiki> Node labels for assignment.

<nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/user-guide/node-selection/</nowiki>

<nowiki>##</nowiki>

nodeSelector: {}

<nowiki>##</nowiki> Tolerations for assignment.

<nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/</nowiki>

<nowiki>##</nowiki>

tolerations: []

<nowiki>##</nowiki> Service/Pod Monitoring Settings

<nowiki>##</nowiki> Whether to create Prometheus alert rules or not.

prometheusRule:

<nowiki> </nowiki> create: true

<nowiki>##</nowiki> Grafana dashboard Settings

<nowiki>##</nowiki> Whether to create Grafana dashboard or not.

grafana:

<nowiki> </nowiki> enabled: true

<nowiki>##</nowiki> Enable network policies or not

networkPolicies:

<nowiki> </nowiki> enabled: false

<nowiki>##</nowiki> DNS configuration options

dnsConfig:

<nowiki> </nowiki> options:

<nowiki> </nowiki>   - name: ndots

<nowiki> </nowiki>     value: "3"

{{!}}}


===Verify the deployed resources===

Verify the deployed resources from the OpenShift console or CLI.
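From the CLI, a quick check is to list the resources in the project and then wait until its pods report Ready. This is a minimal sketch: verify_gvp_resources is a hypothetical name and the 300-second default timeout is an arbitrary example. Because it waits on every pod in the project, pods that run to completion (for example, pods created by Jobs) never become Ready and would cause a timeout; in that case, select the pods by label instead.

```bash
verify_gvp_resources() {
  # $1: namespace (default gvp), $2: wait timeout (default 300s, an example value).
  local namespace="${1:-gvp}" timeout="${2:-300s}"
  oc get all -n "$namespace"
  # Returns non-zero if any pod is not Ready before the timeout expires.
  oc wait --for=condition=Ready pod --all -n "$namespace" --timeout="$timeout"
}
```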

| Line 361: | Line 376: | ||

<u>shared-consul-consul-gvp-token</u>

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="7f8edbd9-46af-4fd5-8f1d-0a94107150b2" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=shared-consul-consul-gvp-token-secret.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

| Line 376: | Line 389: | ||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="d626b74c-4177-4c6a-80eb-5b15c2cb4b49" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc create -f shared-consul-consul-gvp-token-secret.yaml

{{!}}}

===ConfigMap creation===

A '''tenant-inventory''' ConfigMap is required to deploy GVP Service Discovery.

{{{!}} class="wikitable" data-macro-name="warning" data-macro-id="8245f116-1e0d-4b93-b0b7-64f56059d079" data-macro-parameters="title=Caveat" data-macro-schema-version="1" data-macro-body-type="RICH_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}If the tenant has not been deployed yet, you will not have the information needed to populate the ConfigMap. You can create an empty ConfigMap using:

oc create configmap tenant-inventory -n gvp

{{!}}}

====Provisioning a new tenant====

Create a file (t100.json in the example) that contains at least the '''name''', '''id''', '''gws-ccid''', and '''default-application''' values from your tenant deployment. Set '''default-application''' to '''IVRAppDefault'''.

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="7e030700-72f2-4cba-8684-7277d30ade46" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=t100.json" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

{

"name": "t100",

"id": "80dd",

"gws-ccid": "9350e2fc-a1dd-4c65-8d40-1f75a2e080dd",

"default-application": "IVRAppDefault"

}

{{!}}}Execute the following command to create the ConfigMap on the cluster:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="e1e8cef4-3c8c-42b1-86f8-44810ba0779e" data-macro-parameters="language=bash{{!}}theme=Emacs{{!}}title=Add Config Map" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc create configmap tenant-inventory --from-file t100.json -n gvp

{{!}}}
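Writing the tenant file and creating the ConfigMap can be combined into one step. This is a minimal sketch with a hypothetical function name (provision_tenant); it writes only the four minimum fields and assumes that the values contain no double quotes, because they are inserted into the JSON without escaping. If an empty tenant-inventory ConfigMap was created earlier, oc create fails because the ConfigMap already exists; delete it first, as described under Updating a tenant.

```bash
provision_tenant() {
  # $1: tenant file (for example t100.json), $2: name, $3: id, $4: gws-ccid.
  local file="$1"
  # default-application is fixed to IVRAppDefault, as required above.
  cat > "$file" <<EOF
{
  "name": "$2",
  "id": "$3",
  "gws-ccid": "$4",
  "default-application": "IVRAppDefault"
}
EOF
  oc create configmap tenant-inventory --from-file "$file" -n gvp
}
```

For example, provision_tenant t100.json t100 80dd 9350e2fc-a1dd-4c65-8d40-1f75a2e080dd writes a file with the same content as the example above and creates the ConfigMap from it.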

====Updating a tenant====

Delete the '''tenant-inventory''' ConfigMap using:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="44060763-018b-4d8f-8298-be5b15468412" data-macro-parameters="language=bash{{!}}theme=Emacs{{!}}title=Delete Config Map" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc delete configmap tenant-inventory -n gvp --ignore-not-found

{{!}}}Update the '''t100.json''' file and execute the following command to create the new ConfigMap:

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="a21129e4-fae7-44e1-8af3-30c5a04dff34" data-macro-parameters="language=bash{{!}}theme=Emacs{{!}}title=Add Config Map" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

oc create configmap tenant-inventory --from-file t100.json -n gvp

{{!}}}GVP Service Discovery checks the ConfigMap for changes every 60 seconds.
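As an alternative to deleting and re-creating the ConfigMap, it can be rendered client-side and applied in one step, which avoids a short period during which tenant-inventory does not exist. This sketch assumes an oc release that supports --dry-run=client (older releases accept only a plain --dry-run flag); update_tenant_inventory is a hypothetical name.

```bash
update_tenant_inventory() {
  # $1: tenant file (for example t100.json), $2: namespace (default gvp).
  local file="$1" namespace="${2:-gvp}" manifest rc=1
  manifest="$(mktemp)"
  # Render the ConfigMap manifest locally; nothing is sent to the cluster yet.
  if oc create configmap tenant-inventory --from-file "$file" -n "$namespace" \
      --dry-run=client -o yaml > "$manifest"; then
    # Create the ConfigMap, or update it in place if it already exists.
    oc apply -n "$namespace" -f "$manifest"
    rc=$?
  fi
  rm -f "$manifest"
  return "$rc"
}
```

For example, update_tenant_inventory t100.json gvp after editing t100.json.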

====Provisioning process details====

For additional details on the provisioning process, refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Provision}}.

===Install Helm chart===

Download the required Helm chart release from the JFrog repository and install it. For the download locations, refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Deploy|anchor=HelmchaURLs|display text=Helm Chart URLs}}.

| Line 410: | Line 429: | ||

{{!}} class="wysiwyg-macro-body"{{!}}

helm install gvp-sd ./<gvp-sd-helm-artifact> -f gvp-sd-values.yaml

{{!}}}At a minimum, update the following values in your values.yaml:

*<critical-priority-class> - Set to a priority class that exists on the cluster (or create one)

*<docker-repo> - Set to your Docker repository that contains the Private Edition artifacts

*<credential-name> - Set to the name of your pull secret

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="8d8c2e7b-040d-42e3-96c5-e8f07620a22e" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-sd-values.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT"

{{!}} class="wysiwyg-macro-body"{{!}}

<nowiki>#</nowiki> Default values for gvp-sd.

<nowiki>#</nowiki> This is a YAML-formatted file.

<nowiki>#</nowiki> Declare variables to be passed into your templates.

<nowiki>##</nowiki> Global Parameters

<nowiki>##</nowiki> Add labels to all the deployed resources

<nowiki>##</nowiki>

podLabels: {}

<nowiki>##</nowiki> Add annotations to all the deployed resources

<nowiki>##</nowiki>

podAnnotations: {}

serviceAccount:

<nowiki> </nowiki> # Specifies whether a service account should be created

<nowiki> </nowiki> create: false

<nowiki> </nowiki> # Annotations to add to the service account

<nowiki> </nowiki> annotations: {}

<nowiki> </nowiki> # The name of the service account to use.

<nowiki> </nowiki> # If not set and create is true, a name is generated using the fullname template

<nowiki> </nowiki> name:

<nowiki>##</nowiki> Deployment Configuration

replicaCount: 1

smtp: allowed

<nowiki>##</nowiki> Name overrides

nameOverride: ""

fullnameOverride: ""

<nowiki>##</nowiki> Base Labels. Please do not change these.

component: shared

partOf: gvp

image:

<nowiki> </nowiki> registry: <docker-repo>

<nowiki> </nowiki> repository: gvp/gvp_sd

<nowiki> </nowiki> tag: "<nowiki>{{ .Chart.AppVersion }}</nowiki>"

<nowiki> </nowiki> pullPolicy: IfNotPresent

<nowiki>##</nowiki> PVCs defined

<nowiki>#</nowiki> none

<nowiki>##</nowiki> Define service for application.

service:

<nowiki> </nowiki> name: gvp-sd

<nowiki> </nowiki> type: ClusterIP

<nowiki> </nowiki> port: 8080

<nowiki>##</nowiki> Application configuration parameters.

env:

<nowiki> </nowiki> MCP_SVC_NAME: "gvp-mcp"

<nowiki> </nowiki> EXTERNAL_CONSUL_SERVER: ""

<nowiki> </nowiki> CONSUL_PORT: "8501"

<nowiki> </nowiki> CONFIG_SERVER_HOST: "gvp-configserver"

<nowiki> </nowiki> CONFIG_SERVER_PORT: "8888"

<nowiki> </nowiki> CONFIG_SERVER_APP: "default"

<nowiki> </nowiki> HTTP_SERVER_PORT: "8080"

<nowiki> </nowiki> METRICS_EXPORTER_PORT: "9090"

<nowiki> </nowiki> DEF_MCP_FOLDER: "MCP_Configuration_Unit\\MCP_LRG"

<nowiki> </nowiki> TEST_MCP_FOLDER: "MCP_Configuration_Unit_Test\\MCP_LRG"

<nowiki> </nowiki> SYNC_INIT_DELAY: "10000"

<nowiki> </nowiki> SYNC_PERIOD: "60000"

<nowiki> </nowiki> MCP_PURGE_PERIOD_MINS: "0"

<nowiki> </nowiki> EMAIL_METERING_FACTOR: "10"

<nowiki> </nowiki> RECORDINGS_CONTAINER: "ccerp-recordings"

<nowiki> </nowiki> TENANT_KV_FOLDER: "tenants"

<nowiki> </nowiki> TENANT_CONFIGMAP_FOLDER: "/etc/config"

<nowiki> </nowiki> SMTP_SERVER: "smtp-relay.smtp.svc.cluster.local"

<nowiki>##</nowiki> Secrets storage related settings

secrets:

<nowiki> </nowiki> # Used for pulling images/containers from the repositories.

<nowiki> </nowiki> imagePull:

<nowiki> </nowiki>   - name: <credential-name>

<nowiki> </nowiki>

<nowiki> </nowiki> # If k8s is true, k8s will be used, else vault secret will be used.

<nowiki> </nowiki> configServer:

<nowiki> </nowiki>   k8s: true

<nowiki> </nowiki>   k8sSecretName: configserver-secret

<nowiki> </nowiki>   k8sUserKey: username

<nowiki> </nowiki>   k8sPasswordKey: password

<nowiki> </nowiki>   vaultSecretName: "/configserver-secret"

<nowiki> </nowiki>   vaultUserKey: "configserver-username"

<nowiki> </nowiki>   vaultPasswordKey: "configserver-password"

<nowiki> </nowiki> # If k8s is true, k8s will be used, else vault secret will be used.

<nowiki> </nowiki> consul:

<nowiki> </nowiki>   k8s: true

<nowiki> </nowiki>   k8sTokenName: "shared-consul-consul-gvp-token"

<nowiki> </nowiki>   k8sTokenKey: "consul-consul-gvp-token"

<nowiki> </nowiki>   vaultSecretName: "/consul-secret"

<nowiki> </nowiki>   vaultSecretKey: "consul-consul-gvp-token"

<nowiki> </nowiki> # GTTS key and password come from a k8s secret if k8s is true. If false, they come from the tenant profile.

<nowiki> </nowiki> gtts:

<nowiki> </nowiki>   k8s: false

<nowiki> </nowiki>   k8sSecretName: gtts-secret

<nowiki> </nowiki>   EncryptedKey: encrypted-key

<nowiki> </nowiki>   PasswordKey: password

ingress:

<nowiki> </nowiki> enabled: false

<nowiki> </nowiki> annotations: {}

<nowiki> </nowiki>   # kubernetes.io/ingress.class: nginx

<nowiki> </nowiki>   # kubernetes.io/tls-acme: "true"

<nowiki> </nowiki> hosts:

<nowiki> </nowiki>   - host: chart-example.local

<nowiki> </nowiki>     paths: []

<nowiki> </nowiki> tls: []

<nowiki> </nowiki> #  - secretName: chart-example-tls

<nowiki> </nowiki> #    hosts:

<nowiki> </nowiki> #      - chart-example.local

resources:

<nowiki> </nowiki> requests:

<nowiki> </nowiki>   memory: "2Gi"

<nowiki> </nowiki>   cpu: "1000m"

<nowiki> </nowiki> limits:

<nowiki> </nowiki>   memory: "2Gi"

<nowiki> </nowiki>   cpu: "1000m"

<nowiki>##</nowiki> App containers' Security Context

<nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-container</nowiki>

| − | ## | + | <nowiki>##</nowiki> |

| − | ## Containers should run as genesys user and cannot use elevated permissions | + | <nowiki>##</nowiki> Containers should run as genesys user and cannot use elevated permissions |

| − | ## Pod level security context | + | <nowiki>##</nowiki> Pod level security context |

| − | podSecurityContext: | + | podSecurityContext: |

| − | + | <nowiki> </nowiki> fsGroup: 500 | |

| − | + | <nowiki> </nowiki> runAsUser: 500 | |

| − | + | <nowiki> </nowiki> runAsGroup: 500 | |

| − | + | <nowiki> </nowiki> runAsNonRoot: true | |

| − | ## Container security context | + | <nowiki>##</nowiki> Container security context |

| − | securityContext: | + | securityContext: |

| − | + | <nowiki> </nowiki> runAsUser: 500 | |

| − | + | <nowiki> </nowiki> runAsGroup: 500 | |

| − | + | <nowiki> </nowiki> runAsNonRoot: true | |

| − | ## Priority Class | + | <nowiki>##</nowiki> Priority Class |

| − | ## ref: https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/ | + | <nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/</nowiki> |

| − | ## NOTE: this is an optional parameter | + | <nowiki>##</nowiki> NOTE: this is an optional parameter |

| − | ## | + | <nowiki>##</nowiki> |

| − | priorityClassName: | + | priorityClassName: <critical-priority-class> |

| − | ## Affinity for assignment. | + | <nowiki>##</nowiki> Affinity for assignment. |

| − | ## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity | + | <nowiki>##</nowiki> Ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity</nowiki> |

| − | ## | + | <nowiki>##</nowiki> |

| − | affinity: {} | + | affinity: {} |

| − | ## Node labels for assignment. | + | <nowiki>##</nowiki> Node labels for assignment. |

| − | ## ref: https://kubernetes.io/docs/user-guide/node-selection/ | + | <nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/user-guide/node-selection/</nowiki> |

| − | ## | + | <nowiki>##</nowiki> |

| − | nodeSelector: {} | + | nodeSelector: {} |

| − | ## Tolerations for assignment. | + | <nowiki>##</nowiki> Tolerations for assignment. |

| − | ## ref: https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/ | + | <nowiki>##</nowiki> ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/</nowiki> |

| − | ## | + | <nowiki>##</nowiki> |

| − | tolerations: [] | + | tolerations: [] |

| − | ## Service/Pod Monitoring Settings | + | <nowiki>##</nowiki> Service/Pod Monitoring Settings |

| − | prometheus: | + | prometheus: |

| − | + | <nowiki> </nowiki> # Enable for Prometheus operator | |

| − | + | <nowiki> </nowiki> podMonitor: | |

| − | + | <nowiki> </nowiki> enabled: true | |

| − | ## Enable network policies or not | + | <nowiki>##</nowiki> Enable network policies or not |

| − | networkPolicies: | + | networkPolicies: |

| − | + | <nowiki> </nowiki> enabled: false | |

| − | ## DNS configuration options | + | <nowiki>##</nowiki> DNS configuration options |

| − | dnsConfig: | + | dnsConfig: |

| − | + | <nowiki> </nowiki> options: | |

| − | + | <nowiki> </nowiki> - name: ndots | |

| − | + | <nowiki> </nowiki> value: "3" | |

| − | + | {{!}}} | |

===Verify the deployed resources=== | ===Verify the deployed resources=== | ||

Verify the deployed resources from OpenShift console/CLI. | Verify the deployed resources from OpenShift console/CLI. | ||
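For a quick CLI check, the following minimal shell sketch filters the output of `oc get pods --no-headers`. It prints every pod whose READY column is not fully ready or whose STATUS is not `Running`, and it skips pods in `Completed` state. It assumes the default column order (NAME, READY, STATUS, ...) and the `gvp` namespace used elsewhere in this guide. The function name `not_ready_pods` is hypothetical and is not part of any Genesys tooling.

```shell
# not_ready_pods: filter `oc get pods --no-headers` output (read from stdin)
# and print the names of pods that are not fully ready.
# Assumes the default column order: NAME READY STATUS RESTARTS AGE ...
not_ready_pods() {
  awk '{
    split($2, r, "/")              # READY column, e.g. "1/2"
    # Completed pods (finished Jobs) report 0/1 and are expected to.
    if ($3 == "Completed") next
    if (r[1] != r[2] || $3 != "Running") print $1
  }'
}

# Usage against a live cluster:
#   oc get pods -n gvp --no-headers | not_ready_pods
```

An empty result means every listed pod reports all containers ready and `Running`.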

| Line 612: | Line 633: | ||

db_username: | db_username: | ||

| − | |||

| − | |||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="1630c47f-c10a-41bb-b1c5-b417d4ac23f0" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=rs-dbreader-password-secret.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="1630c47f-c10a-41bb-b1c5-b417d4ac23f0" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=rs-dbreader-password-secret.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

{{!}} class="wysiwyg-macro-body"{{!}} | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| Line 630: | Line 649: | ||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="ccb59bb5-e759-47fa-b46e-113991e08ddd" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="ccb59bb5-e759-47fa-b46e-113991e08ddd" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

{{!}} class="wysiwyg-macro-body"{{!}} | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| − | + | oc create -f rs-dbreader-password-secret.yaml | |

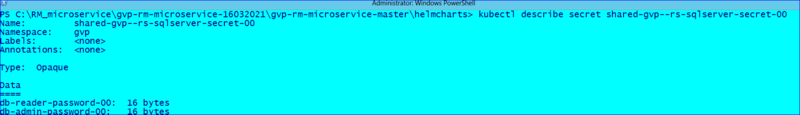

{{!}}}<u>shared-gvp-rs-sqlserver-secret</u> | {{!}}}<u>shared-gvp-rs-sqlserver-secret</u> | ||

| Line 636: | Line 655: | ||

db-reader-password: | db-reader-password: | ||

| − | |||

| − | |||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="3a80c2ed-81d5-40d5-a8b4-8e099933f371" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=shared-gvp-rs-sqlserer-secret.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="3a80c2ed-81d5-40d5-a8b4-8e099933f371" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=shared-gvp-rs-sqlserer-secret.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

{{!}} class="wysiwyg-macro-body"{{!}} | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| Line 652: | Line 669: | ||

{{{!}} class="wikitable" data-macro-name="code" data-macro-id="8e9dd016-c876-4baa-a7b0-a58b08dbd4ac" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="8e9dd016-c876-4baa-a7b0-a58b08dbd4ac" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

{{!}} class="wysiwyg-macro-body"{{!}} | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| − | + | oc create -f shared-gvp-rs-sqlserer-secret.yaml | |

{{!}}} | {{!}}} | ||
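If you prefer to generate a secret manifest rather than base64-encode values by hand, the shell sketch below prints an Opaque Secret with each value base64-encoded under `data`. The function `make_opaque_secret` is hypothetical. The usage comment assumes two things: the secret name `shared-gvp-rs-sqlserver-secret`, which is the `rsSecretName` in the RS values file, and the `db-reader-password` key shown above. Check the full manifest for any other keys your chart expects; the values file also references `db-admin-password`. The built-in `oc create secret generic <name> --from-literal=<key>=<value>` achieves the same result directly.

```shell
# make_opaque_secret NAME NAMESPACE KEY=VALUE...
# Prints an Opaque Secret manifest with each VALUE base64-encoded under data.
make_opaque_secret() {
  name=$1
  namespace=$2
  shift 2
  printf 'apiVersion: v1\nkind: Secret\nmetadata:\n  name: %s\n  namespace: %s\ntype: Opaque\ndata:\n' \
    "$name" "$namespace"
  for pair in "$@"; do
    key=${pair%%=*}      # text before the first "="
    value=${pair#*=}     # text after the first "="
    # printf avoids a trailing newline; tr drops any line wrapping added by base64
    encoded=$(printf '%s' "$value" | base64 | tr -d '\n')
    printf '  %s: %s\n' "$key" "$encoded"
  done
}

# Example (the password value is a placeholder):
#   make_opaque_secret shared-gvp-rs-sqlserver-secret gvp "db-reader-password=<password>" \
#     > shared-gvp-rs-sqlserver-secret.yaml
#   oc create -f shared-gvp-rs-sqlserver-secret.yaml
```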

===Persistent Volumes creation=== | ===Persistent Volumes creation=== | ||

| + | '''Note''': You can skip the PV creation steps if OCS is used to auto-provision the persistent volumes. | ||

| + | |||

Create the following PVs which are required for the service deployment. | Create the following PVs which are required for the service deployment. | ||

| − | + | {{{!}} class="wikitable" data-macro-name="warning" data-macro-id="8b99e091-ef72-401b-87ba-0a2fbb711686" data-macro-parameters="icon=false{{!}}title=Note Regarding Persistent Volumes" data-macro-schema-version="1" data-macro-body-type="RICH_TEXT" | |

| − | gvp-rs-0 | + | {{!}} class="wysiwyg-macro-body"{{!}}If your OpenShift deployment supports dynamic provisioning of Persistent Volumes, you can skip this step; the provisioner creates the volumes.

| − | + | {{!}}}<u>gvp-rs-0</u> | |

| − | + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="913a9e32-bc41-4a67-b0ef-f4dde848bf06" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rs-pv.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | |

| − | + | {{!}} class="wysiwyg-macro-body"{{!}} | |

| − | apiVersion: v1 | + | apiVersion: v1 |

| − | kind: PersistentVolume | + | kind: PersistentVolume |

| − | metadata: | + | metadata: |

| − | name: gvp-rs-0 | + | name: gvp-rs-0 |

| − | namespace: gvp | + | namespace: gvp |

| − | spec: | + | spec: |

| − | capacity: | + | capacity: |

| − | storage: 30Gi | + | storage: 30Gi |

| − | accessModes: | + | accessModes: |

| − | - ReadWriteOnce | + | - ReadWriteOnce |

| − | persistentVolumeReclaimPolicy: Retain | + | persistentVolumeReclaimPolicy: Retain |

| − | storageClassName: gvp | + | storageClassName: gvp |

| − | nfs: | + | nfs: |

| − | path: /export/vol1/PAT/gvp/rs-01 | + | path: /export/vol1/PAT/gvp/rs-01 |

| − | server: 192.168.30.51 | + | server: 192.168.30.51 |

| − | + | {{!}}}Execute the following command: | |

| − | + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="fda31e0a-a32f-4991-ba43-35cfd17ef2e7" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | |

| − | Execute the following command: | + | {{!}} class="wysiwyg-macro-body"{{!}} |

| − | + | oc create -f gvp-rs-pv.yaml | |

| − | + | {{!}}} | |
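A statically created PV is bound only to a claim that meets three conditions: its storage class matches, its requested size fits within the PV capacity, and its access modes are compatible. The minimal shell sketch below checks only the first two rules, with sizes given as whole Gi. `pv_matches_claim` is a hypothetical helper and is not part of the chart. Assuming that `volumes.pvc.storageClass` and `claimSize` in the RS values file set the claim's class and size, the `gvp-rs-0` PV above (class `gvp`, 30Gi) would not satisfy the sample claim (`managed-premium`, 20Gi). If you rely on these static PVs, the claim's storage class has to be `gvp`.

```shell
# pv_matches_claim PV_CLASS PV_SIZE_GI CLAIM_CLASS CLAIM_SIZE_GI
# Simplified check of two static-binding rules: the storage classes must be
# equal and the PV capacity must cover the request. Sizes are whole Gi.
# Access modes, volumeMode and label selectors are NOT checked here.
pv_matches_claim() {
  [ "$1" = "$3" ] || return 1
  [ "$2" -ge "$4" ]
}

# Example:
#   pv_matches_claim gvp 30 gvp 20 && echo "binds"
#   pv_matches_claim gvp 30 managed-premium 20 || echo "class mismatch"
```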

| − | |||

| − | |||

===Install Helm chart=== | ===Install Helm chart=== | ||

Download the required Helm chart release from the JFrog repository and install. Refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Deploy|anchor=HelmchaURLs|display text=Helm Chart URLs}}. | Download the required Helm chart release from the JFrog repository and install. Refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Deploy|anchor=HelmchaURLs|display text=Helm Chart URLs}}. | ||

| Line 688: | Line 705: | ||

{{!}} class="wysiwyg-macro-body"{{!}} | {{!}} class="wysiwyg-macro-body"{{!}} | ||

helm install gvp-rs ./<gvp-rs-helm-artifact> -f gvp-rs-values.yaml | helm install gvp-rs ./<gvp-rs-helm-artifact> -f gvp-rs-values.yaml | ||

| − | {{!}}} | + | {{!}}}At a minimum, update the following values in your values.yaml:

| + | *<docker-repo> - Set to your Docker repository that hosts the Private Edition artifacts | ||

| + | *<credential-name> - Set to the name of your image pull secret | ||

| − | + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="53ece46e-756d-4559-8c47-1480f902ecbe" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rs-values.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | |

| − | + | {{!}} class="wysiwyg-macro-body"{{!}} | |

| − | + | ## Global Parameters | |

| − | + | ## Add labels to all the deployed resources | |

| − | + | ## | |

| − | + | labels: | |

| − | + | enabled: true | |

| − | + | serviceGroup: "gvp" | |

| − | + | componentType: "shared" | |

| − | ## Global Parameters | ||

| − | ## Add labels to all the deployed resources | ||

| − | ## | ||

| − | labels: | ||

| − | |||

| − | |||

| − | |||

| − | serviceAccount: | + | serviceAccount: |

| − | + | # Specifies whether a service account should be created | |

| − | + | create: false | |

| − | + | # Annotations to add to the service account | |

| − | + | annotations: {} | |

| − | + | # The name of the service account to use. | |

| − | + | # If not set and create is true, a name is generated using the fullname template | |

| − | + | name: | |

| − | ## Primary App Configuration | + | ## Primary App Configuration |

| − | ## | + | ## |

| − | # primaryApp: | + | # primaryApp: |

| − | # type: ReplicaSet | + | # type: ReplicaSet |

| − | # Should include the defaults for replicas | + | # Should include the defaults for replicas |

| − | deployment: | + | deployment: |

| − | + | replicaCount: 1 | |

| − | + | strategy: Recreate | |

| − | + | namespace: gvp | |

| − | nameOverride: "" | + | nameOverride: "" |

| − | fullnameOverride: "" | + | fullnameOverride: "" |

| − | image: | + | image: |

| − | + | registry: <docker-repo> | |

| − | + | gvprsrepository: gvp/gvp_rs | |

| − | + | snmprepository: gvp/gvp_snmp | |

| − | + | rsinitrepository: gvp/gvp_rs_init | |

| − | + | rstag: | |

| − | + | rsinittag: | |

| − | + | snmptag: v9.0.040.07 | |

| − | + | pullPolicy: Always | |

| − | + | imagePullSecrets: | |

| − | + | - name: "<credential-name>" | |

| − | ## liveness and readiness probes | + | ## liveness and readiness probes |

| − | ## !!! THESE OPTIONS SHOULD NOT BE CHANGED UNLESS INSTRUCTED BY GENESYS !!! | + | ## !!! THESE OPTIONS SHOULD NOT BE CHANGED UNLESS INSTRUCTED BY GENESYS !!! |

| − | livenessValues: | + | livenessValues: |

| − | + | path: /ems-rs/components | |

| − | + | initialDelaySeconds: 30 | |

| − | + | periodSeconds: 120 | |

| − | + | timeoutSeconds: 3 | |

| − | + | failureThreshold: 3 | |

| − | readinessValues: | + | readinessValues: |

| − | + | path: /ems-rs/components | |

| − | + | initialDelaySeconds: 10 | |

| − | + | periodSeconds: 60 | |

| − | + | timeoutSeconds: 3 | |

| − | + | failureThreshold: 3 | |

| − | ## PVCs defined | + | ## PVCs defined |

| − | volumes: | + | volumes: |

| − | + | pvc: | |

| − | + | storageClass: managed-premium | |

| − | + | claimSize: 20Gi | |

| − | + | activemqAndLocalConfigPath: "/billing/gvp-rs" | |

| − | ## Define service(s) for application. Fields may need to be modified based on `type` | + | ## Define service(s) for application. Fields may need to be modified based on `type` |

| − | service: | + | service: |

| − | + | type: ClusterIP | |

| − | + | restapiport: 8080 | |

| − | + | activemqport: 61616 | |

| − | + | envinjectport: 443 | |

| − | + | dnsport: 53 | |

| − | + | configserverport: 8888 | |

| − | + | snmpport: 1705 | |

| − | ## ConfigMaps with Configuration | + | ## ConfigMaps with Configuration |

| − | ## Use Config Map for creating environment variables | + | ## Use Config Map for creating environment variables |

| − | context: | + | context: |

| − | + | env: | |

| − | + | CFGAPP: default | |

| − | + | GVP_RS_SERVICE_HOSTNAME: gvp-rs.gvp.svc.cluster.local | |

| − | + | #CFGPASSWORD: password | |

| − | + | #CFGUSER: default | |

| − | + | CFG_HOST: gvp-configserver.gvp.svc.cluster.local | |

| − | + | CFG_PORT: '8888' | |

| − | + | CMDLINE: ./rs_startup.sh | |

| − | + | DBNAME: gvp_rs | |

| − | + | #DBPASS: 'jbIKfoS6LpfgaU$E' | |

| − | + | DBUSER: openshiftadmin | |

| − | + | rsDbSharedUsername: openshiftadmin | |

| − | + | DBPORT: 1433 | |

| − | + | ENVTYPE: "" | |

| − | + | GenesysIURegion: "" | |

| − | + | localconfigcachepath: /billing/gvp-rs/data/cache | |

| − | + | HOSTFOLDER: Hosts | |

| − | + | HOSTOS: CFGRedHatLinux | |

| − | + | LCAPORT: '4999' | |

| − | + | MSSQLHOST: mssqlserveropenshift.database.windows.net | |

| − | + | RSAPP: azure_rs | |

| − | + | RSJVM_INITIALHEAPSIZE: 500m | |

| − | + | RSJVM_MAXHEAPSIZE: 1536m | |

| − | + | RSFOLDER: Applications | |

| − | + | RS_VERSION: 9.0.032.22 | |

| − | + | STDOUT: 'true' | |

| − | + | WRKDIR: /usr/local/genesys/rs/ | |

| − | + | SNMPAPP: azure_rs_snmp | |

| − | + | SNMP_WORKDIR: /usr/sbin | |

| − | + | SNMP_CMDLINE: snmpd | |

| − | + | SNMPFOLDER: Applications | |

| − | + | RSCONFIG: | |

| − | + | messaging: | |

| − | + | activemq.memoryUsageLimit: "256 mb" | |

| − | + | activemq.dataDirectory: "/billing/gvp-rs/data/activemq" | |

| − | + | log: | |

| − | + | verbose: "trace" | |

| − | + | trace: "stdout" | |

| − | + | dbmp: | |

| − | + | rs.db.retention.operations.daily.default: "40" | |

| − | + | rs.db.retention.operations.monthly.default: "40" | |

| − | + | rs.db.retention.operations.weekly.default: "40" | |

| − | + | rs.db.retention.var.daily.default: "40" | |

| − | + | rs.db.retention.var.monthly.default: "40" | |

| − | + | rs.db.retention.var.weekly.default: "40" | |

| − | + | rs.db.retention.cdr.default: "40" | |

| − | # Default secrets storage to k8s secrets with csi able to be optional | + | # Default secrets storage to k8s secrets with csi able to be optional |

| − | secret: | + | secret: |

| − | + | # keyVaultSecret is a flag to switch between secret types (k8s or CSI). If keyVaultSecret is set to false, the k8s secret is used |

| − | + | keyVaultSecret: false | |

| − | + | #RS SQL server secret | |

| − | + | rsSecretName: shared-gvp-rs-sqlserver-secret | |

| − | + | # secretProviderClassName is not used when keyVaultSecret is set to false |

| − | + | secretProviderClassName: keyvault-gvp-rs-sqlserver-secret-00 | |

| − | + | dbreadersecretFileName: db-reader-password | |

| − | + | dbadminsecretFileName: db-admin-password | |

| − | + | #Configserver secret | |

| − | + | # If keyVaultSecret is set to false, the parameters below are not used. |

| − | + | configserverProviderClassName: gvp-configserver-secret | |

| − | + | cfgSecretFileNameForCfgUsername: configserver-username | |

| − | + | cfgSecretFileNameForCfgPassword: configserver-password | |

| − | + | # If keyVaultSecret is set to true, the parameters below are not used. |

| − | + | cfgServerSecretName: configserver-secret | |

| − | + | cfgSecretKeyNameForCfgUsername: username | |

| − | + | cfgSecretKeyNameForCfgPassword: password | |

| − | ## Ingress configuration | + | ## Ingress configuration |

| − | ingress: | + | ingress: |

| − | + | enabled: false | |

| − | + | annotations: {} | |

| − | + | # kubernetes.io/ingress.class: nginx | |

| − | + | # kubernetes.io/tls-acme: "true" | |

| − | + | hosts: | |

| − | + | - host: chart-example.local | |

| − | + | paths: [] | |

| − | + | tls: [] | |

| − | + | # - secretName: chart-example-tls | |

| − | + | # hosts: | |

| − | + | # - chart-example.local | |

| − | networkPolicies: | + | networkPolicies: |

| − | + | enabled: false | |

| − | ## primaryApp resource requests and limits | + | ## primaryApp resource requests and limits |

| − | ## ref: http://kubernetes.io/docs/user-guide/compute-resources/ | + | ## ref: <nowiki>http://kubernetes.io/docs/user-guide/compute-resources/</nowiki> |

| − | ## | + | ## |

| − | resourceForRS: | + | resourceForRS: |

| − | + | # We usually recommend not to specify default resources and to leave this as a conscious | |

| − | + | # choice for the user. This also increases chances charts run on environments with little | |

| − | + | # resources, such as Minikube. If you do want to specify resources, uncomment the following | |

| − | + | # lines, adjust them as necessary, and remove the curly braces after 'resources:'. | |

| − | + | requests: | |

| − | + | memory: "500Mi" | |

| − | + | cpu: "200m" | |

| − | + | limits: | |

| − | + | memory: "1Gi" | |

| − | + | cpu: "300m" | |

| − | resoueceForSnmp: | + | resoueceForSnmp: |

| − | + | requests: | |

| − | + | memory: "500Mi" | |

| − | + | cpu: "100m" | |

| − | + | limits: | |

| − | + | memory: "1Gi" | |

| − | + | cpu: "150m" | |

| − | ## primaryApp containers' Security Context | + | ## primaryApp containers' Security Context |

| − | ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-container | + | ## ref: <nowiki>https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-container</nowiki> |

| − | ## | + | ## |

| − | ## Containers should run as genesys user and cannot use elevated permissions | + | ## Containers should run as genesys user and cannot use elevated permissions |

| − | securityContext: | + | securityContext: |

| − | + | runAsNonRoot: true | |

| − | + | runAsUser: 500 | |

| − | + | runAsGroup: 500 | |

| − | podSecurityContext: | + | podSecurityContext: |

| − | + | fsGroup: 500 | |

| − | ## Priority Class | + | ## Priority Class |

| − | ## ref: https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/ | + | ## ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/</nowiki> |

| − | ## | + | ## |

| − | priorityClassName: "" | + | priorityClassName: "" |

| − | ## Affinity for assignment. | + | ## Affinity for assignment. |

| − | ## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity | + | ## Ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity</nowiki> |

| − | ## | + | ## |

| − | affinity: | + | affinity: |

| − | ## Node labels for assignment. | + | ## Node labels for assignment. |

| − | ## ref: https://kubernetes.io/docs/user-guide/node-selection/ | + | ## ref: <nowiki>https://kubernetes.io/docs/user-guide/node-selection/</nowiki> |

| − | ## | + | ## |

| − | nodeSelector: | + | nodeSelector: |

| − | ## Tolerations for assignment. | + | ## Tolerations for assignment. |

| − | ## ref: https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/ | + | ## ref: <nowiki>https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/</nowiki> |

| − | ## | + | ## |

| − | tolerations: [] | + | tolerations: [] |

| − | ## Extra labels | + | ## Extra labels |

| − | ## ref: https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/ | + | ## ref: <nowiki>https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/</nowiki> |

| − | ## | + | ## |

| − | # labels: {} | + | # labels: {} |

| − | ## Extra Annotations | + | ## Extra Annotations |

| − | ## ref: https://kubernetes.io/docs/concepts/overview/working-with-objects/annotations/ | + | ## ref: <nowiki>https://kubernetes.io/docs/concepts/overview/working-with-objects/annotations/</nowiki> |

| − | ## | + | ## |

| − | # annotations: {} | + | # annotations: {} |

| − | ## Service/Pod Monitoring Settings | + | ## Service/Pod Monitoring Settings |

| − | + | prometheus: | |

| − | + | enabled: true | |

| − | prometheusRulesEnabled: true | + | metric: |

| − | + | port: 9116 | |

| + | |||

| + | # Enable for Prometheus operator | ||

| + | podMonitor: | ||

| + | enabled: true | ||

| + | metric: | ||

| + | path: /snmp | ||

| + | module: [ if_mib ] | ||

| + | target: [ 127.0.0.1:1161 ] | ||

| + | |||

| + | monitoring: | ||

| + | prometheusRulesEnabled: true | ||

| + | grafanaEnabled: true | ||

| + | |||

| + | monitor: | ||

| + | monitorName: gvp-monitoring | ||

| − | + | ##DNS Settings | |

| − | + | dnsConfig: | |

| − | + | options: | |

| − | + | - name: ndots | |

| − | + | value: "3" | |

| − | + | {{!}}} | |
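To fill in the two placeholders without editing by hand, you can use the minimal `sed` sketch below. It keeps a `.bak` copy of the original. It also writes through that copy instead of using `sed -i`, because the `-i` syntax differs between GNU and BSD sed. The function name and the example registry and secret names are hypothetical. Replacement values containing `|` or `&` would need escaping.

```shell
# fill_values_placeholders FILE DOCKER_REPO CREDENTIAL_NAME
# Replaces the <docker-repo> and <credential-name> placeholders in a
# values file, keeping a .bak copy of the original.
fill_values_placeholders() {
  file=$1
  repo=$2
  cred=$3
  cp "$file" "$file.bak"
  # "|" is used as the sed delimiter because registry names contain "/".
  sed -e "s|<docker-repo>|$repo|g" -e "s|<credential-name>|$cred|g" \
    "$file.bak" > "$file"
}

# Example (hypothetical registry and pull secret names):
#   fill_values_placeholders gvp-rs-values.yaml registry.example.com/gvp my-pull-secret
#   helm install gvp-rs ./<gvp-rs-helm-artifact> -f gvp-rs-values.yaml
```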

| − | ##DNS Settings | ||

| − | dnsConfig: | ||

| − | |||

| − | |||

| − | |||

| − | |||

===Verify the deployed resources=== | ===Verify the deployed resources=== | ||

Verify the deployed resources from OpenShift console/CLI. | Verify the deployed resources from OpenShift console/CLI. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ====Deployment validation - Success==== | |

| − | gvp- | + | 1. Log in to the console and check that the gvp-rs pod is ready and running: "oc get pods -o wide".[[File:RS_Deploy_success_1.png|none|800px|RS_Deploy_success_1|link=https://all.docs.genesys.com/File:RS_Deploy_success_1.png]] 2. Describe the pod and check that both the liveness and readiness probes are passing: "oc describe pod <gvp-rs-pod-name>"

| − | + | 3. Check that the RS applications and DB details are properly configured in GVP Configuration Server. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | 4. Check that the secrets are created in the Kubernetes cluster.[[File:RS_Deploy_success_2.png|none|800px|RS_Deploy_success_2|link=https://all.docs.genesys.com/File:RS_Deploy_success_2.png]] 5. Check that the DB creation and configuration succeeded. |
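For the secrets check, a small shell sketch can compare the secrets in the namespace against the names the RS chart is configured to read. In the RS values file these are `configserver-secret` and `shared-gvp-rs-sqlserver-secret`. The sketch reads `oc get secrets -o name` output, where each line has the form `secret/<name>`, and prints any required name that is missing. The helper `missing_secrets` is hypothetical.

```shell
# missing_secrets NAME...
# Reads `oc get secrets -o name` output (lines like "secret/configserver-secret")
# from stdin and prints each required NAME that is not present.
missing_secrets() {
  present=$(sed 's|^secret/||')
  for required in "$@"; do
    printf '%s\n' "$present" | grep -qx -- "$required" || printf '%s\n' "$required"
  done
}

# Usage against a live cluster:
#   oc get secrets -n gvp -o name | missing_secrets configserver-secret shared-gvp-rs-sqlserver-secret
```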

| − | + | ====Deployment validation - Failure==== | |

| − | + | To debug a deployment failure, follow these steps: |

| − | |||

| − | gvp- | + | 1. Log in to the console and check whether the gvp-rs pod is ready and running: "oc get pods -o wide".

| − | + | 2. If the RS container restarts continuously, check the liveness and readiness probe status. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | 3. Describe the RS pod to check the liveness and readiness probe status: "oc describe pod <gvp-rs-pod-name>". |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | 4. If probe failures are observed, check that the PVC is attached properly, and check the RS logs to confirm that the configuration data is read correctly. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | 5. If the rs-init container is failing, check connectivity from RS to Configuration Server and to the database. |
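When the RS container keeps restarting, it can help to estimate how long the liveness probe tolerates failures before the kubelet restarts the container. The following is a rough approximation that ignores `timeoutSeconds` and kubelet scheduling jitter. The first probe runs after `initialDelaySeconds`, and the container is restarted after `failureThreshold` consecutive failures spaced `periodSeconds` apart. That gives restart time ≈ initialDelaySeconds + periodSeconds * (failureThreshold - 1). With the RS `livenessValues` from the values file (30, 120, 3), this is 30 + 120 * 2 = 270 seconds. The helper name below is hypothetical.

```shell
# liveness_restart_after INITIAL_DELAY PERIOD FAILURE_THRESHOLD
# Approximate seconds from container start until a container whose liveness
# probe never succeeds is restarted. Ignores timeoutSeconds and kubelet jitter.
liveness_restart_after() {
  echo $(( $1 + $2 * ($3 - 1) ))
}

# Example with the RS livenessValues (initialDelaySeconds 30,
# periodSeconds 120, failureThreshold 3):
#   liveness_restart_after 30 120 3
```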

| − | + | |Status=No | |

| − | + | }}{{Section | |

| − | + | |sectionHeading=4. GVP Resource Manager | |

| − | + | |anchor=GVPRM | |

| − | + | |alignment=Vertical | |

| − | + | |structuredtext=<br /> | |

| − | + | {{{!}} class="wikitable" data-macro-name="warning" data-macro-id="73364891-e552-4c1e-93b4-beac108d3e78" data-macro-parameters="title=Note" data-macro-schema-version="1" data-macro-body-type="RICH_TEXT" | |

| − | + | {{!}} class="wysiwyg-macro-body"{{!}}Resource Manager does not pass readiness checks until an MCP has registered successfully, because the service is not available without MCPs. |

| − | + | {{!}}} | |

| − | + | ===Persistent Volumes creation=== | |

| − | + | '''Note''': You can skip the PV creation steps if OCS is used to auto-provision the persistent volumes. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | Execute the following command: | + | Create the following PVs which are required for the service deployment. |

| − | < | + | {{{!}} class="wikitable" data-macro-name="warning" data-macro-id="6f74f414-0e53-4c9f-bb6c-babd5adf14b2" data-macro-parameters="icon=false{{!}}title=Note Regarding Persistent Volumes" data-macro-schema-version="1" data-macro-body-type="RICH_TEXT" |

| − | + | {{!}} class="wysiwyg-macro-body"{{!}}If your OpenShift deployment supports dynamic provisioning of Persistent Volumes, you can skip this step; the provisioner creates the volumes. |

| − | </ | + | {{!}}}<u>gvp-rm-01</u> |

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="e41390d2-1784-4d9a-8773-e78bab94395d" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rm-01-pv.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | apiVersion: v1 | ||

| + | kind: PersistentVolume | ||

| + | metadata: | ||

| + | name: gvp-rm-01 | ||

| + | spec: | ||

| + | capacity: | ||

| + | storage: 30Gi | ||

| + | accessModes: | ||

| + | - ReadWriteOnce | ||

| + | persistentVolumeReclaimPolicy: Retain | ||

| + | storageClassName: gvp | ||

| + | nfs: | ||

| + | path: /export/vol1/PAT/gvp/rm-01 | ||

| + | server: 192.168.30.51 | ||

| + | {{!}}}Execute the following command: | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="e5887dce-583f-40fa-a048-93e7d5181941" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | oc create -f gvp-rm-01-pv.yaml | ||

| + | {{!}}}<u>gvp-rm-02</u> | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="a578b363-4be7-4f31-adf2-250aa32e50a8" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rm-02-pv.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | apiVersion: v1 | ||

| + | kind: PersistentVolume | ||

| + | metadata: | ||

| + | name: gvp-rm-02 | ||

| + | spec: | ||

| + | capacity: | ||

| + | storage: 30Gi | ||

| + | accessModes: | ||

| + | - ReadWriteOnce | ||

| + | persistentVolumeReclaimPolicy: Retain | ||

| + | storageClassName: gvp | ||

| + | nfs: | ||

| + | path: /export/vol1/PAT/gvp/rm-02 | ||

| + | server: 192.168.30.51 | ||

| + | {{!}}}Execute the following command: | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="979e11e1-9e95-49b7-898b-6be134d81e38" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | oc create -f gvp-rm-02-pv.yaml | ||

| + | {{!}}}<u>gvp-rm-logs-01</u> | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="e65b20dd-32cd-4a01-b206-40349434e269" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rm-logs-01-pv.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | apiVersion: v1 | ||

| + | kind: PersistentVolume | ||

| + | metadata: | ||

| + | name: gvp-rm-logs-01 | ||

| + | spec: | ||

| + | capacity: | ||

| + | storage: 10Gi | ||

| + | accessModes: | ||

| + | - ReadWriteOnce | ||

| + | persistentVolumeReclaimPolicy: Recycle | ||

| + | storageClassName: gvp | ||

| + | nfs: | ||

| + | path: /export/vol1/PAT/gvp/rm-logs-01 | ||

| + | server: 192.168.30.51 | ||

| + | {{!}}}Execute the following command: | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="ca609291-3587-45f2-8aa9-3a234715ef48" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | oc create -f gvp-rm-logs-01-pv.yaml | ||

| + | {{!}}}<u>gvp-rm-logs-02</u> | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="51d6d4d5-4b16-4a54-a582-e8ad0eca64c4" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rm-logs-02-pv.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | apiVersion: v1 | ||

| + | kind: PersistentVolume | ||

| + | metadata: | ||

| + | name: gvp-rm-logs-02 | ||

| + | spec: | ||

| + | capacity: | ||

| + | storage: 10Gi | ||

| + | accessModes: | ||

| + | - ReadWriteOnce | ||

| + | persistentVolumeReclaimPolicy: Recycle | ||

| + | storageClassName: gvp | ||

| + | nfs: | ||

| + | path: /export/vol1/PAT/gvp/rm-logs-02 | ||

| + | server: 192.168.30.51 | ||

| + | {{!}}}Execute the following command: | ||

| + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="0e81e8e1-e57c-446a-ade3-b0de9b2e2c66" data-macro-parameters="language=bash{{!}}theme=Emacs" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | ||

| + | {{!}} class="wysiwyg-macro-body"{{!}} | ||

| + | oc create -f gvp-rm-logs-02-pv.yaml | ||

| + | {{!}}} | ||
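The two log volumes above (gvp-rm-logs-01 and gvp-rm-logs-02) differ only in their name and NFS path, so you can generate them from one template instead of editing each file by hand. The following is a minimal sketch. It assumes the example NFS server (192.168.30.51) and export path (/export/vol1/PAT/gvp) from this page, so substitute your own values. It runs `oc create` only when the `oc` CLI is installed and already logged in (`oc whoami` succeeds). Otherwise it just writes the manifests.

```shell
#!/usr/bin/env bash
# Sketch: generate the gvp-rm-logs-01/02 PersistentVolume manifests from a
# single template. NFS_SERVER and NFS_BASE are the example values from this
# page; replace them with your own NFS export.
set -euo pipefail

NFS_SERVER="192.168.30.51"
NFS_BASE="/export/vol1/PAT/gvp"

for idx in 01 02; do
  name="gvp-rm-logs-${idx}"
  # Unquoted heredoc so ${name}, ${idx}, and the NFS variables are expanded.
  cat > "${name}-pv.yaml" <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: gvp
  nfs:
    path: ${NFS_BASE}/rm-logs-${idx}
    server: ${NFS_SERVER}
EOF
  echo "wrote ${name}-pv.yaml"
done

# Create the volumes only if the oc CLI exists and has an active login.
if command -v oc >/dev/null 2>&1 && oc whoami >/dev/null 2>&1; then
  for idx in 01 02; do
    oc create -f "gvp-rm-logs-${idx}-pv.yaml"
  done
  oc get pv gvp-rm-logs-01 gvp-rm-logs-02
fi
```

Keeping the manifests generated from one loop helps prevent mismatched names or paths between the two volumes.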

===Install Helm chart=== | ===Install Helm chart=== | ||

Download the required Helm chart release from the JFrog repository and install. Refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Deploy|anchor=HelmchaURLs|display text=Helm Chart URLs}}. | Download the required Helm chart release from the JFrog repository and install. Refer to {{Link-AnywhereElse|product=GVP|version=Current|manual=GVPPEGuide|topic=Deploy|anchor=HelmchaURLs|display text=Helm Chart URLs}}. | ||

| − | + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="18d7d1d8-bad3-4ebc-851c-10bf3d232edb" data-macro-parameters="language=bash{{!}}theme=Emacs{{!}}title=Install Helm Chart" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | |

| − | helm install gvp-rm ./<gvp-rm-helm-artifact> -f gvp-rm-values.yaml | + | {{!}} class="wysiwyg-macro-body"{{!}} |

| − | + | helm install gvp-rm ./<gvp-rm-helm-artifact> -f gvp-rm-values.yaml | |

| + | {{!}}}At a minimum, update the following values in your values.yaml: | ||

| − | + | *<docker-repo> - Set to the Docker repository that hosts your Private Edition artifacts |

| + | *<credential-name> - Set to the name of your image pull secret | ||
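You can fill in the two placeholders with `sed` before running `helm install`. This is a minimal sketch. The registry and pull secret values (`registry.example.com/genesys`, `my-pull-secret`) are hypothetical, so replace them with your own. If gvp-rm-values.yaml does not exist, the script writes a small stand-in that contains only the two placeholder lines so the example can run on its own. Remove that part when you work with the real file. `sed -i.bak` keeps a backup copy (gvp-rm-values.yaml.bak) and works with both GNU and BSD sed.

```shell
#!/usr/bin/env bash
# Sketch: replace <docker-repo> and <credential-name> in gvp-rm-values.yaml.
# DOCKER_REPO and PULL_SECRET are hypothetical example values.
set -euo pipefail

VALUES_FILE="gvp-rm-values.yaml"
DOCKER_REPO="registry.example.com/genesys"   # hypothetical registry
PULL_SECRET="my-pull-secret"                 # hypothetical pull secret name

# Demo only: create a stand-in containing just the two placeholder lines
# when the real values file is not present.
if [ ! -f "$VALUES_FILE" ]; then
  cat > "$VALUES_FILE" <<'EOF'
image:
  registry: <docker-repo>
imagePullSecrets:
  - name: "<credential-name>"
EOF
fi

# Replace the placeholders in place. '|' is the sed delimiter because the
# registry value may contain '/'.
sed -i.bak \
  -e "s|<docker-repo>|${DOCKER_REPO}|g" \
  -e "s|<credential-name>|${PULL_SECRET}|g" \
  "$VALUES_FILE"

# Stop if any placeholder is still present.
if grep -qE '<docker-repo>|<credential-name>' "$VALUES_FILE"; then
  echo "placeholders remain in $VALUES_FILE" >&2
  exit 1
fi
echo "updated $VALUES_FILE"
```

When the check passes, run the `helm install` command shown above with the updated file.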

| − | + | {{{!}} class="wikitable" data-macro-name="code" data-macro-id="f7c33da8-5ba3-41fb-8f92-cc804bfb7809" data-macro-parameters="language=yml{{!}}theme=Emacs{{!}}title=gvp-rm-values.yaml" data-macro-schema-version="1" data-macro-body-type="PLAIN_TEXT" | |

| − | + | {{!}} class="wysiwyg-macro-body"{{!}} | |

| − | + | ## Global Parameters | |

| − | + | ## Add labels to all the deployed resources | |

| − | + | ## | |

| − | + | labels: | |

| − | ## Global Parameters | + | enabled: true |

| − | ## Add labels to all the deployed resources | + | serviceGroup: "gvp" |

| − | ## | + | componentType: "shared" |

| − | labels: | ||

| − | |||

| − | |||

| − | |||

| − | ## Primary App Configuration | + | ## Primary App Configuration |

| − | ## | + | ## |

| − | # primaryApp: | + | # primaryApp: |

| − | # type: ReplicaSet | + | # type: ReplicaSet |

| − | # Should include the defaults for replicas | + | # Should include the defaults for replicas |

| − | deployment: | + | deployment: |

| − | + | replicaCount: 2 | |

| − | + | deploymentEnv: "UPDATE_ENV" | |

| − | + | namespace: gvp | |

| − | + | clusterDomain: "svc.cluster.local" | |

| − | nameOverride: "" | + | nameOverride: "" |

| − | fullnameOverride: "" | + | fullnameOverride: "" |

| − | image: | + | image: |

| − | + | registry: <docker-repo> | |

| − | + | gvprmrepository: gvp/gvp_rm | |

| − | + | cfghandlerrepository: gvp/gvp_rm_cfghandler | |

| − | + | snmprepository: gvp/gvp_snmp | |

| − | + | gvprmtestrepository: gvp/gvp_rm_test | |

| − | + | cfghandlertag: | |

| − | + | rmtesttag: | |

| − | + | rmtag: | |

| − | + | snmptag: v9.0.040.07 | |

| − | + | pullPolicy: Always | |

| − | + | imagePullSecrets: | |

| − | + | - name: "<credential-name>" | |

| − | dnsConfig: | + | dnsConfig: |

| − | + | options: | |

| − | + | - name: ndots | |

| − | + | value: "3" | |

| − | # Pod termination grace period 15 mins. | + | # Pod termination grace period 15 mins. |

| − | gracePeriodSeconds: 900 | + | gracePeriodSeconds: 900 |

| − | ## liveness and readiness probes | + | ## liveness and readiness probes |

| − | ## !!! THESE OPTIONS SHOULD NOT BE CHANGED UNLESS INSTRUCTED BY GENESYS !!! | + | ## !!! THESE OPTIONS SHOULD NOT BE CHANGED UNLESS INSTRUCTED BY GENESYS !!! |

| − | livenessValues: | + | livenessValues: |

| − | + | path: /rm/liveness | |

| − | + | initialDelaySeconds: 60 | |

| − | + | periodSeconds: 90 | |

| − | + | timeoutSeconds: 20 | |

| − | + | failureThreshold: 3 | |

| − | readinessValues: | + | readinessValues: |

| − | + | path: /rm/readiness | |

| − | + | initialDelaySeconds: 10 | |

| − | + | periodSeconds: 60 | |

| − | + | timeoutSeconds: 20 | |

| − | + | failureThreshold: 3 | |

| − | ## PVCs defined | + | ## PVCs defined |

| − | volumes: | + | volumes: |

| − | + | billingpvc: | |

| − | + | storageClass: managed-premium | |

| − | + | claimSize: 20Gi | |

| − | + | mountPath: "/rm" | |

| − | + | ||

| − | + | ## Define RM log storage volume type | |

| − | + | rmLogStorage: | |

| − | + | volumeType: | |

| − | + | persistentVolume: | |

| − | + | enabled: false | |

| − | + | storageClass: disk-premium | |

| − | + | claimSize: 50Gi | |

| + | accessMode: ReadWriteOnce | ||

| + | hostPath: | ||

| + | enabled: true | ||

| + | path: /mnt/log | ||

| + | emptyDir: | ||

| + | enabled: false | ||

| + | containerMountPath: | ||

| + | path: /mnt/log | ||

| + | |||

| + | ## FluentBit Settings | ||

| + | fluentBitSidecar: | ||

| + | enabled: false | ||

| + | |||

| + | ## Define service(s) for application. Fields may need to be modified based on `type` | ||

| + | service: | ||

| + | type: ClusterIP | ||

| + | port: 5060 | ||

| + | rmHealthCheckAPIPort: 8300 | ||

| − | ## | + | ## ConfigMaps with Configuration |

| − | + | ## Use Config Map for creating environment variables | |

| − | + | context: | |

| − | + | env: | |

| − | + | cfghandler: | |

| + | CFGSERVER: gvp-configserver.gvp.svc.cluster.local | ||

| + | CFGSERVERBACKUP: gvp-configserver.gvp.svc.cluster.local | ||

| + | CFGPORT: "8888" | ||

| + | CFGAPP: "default" | ||

| + | RMAPP: "azure_rm" | ||

| + | RMFOLDER: "Applications\\RM_MicroService\\RM_Apps" | ||

| + | HOSTFOLDER: "Hosts\\RM_MicroService" | ||

| + | MCPFOLDER: "MCP_Configuration_Unit\\MCP_LRG" | ||

| + | SNMPFOLDER: "Applications\\RM_MicroService\\SNMP_Apps" | ||

| + | EnvironmentType: "prod" | ||

| + | CONFSERVERAPP: "confserv" | ||

| + | RSAPP: "azure_rs" | ||

| + | SNMPAPP: "azure_rm_snmp" | ||

| + | STDOUT: "true" | ||

| + | VOICEMAILSERVICEDIDNUMBER: "55551111" | ||

| − | + | RMCONFIG: | |

| − | + | rm: | |

| − | + | sip-header-for-dnis: "Request-Uri" | |

| − | + | ignore-gw-lrg-configuration: "true" | |

| − | + | ignore-ruri-tenant-dbid: "true" | |

| − | + | log: | |

| − | + | verbose: "trace" | |

| − | + | subscription: | |

| − | + | sip.transport.dnsharouting: "true" | |

| − | + | sip.headerutf8verification: "false" | |

| − | + | sip.transport.setuptimer.tcp: "5000" | |

| − | + | sip.threadpoolsize: "1" | |

| − | + | registrar: | |

| − | + | sip.transport.dnsharouting: "true" | |

| − | + | sip.headerutf8verification: "false" | |

| − | + | sip.transport.setuptimer.tcp: "5000" | |

| − | + | sip.threadpoolsize: "1" | |

| − | + | proxy: | |

| − | + | sip.transport.dnsharouting: "true" | |

| − | + | sip.headerutf8verification: "false" | |

| + | sip.transport.setuptimer.tcp: "5000" | ||

| + | sip.threadpoolsize: "16" | ||

| + | sip.maxtcpconnections: "1000" | ||

| + | monitor: | ||

| + | sip.transport.dnsharouting: "true" | ||

| + | sip.maxtcpconnections: "1000" | ||

| + | sip.headerutf8verification: "false" | ||

| + | sip.transport.setuptimer.tcp: "5000" | ||

| + | sip.threadpoolsize: "1" | ||

| + | ems: | ||

| + | rc.cdr.local_queue_path: "/rm/ems/data/cdrQueue_rm.db" | ||

| + | rc.ors.local_queue_path: "/rm/ems/data/orsQueue_rm.db" | ||

| − | + | # Secrets are stored as Kubernetes secrets by default; CSI is optional |

| − | + | secret: | |

| − | + | # keyVaultSecret switches between secret types (Kubernetes secret or CSI). If keyVaultSecret is set to false, the Kubernetes secret is used |

| − | + | keyVaultSecret: false | |

| − | + | # If keyVaultSecret is set to false, the parameters below are not used. |

| − | + | configserverProviderClassName: gvp-configserver-secret | |

| − | + | cfgSecretFileNameForCfgUsername: configserver-username | |

| − | + | cfgSecretFileNameForCfgPassword: configserver-password | |

| − | + | # If keyVaultSecret is set to true, the parameters below are not used. |

| − | + | cfgServerSecretName: configserver-secret | |

| − | + | cfgSecretKeyNameForCfgUsername: username | |

| − | + | cfgSecretKeyNameForCfgPassword: password | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | # | + | ## Ingress configuration |

| − | + | ingress: | |

| − | + | enabled: false | |

| − | + | annotations: {} | |

| − | + | # kubernetes.io/ingress.class: nginx | |

| − | + | # kubernetes.io/tls-acme: "true" | |

| − | + | paths: [] | |

| − | + | hosts: | |

| − | + | - chart-example.local | |

| − | + | tls: [] | |

| − | + | # - secretName: chart-example-tls | |

| − | + | # hosts: | |

| + | # - chart-example.local | ||

| + | networkPolicies: | ||

| + | enabled: false | ||

| + | sip: | ||

| + | serviceName: sipnode | ||

| − | ## | + | ## primaryApp resource requests and limits |

| − | + | ## ref: <nowiki>http://kubernetes.io/docs/user-guide/compute-resources/</nowiki> | |

| − | + | ## | |

| − | + | resourceForRM: | |

| − | + | # We usually recommend not to specify default resources and to leave this as a conscious | |

| − | + | # choice for the user. This also increases chances charts run on environments with little | |

| − | + | # resources, such as Minikube. If you do want to specify resources, uncomment the following | |

| − | + | # lines, adjust them as necessary, and remove the curly braces after 'resources:'. | |