Difference between revisions of "PEC-REP/Current/GIMPEGuide/PlanningGSP"

(Published) |

|||

| Line 2: | Line 2: | ||

|DisplayName=Before you begin GSP deployment | |DisplayName=Before you begin GSP deployment | ||

|Context=Find out what to do before deploying GIM Stream Processor (GSP). | |Context=Find out what to do before deploying GIM Stream Processor (GSP). | ||

| + | |ServiceId=3b2bdfd2-9eb1-4a99-a181-356f2704bc02 | ||

|IncludedServiceId=c39fe496-c79e-4846-b451-1bc8bedb126b | |IncludedServiceId=c39fe496-c79e-4846-b451-1bc8bedb126b | ||

|LimitationsStatus=No | |LimitationsStatus=No | ||

| Line 16: | Line 17: | ||

|SectionThirdPartyItem={{SectionThirdPartyItem | |SectionThirdPartyItem={{SectionThirdPartyItem | ||

|ThirdPartyItem=2f69518e-33af-4c0f-adcc-a98e5427e5e0 | |ThirdPartyItem=2f69518e-33af-4c0f-adcc-a98e5427e5e0 | ||

| + | |Notes=The Kafka topics that GSP will consume and produce must exist in the Kafka configuration. See more details [[{{FULLPAGENAME}}#Kafka|below]]. | ||

| + | }}{{SectionThirdPartyItem | ||

| + | |ThirdPartyItem=9ae24d71-5263-4a2e-8d1b-f65660f1cdc0 | ||

| + | |Notes=Both GSP and GCA require persistent storage to store data during processing. You can use the same storage account for both services. | ||

| + | }}{{SectionThirdPartyItem | ||

| + | |ThirdPartyItem=3e732261-b78e-4921-b6a6-08ee0a322ca1 | ||

| + | }}{{SectionThirdPartyItem | ||

| + | |ThirdPartyItem=9196f7d6-6782-467f-a664-627a2c293000 | ||

| + | |Variation=No | ||

}} | }} | ||

|StorageStatus=No | |StorageStatus=No | ||

| − | |StorageText= | + | |StorageText=Like GCA, GSP uses S3-compatible storage to store data during processing. GSP stores data such as GSP checkpoints, savepoints, and high availability data. By default, GSP is configured to use Azure Blob Storage, but you can also use S3-compatible storage provided by other cloud platforms. Genesys expects you to use the same storage account for GSP and GCA. |

| + | {{Section | ||

| + | |sectionHeading= | ||

| + | |anchor=S3 | ||

| + | |alignment=Vertical | ||

| + | |structuredtext=To create S3-compatible storage, do one of the following: | ||

| − | {{ | + | *[[{{FULLPAGENAME}}#s3OpenShift|OpenShift: Create an Object Bucket Claim]] |

| + | *[[{{FULLPAGENAME}}#s3GKE|GKE: Create bucket storage]] | ||

| + | |||

| + | {{AnchorDiv|s3OpenShift}} | ||

| + | ===OpenShift: Create an Object Bucket Claim=== | ||

| + | Create an S3 Object Bucket Claim (OBC) if none exists. | ||

| + | |||

| + | #Create a '''gsp-obc.yaml''' file: | ||

| + | |||

| + | #:<source lang="bash">apiVersion: objectbucket.io/v1alpha1 | ||

| + | kind: ObjectBucketClaim | ||

| + | metadata: | ||

| + | name: gim | ||

| + | namespace: gsp | ||

| + | spec: | ||

| + | generateBucketName: gim | ||

| + | storageClassName: openshift-storage.noobaa.io</source> | ||

| + | #Execute the command to create the OBC: | ||

| + | #:<source lang="text">oc create -f gsp-obc.yaml -n gsp</source> | ||

| + | #: The following Kubernetes resources are created automatically: | ||

| + | #*:An ObjectBucket (OB), which contains the bucket endpoint information, a reference to the OBC, and a reference to the storage class. | ||

| + | #*A ConfigMap in the same namespace as the OBC, which contains the endpoint to which applications connect in order to consume the object interface. | ||

| + | #*A Secret in the same namespace as the OBC, which contains the key-pairs needed to access the bucket. | ||

| + | #: Note the following: | ||

| + | #*The name of the secret and the configMap are the same as the OBC name. | ||

| + | #*The bucket name is created with a randomized suffix. | ||

| + | #Get S3 data. | ||

| + | #: You need to know details of your S3 object in order to populate Helm chart override values for the service. | ||

| + | ##: Execute the following command, where <tt>gim</tt> is the name of the configMap associated with the OBC: | ||

| + | ##: <source lang="text">oc get cm gim -n gsp -o yaml -o jsonpath={.data}</source> | ||

| + | ##: The result shows data such as BUCKET_HOST, BUCKET_NAME, BUCKET_PORT, and so on. | ||

| + | ## Execute the following commands to get the values of the keys you require for access, where <tt>gim</tt> is the name of the secret associated with the OBC: | ||

| + | ##*To get the value of the access key: | ||

| + | ##*:<source lang="text">oc get secret gim -n gsp -o yaml -o jsonpath={.data.AWS_ACCESS_KEY_ID} | base64 --decode</source> | ||

| + | ##*To get the value of the secret key: | ||

| + | ##*:<source lang="text">oc get secret gim -n gsp -o yaml -o jsonpath={.data.AWS_SECRET_ACCESS_KEY} | base64 --decode</source> | ||

| + | #: Use the S3 data to populate storage-related Helm chart override values for the service (for GSP, see {{Link-SomewhereInThisVersion|manual=GIMPEGuide|topic=ConfigureGSP|anchor=Storage|display text=Configure S3-compatible storage}}). | ||

| + | #: {{NoteFormat|You can also obtain the S3 data from the OpenShift console: Go to the '''Object bucket claims''' section under the '''Storage''' menu, and click on the required OBC resource. The data will be at the bottom of the page.|2}} | ||

| + | |||

| + | {{AnchorDiv|s3GKE}} | ||

| + | ===GKE: Create bucket storage=== | ||

| + | |Status=No | ||

| + | }}{{Section | ||

| + | |alignment=Horizontal | ||

| + | |Media=Image | ||

| + | |image=Pe_gsp_gcp-console_cloudstorage.png | ||

| + | |AltText=A screenshot of the GCP Cloud Console showing the Cloud Storage menu | ||

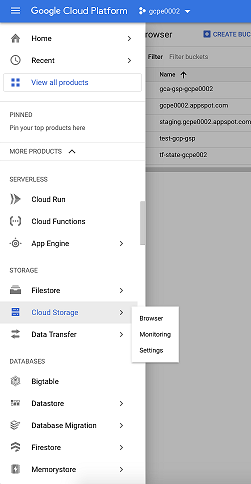

| + | |structuredtext=In the Google Cloud Platform (GCP) Cloud Console, select '''Cloud Storage''' and then choose '''Browser''' from the drop-down menu. | ||

| + | |Status=No | ||

| + | }}{{Section | ||

| + | |alignment=Horizontal | ||

| + | |Media=Image | ||

| + | |image=Pe_gsp_gcp-console_cloudstoragebrowser.png | ||

| + | |AltText=A screenshot of the GCP Cloud Console showing the Cloud Storage Browser screen | ||

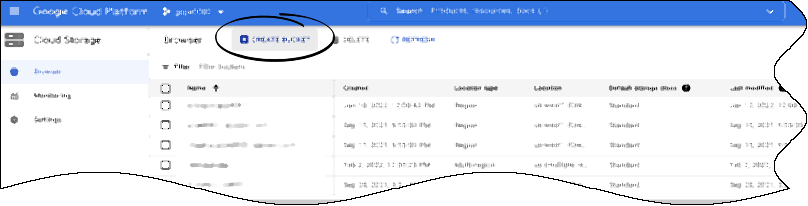

| + | |structuredtext=On the '''Cloud Storage > Browser''' screen, click '''CREATE BUCKET'''. | ||

| + | |Status=No | ||

| + | }}{{Section | ||

| + | |alignment=Horizontal | ||

| + | |Media=Image | ||

| + | |image=Pe_gsp_gcp-console_cloudstoragecreatebucket.png | ||

| + | |AltText=A screenshot of the GCP Cloud Console showing the Cloud Storage Create Bucket screen | ||

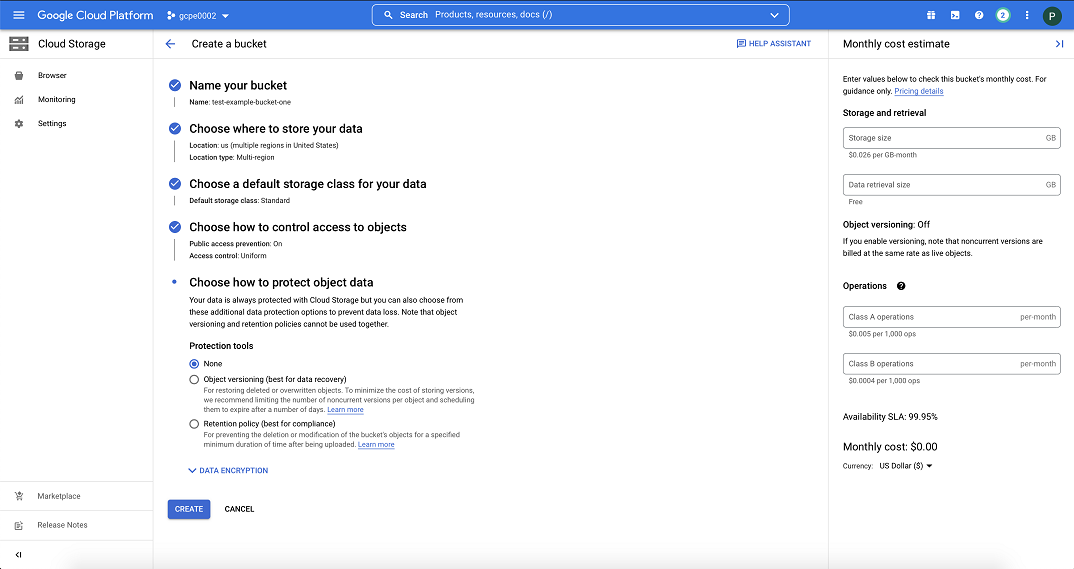

| + | |structuredtext=On the '''Create a bucket''' screen, specify the bucket details: | ||

| + | *'''Name your bucket''' — Enter a unique name for the bucket. | ||

| + | *'''Choose where to store your data''' — Select the geo-redundancy type (multi-region, dual-region, or region) and location where the data will be stored. <!--{{Editgrn_open}}<font color=red>'''Writer's note:''' Can we provide a recommendation for type? The screenshot in the PAT team instructions shows Multi-region selected, but from the description on https://cloud.google.com/storage/docs/locations, wouldn't Region be better?</font>{{Editgrn_close}}--> | ||

| + | *:{{NoteFormat|You cannot change the location after the bucket is created.}} | ||

| + | *'''Choose a default storage class for your data''' — Select the Standard storage class. | ||

| + | *'''Choose how to control access to objects''' — Check the box to enforce public access prevention on this bucket, to protect the bucket from being accidentally exposed to the public. When you enforce public access prevention, no one can make data in applicable buckets public through IAM policies or ACLs. | ||

| + | *'''Choose how to protect object data''' — GSP does not require any protection tools. | ||

| + | |||

| + | Click '''CREATE BUCKET''' to create the bucket. | ||

| + | |||

| + | The '''Bucket details''' screen displays. Verify and, if necessary, edit the details. | ||

| + | |||

| + | <nowiki/> | ||

| + | |Status=No | ||

| + | }}{{Section | ||

| + | |alignment=Horizontal | ||

| + | |Media=Image | ||

| + | |image=Pe_gsp_gcp-console_cloudstoragesettings_interop.png | ||

| + | |AltText=A screenshot of the GCP Cloud Console showing the Cloud Storage Settings screen | ||

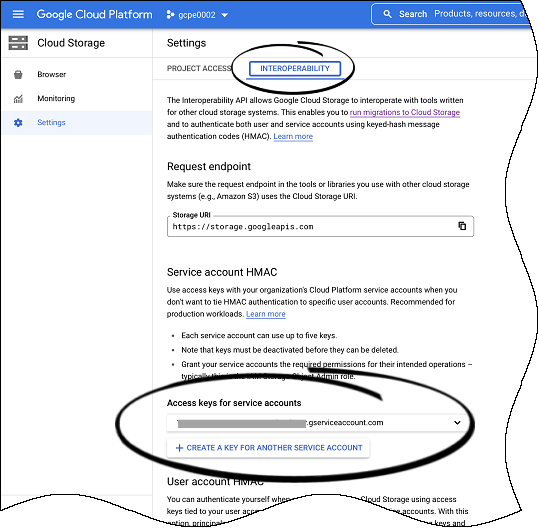

| + | |structuredtext=It is important to create a storage access key. | ||

| + | |||

| + | <!--{{Editgrn_open}}<font color=red>'''Writer's note:''' (1) Please esp. confirm step 2 because it doesn't exactly match the screenshot. Highlights flag other additions I made. (2) Given that GSP is not one of the first services to be deployed in a PE instance, it's surely likely that one or more service accounts will already exist. Do we have a recommendation about whether to use an existing service account or create a new one?</font>{{Editgrn_close}}--> | ||

| + | # Go to '''Settings > Interoperability'''. | ||

| + | # If the service account you want to use does not already exist, click '''CREATE A KEY FOR SERVICE ACCOUNT'''. Otherwise, click '''CREATE A KEY FOR ANOTHER SERVICE ACCOUNT,''' select the service account in the dialog box that displays, and click '''CREATE KEY'''. | ||

| + | #: '''Note:''' The service account is the GCP service account associated with the Google Cloud project. The GCP service account enables applications to authenticate and access Google Cloud resources and services. It is not related to the Kubernetes service account created by the Helm chart. Depending on how you want to organize your Google Cloud resources, you can have multiple GCP projects and service accounts. | ||

| + | |Status=No | ||

| + | }}{{Section | ||

| + | |alignment=Horizontal | ||

| + | |Media=Image | ||

| + | |image=Pe_gsp_gcp-console_cloudstoragesettings_accesskey.png | ||

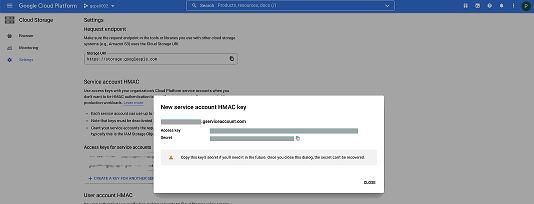

| + | |AltText=A screenshot of the GCP Cloud Console showing the Cloud Storage New Service Account HMAC screen | ||

| + | |structuredtext=The Access Key and Secret are generated and displayed in a dialog box. Copy and securely save these details, which you use to populate storage-related Helm chart override values for the applicable service (see {{Link-SomewhereInThisVersion|manual=GIMPEGuide|topic=ConfigureGSP|anchor=Storage|display text=Configure S3-compatible storage}}). | ||

| + | {{NoteFormat|You cannot recover the Secret once the dialog box is closed.}} | ||

| + | |Status=No | ||

| + | }} | ||

|NetworkStatus=No | |NetworkStatus=No | ||

| − | |NetworkText= | + | |NetworkText=No special network requirements. Network bandwidth must be sufficient to handle the volume of data to be transferred into and out of Kafka. |

|BrowserStatus=No | |BrowserStatus=No | ||

|DependenciesStatus=No | |DependenciesStatus=No | ||

| − | |DependenciesText= | + | |DependenciesText=There are no strict dependencies between the Genesys Info Mart services, but the logic of your particular pipeline might require Genesys Info Mart services to be deployed in a particular order. Depending on the order of deployment, there might be temporary data inconsistencies until all the Genesys Info Mart services are operational. For example, GSP looks for the GCA snapshot when it starts; if GCA has not yet been deployed, GSP will encounter unknown configuration objects and resources until the snapshot becomes available. |

| + | |||

| + | There are other private edition services you must deploy before Genesys Info Mart. For detailed information about the recommended order of services deployment, see {{SuiteLevelLink|deployorder}}. | ||

|GDPRStatus=No | |GDPRStatus=No | ||

| − | |GDPRText={{ | + | |GDPRText=Not applicable, provided your Kafka retention policies have not been set to more than 30 days. GSP does not store information beyond ephemeral data used during processing. |

| + | {{AnchorDiv|Kafka}} | ||

| + | ==Kafka configuration== | ||

| + | Unless Kafka has been configured to auto-create topics, ensure that the Kafka topics GSP requires have been created in the Kafka configuration. The following table shows the topic names GSP expects to use. An entry in the '''Customizable GSP parameter''' column indicates that GSP supports using a customized topic name. If you use customized topic names, you must override the applicable values in the '''values.yaml''' file (see {{Link-SomewhereInThisVersion|manual=GIMPEGuide|topic=ConfigureGSP|anchor=OverrideValues|display text=Override Helm chart values}}). | ||

| + | |||

| + | The topics represent various data domains. If a topic does not exist, GSP will never receive data for that domain. If the topic exists but the customizable parameter value is empty in the GSP configuration, data from that domain will be discarded. | ||

| + | {{{!}} | ||

| + | !'''Topic name''' | ||

| + | !'''Customizable GSP parameter''' | ||

| + | !'''Description''' | ||

| + | {{!}}- | ||

| + | {{!}} colspan="3"{{!}}'''GSP consumes the following topics:''' | ||

| + | {{!}}- | ||

| + | {{!}}voice-callthread | ||

| + | {{!}} | ||

| + | {{!}}Name of the input topic with voice interactions | ||

| + | {{!}}- | ||

| + | {{!}}voice-agentstate | ||

| + | {{!}} | ||

| + | {{!}}Name of the input topic with voice agent states | ||

| + | {{!}}- | ||

| + | {{!}}voice-outbound | ||

| + | {{!}} | ||

| + | {{!}}Name of the input topic with outbound (CX Contact) activity | ||

| + | {{!}}- | ||

| + | {{!}}digital-itx | ||

| + | {{!}}digitalItx | ||

| + | {{!}}Name of the input topic with digital interactions | ||

| + | {{!}}- | ||

| + | {{!}}digital-agentstate | ||

| + | {{!}}digitalAgentStates | ||

| + | {{!}}Name of the input topic with digital agent states | ||

| + | {{!}}- | ||

| + | {{!}}gca-cfg | ||

| + | {{!}}cfg | ||

| + | {{!}}Name of the input topic with configuration data | ||

| + | {{!}}- | ||

| + | {{!}} colspan="3"{{!}}'''GSP produces the following topics:''' | ||

| + | {{!}}- | ||

| + | {{!}}gsp-ixn | ||

| + | {{!}}interactions | ||

| + | {{!}}Name of the output topic for interactions | ||

| + | {{!}}- | ||

| + | {{!}}gsp-sm | ||

| + | {{!}}agentStates | ||

| + | {{!}}Name of the output topic for agent states | ||

| + | {{!}}- | ||

| + | {{!}}gsp-outbound | ||

| + | {{!}}outbound | ||

| + | {{!}}Name of the output topic for outbound (CX Contact) activity | ||

| + | {{!}}- | ||

| + | {{!}}gsp-custom | ||

| + | {{!}}custom | ||

| + | {{!}}Name of the output topic for custom reporting | ||

| + | {{!}}- | ||

| + | {{!}}gsp-cfg | ||

| + | {{!}}cfg | ||

| + | {{!}}Name of the output topic for configuration reporting | ||

| + | {{!}}- | ||

| + | <!--{{!}}gsp-mn {{Editgrn_open}}<font color=red>'''Writer's note:''' This row will be commented out until media-neutral is officially supported.</font>{{Editgrn_close}} | ||

| + | {{!}}mediaNeutral | ||

| + | {{!}}Name of the output topic for media-neutral agent states--> | ||

| + | {{!}}} | ||

|PEPageType=bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | |PEPageType=bf21dc7c-597d-4bbe-8df2-a2a64bd3f167 | ||

}} | }} | ||

Revision as of 19:53, March 30, 2022


Find out what to do before deploying GIM Stream Processor (GSP).

Limitations and assumptions

Not applicable

Download the Helm charts

GIM Stream Processor (GSP) is the only service that runs in the GSP Docker container. The Helm charts included with the GSP release provision GSP and any Kubernetes infrastructure necessary for GSP to run.

See Helm charts and containers for Genesys Info Mart for the Helm chart version you must download for your release.

For information about how to download the Helm charts, see Downloading your Genesys Multicloud CX containers.

Third-party prerequisites

For information about setting up your Genesys Multicloud CX private edition platform, see Software requirements.

The following table lists the third-party prerequisites for GSP.

| Name | Version | Purpose | Notes |
|---|---|---|---|
| Kafka | 2.x | Message bus. | The Kafka topics that GSP will consume and produce must exist in the Kafka configuration. For details, see Kafka configuration below. |
| Object storage | | Persistent or shared data storage, such as Amazon S3, Azure Blob Storage, or Google Cloud Storage. | Both GSP and GCA require persistent storage to store data during processing. You can use the same storage account for both services. |
| A container image registry and Helm chart repository | | Used for downloading Genesys containers and Helm charts into the customer's repository to support a CI/CD pipeline. You can use any Docker OCI compliant registry. | |
| Command Line Interface | | The command line interface tools to log in and work with the Kubernetes clusters. | |

Storage requirements

Like GCA, GSP uses S3-compatible storage to store data during processing. GSP stores data such as GSP checkpoints, savepoints, and high availability data. By default, GSP is configured to use Azure Blob Storage, but you can also use S3-compatible storage provided by other cloud platforms. Genesys expects you to use the same storage account for GSP and GCA.

To create S3-compatible storage, do one of the following:

OpenShift: Create an Object Bucket Claim

Create an S3 Object Bucket Claim (OBC) if none exists.

- Create a gsp-obc.yaml file:

    apiVersion: objectbucket.io/v1alpha1
    kind: ObjectBucketClaim
    metadata:
      name: gim
      namespace: gsp
    spec:
      generateBucketName: gim
      storageClassName: openshift-storage.noobaa.io

- Execute the command to create the OBC:

oc create -f gsp-obc.yaml -n gsp

- The following Kubernetes resources are created automatically:

- An ObjectBucket (OB), which contains the bucket endpoint information, a reference to the OBC, and a reference to the storage class.

- A ConfigMap in the same namespace as the OBC, which contains the endpoint to which applications connect in order to consume the object interface.

- A Secret in the same namespace as the OBC, which contains the key-pairs needed to access the bucket.

- Note the following:

- The Secret and the ConfigMap have the same name as the OBC.

- The bucket name is created with a randomized suffix.

- Get S3 data.

- You need to know details of your S3 object in order to populate Helm chart override values for the service.

- Execute the following command, where gim is the name of the configMap associated with the OBC:

oc get cm gim -n gsp -o yaml -o jsonpath={.data}

- The result shows data such as BUCKET_HOST, BUCKET_NAME, BUCKET_PORT, and so on.

- Execute the following commands to get the values of the keys you require for access, where gim is the name of the secret associated with the OBC:

- To get the value of the access key:

oc get secret gim -n gsp -o yaml -o jsonpath={.data.AWS_ACCESS_KEY_ID} | base64 --decode

- To get the value of the secret key:

oc get secret gim -n gsp -o yaml -o jsonpath={.data.AWS_SECRET_ACCESS_KEY} | base64 --decode


- Use the S3 data to populate storage-related Helm chart override values for the service (for GSP, see Configure S3-compatible storage).

- Tip: You can also obtain the S3 data from the OpenShift console. Go to the Object bucket claims section under the Storage menu, and click the required OBC resource. The data is at the bottom of the page.
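
The lookup steps above can be gathered into one small script. The following is only a sketch: it assumes the OBC, ConfigMap, and Secret are all named gim in the gsp namespace, as in the example, and the helper names `decode_b64`, `get_field`, and `collect_s3_data` are hypothetical, not part of `oc` or GSP.

```shell
# Sketch only: helper names are hypothetical; gim and gsp are the example
# OBC name and namespace used in the steps above.

# Decode a base64-encoded Secret value passed as the first argument.
decode_b64() {
  printf '%s' "$1" | base64 --decode
}

# Read one field from the data section of a ConfigMap or Secret.
# $1 = resource kind (cm or secret), $2 = field name,
# $3 = resource name, $4 = namespace
get_field() {
  oc get "$1" "$3" -n "$4" -o jsonpath="{.data.$2}"
}

# Print the values needed for the storage-related Helm override values.
# $1 = OBC name (default: gim), $2 = namespace (default: gsp)
collect_s3_data() {
  local name="${1:-gim}" ns="${2:-gsp}"
  echo "BUCKET_HOST=$(get_field cm BUCKET_HOST "$name" "$ns")"
  echo "BUCKET_NAME=$(get_field cm BUCKET_NAME "$name" "$ns")"
  echo "BUCKET_PORT=$(get_field cm BUCKET_PORT "$name" "$ns")"
  # Secret values are stored base64-encoded, so decode them.
  echo "AWS_ACCESS_KEY_ID=$(decode_b64 "$(get_field secret AWS_ACCESS_KEY_ID "$name" "$ns")")"
  echo "AWS_SECRET_ACCESS_KEY=$(decode_b64 "$(get_field secret AWS_SECRET_ACCESS_KEY "$name" "$ns")")"
}
```

Calling `collect_s3_data` with no arguments uses the gim and gsp defaults. The output includes the secret key in clear text, so avoid running it where terminal output is logged.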

GKE: Create bucket storage

In the Google Cloud Platform (GCP) Cloud Console, select Cloud Storage and then choose Browser from the drop-down menu.

On the Cloud Storage > Browser screen, click CREATE BUCKET.

On the Create a bucket screen, specify the bucket details:

- Name your bucket — Enter a unique name for the bucket.

- Choose where to store your data — Select the geo-redundancy type (multi-region, dual-region, or region) and location where the data will be stored.

- Important: You cannot change the location after the bucket is created.

- Choose a default storage class for your data — Select the Standard storage class.

- Choose how to control access to objects — Check the box to enforce public access prevention on this bucket, to protect the bucket from being accidentally exposed to the public. When you enforce public access prevention, no one can make data in applicable buckets public through IAM policies or ACLs.

- Choose how to protect object data — GSP does not require any protection tools.

Click CREATE BUCKET to create the bucket.

The Bucket details screen displays. Verify and, if necessary, edit the details.

Next, create a storage access key, which GSP requires to access the bucket:

- Go to Settings > Interoperability.

- If the service account you want to use does not already exist, click CREATE A KEY FOR SERVICE ACCOUNT. Otherwise, click CREATE A KEY FOR ANOTHER SERVICE ACCOUNT, select the service account in the dialog box that displays, and click CREATE KEY.

- Note: The service account is the GCP service account associated with the Google Cloud project. The GCP service account enables applications to authenticate and access Google Cloud resources and services. It is not related to the Kubernetes service account created by the Helm chart. Depending on how you want to organize your Google Cloud resources, you can have multiple GCP projects and service accounts.

The Access Key and Secret are generated and displayed in a dialog box. Copy and securely save these details; you use them to populate storage-related Helm chart override values for the applicable service (see Configure S3-compatible storage).

Important: You cannot recover the Secret once the dialog box is closed.
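
This page describes only the Cloud Console procedure. If you prefer the command line, the same bucket settings and HMAC key might be created with the gcloud CLI, roughly as sketched below. This is an assumption rather than a documented Genesys procedure: it presumes that gcloud is installed and authenticated against the right project, and the function names, the `DRY_RUN` switch, and the sample arguments are hypothetical.

```shell
# Sketch only: function names and DRY_RUN are hypothetical conveniences.

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create a bucket with the Standard storage class and public access
# prevention enforced, matching the console choices described above.
# $1 = bucket name, $2 = location (for example, a region name)
create_gsp_bucket() {
  run gcloud storage buckets create "gs://$1" \
    --location="$2" \
    --default-storage-class=STANDARD \
    --public-access-prevention
}

# Create an HMAC key (access key and secret) for a GCP service account.
# $1 = service account email
create_hmac_key() {
  run gcloud storage hmac create "$1"
}
```

Setting `DRY_RUN=1` before calling either function prints the command so you can review it first. As in the console, the HMAC secret is shown only when the key is created, so save it securely at that point.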

Network requirements

There are no special network requirements. However, network bandwidth must be sufficient to handle the volume of data transferred into and out of Kafka.

Browser requirements

Not applicable

Genesys dependencies

There are no strict dependencies between the Genesys Info Mart services, but the logic of your particular pipeline might require Genesys Info Mart services to be deployed in a particular order. Depending on the order of deployment, there might be temporary data inconsistencies until all the Genesys Info Mart services are operational. For example, GSP looks for the GCA snapshot when it starts; if GCA has not yet been deployed, GSP will encounter unknown configuration objects and resources until the snapshot becomes available.

There are other private edition services you must deploy before Genesys Info Mart. For detailed information about the recommended order of services deployment, see Order of services deployment.

GDPR support

Not applicable, provided your Kafka retention policies have not been set to more than 30 days. GSP does not store information beyond ephemeral data used during processing.
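
To relate the 30-day limit to Kafka settings, note that Kafka expresses topic retention in milliseconds (`retention.ms`). The sketch below works out the limit and checks a value against it. The function and variable names are hypothetical, and treating -1 (which Kafka uses for unlimited retention) as exceeding the limit is an assumption about how you would want to apply the rule.

```shell
# 30 days in milliseconds, the unit used by Kafka's retention.ms setting.
MAX_RETENTION_MS=$((30 * 24 * 60 * 60 * 1000))   # 2592000000

# Succeed (exit status 0) if the given retention.ms value is 30 days or less.
# A value of -1 means unlimited retention in Kafka, so it fails the check.
retention_within_limit() {
  local retention_ms="$1"
  [ "$retention_ms" -ge 0 ] && [ "$retention_ms" -le "$MAX_RETENTION_MS" ]
}
```

For example, `retention_within_limit 604800000` (7 days) succeeds, whereas `retention_within_limit -1` fails.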

Kafka configuration

Unless Kafka has been configured to auto-create topics, ensure that the Kafka topics GSP requires have been created in the Kafka configuration. The following table shows the topic names GSP expects to use. An entry in the Customizable GSP parameter column indicates that GSP supports using a customized topic name. If you use customized topic names, you must override the applicable values in the values.yaml file (see Override Helm chart values).

The topics represent various data domains. If a topic does not exist, GSP will never receive data for that domain. If the topic exists but the customizable parameter value is empty in the GSP configuration, data from that domain will be discarded.

| Topic name | Customizable GSP parameter | Description |
|---|---|---|
| GSP consumes the following topics: | | |
| voice-callthread | | Name of the input topic with voice interactions |
| voice-agentstate | | Name of the input topic with voice agent states |
| voice-outbound | | Name of the input topic with outbound (CX Contact) activity |
| digital-itx | digitalItx | Name of the input topic with digital interactions |
| digital-agentstate | digitalAgentStates | Name of the input topic with digital agent states |
| gca-cfg | cfg | Name of the input topic with configuration data |
| GSP produces the following topics: | | |
| gsp-ixn | interactions | Name of the output topic for interactions |
| gsp-sm | agentStates | Name of the output topic for agent states |
| gsp-outbound | outbound | Name of the output topic for outbound (CX Contact) activity |
| gsp-custom | custom | Name of the output topic for custom reporting |
| gsp-cfg | cfg | Name of the output topic for configuration reporting |
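
Because GSP never receives data for a domain whose topic is missing, it can be worth checking the topic list before you deploy. The sketch below compares a list of existing topics against the default names in the table. It assumes you use the default topic names, and `GSP_TOPICS` and `missing_topics` are hypothetical names introduced for illustration.

```shell
# Default topic names from the table above (adjust if you customize names).
GSP_TOPICS="voice-callthread voice-agentstate voice-outbound digital-itx digital-agentstate gca-cfg gsp-ixn gsp-sm gsp-outbound gsp-custom gsp-cfg"

# Read existing topic names on stdin (one per line) and print each
# expected GSP topic that is not in that list.
missing_topics() {
  local existing topic
  existing="$(cat)"
  for topic in $GSP_TOPICS; do
    # -F: fixed string, -x: match the whole line, -q: no output
    printf '%s\n' "$existing" | grep -Fxq -- "$topic" || echo "$topic"
  done
}
```

One way to feed the function is to pipe in the output of the Kafka command-line tools, for example `kafka-topics.sh --bootstrap-server <broker:port> --list | missing_topics`. The `--bootstrap-server` form of that command is available only in later Kafka 2.x releases, so check the tooling shipped with your Kafka version. No output means all the expected topics exist.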