Difference between revisions of "PE-GPR/9.0.0/Help/ModelAccuracy"


Revision as of 17:24, September 23, 2020

View how well your model performs over time, how accurate it was when trained, and its feature coverage.

The Model Accuracy tab

The Model Accuracy tab provides a view into how well your model performs over time.

This tab is on the Predictors window.

Prerequisites: To use this tab, you must fulfill the following conditions:

- Your account must include an Agent Profile dataset, an interaction dataset with the Interaction ID field set, and one or more predictors, and it must have scoring data available.

- You must configure Data Loader to extract, from the Genesys Info Mart database, the data needed to derive outcome results for the metric in your predictor, and to append this data to the interaction dataset used to create the selected predictor.

Configure the view

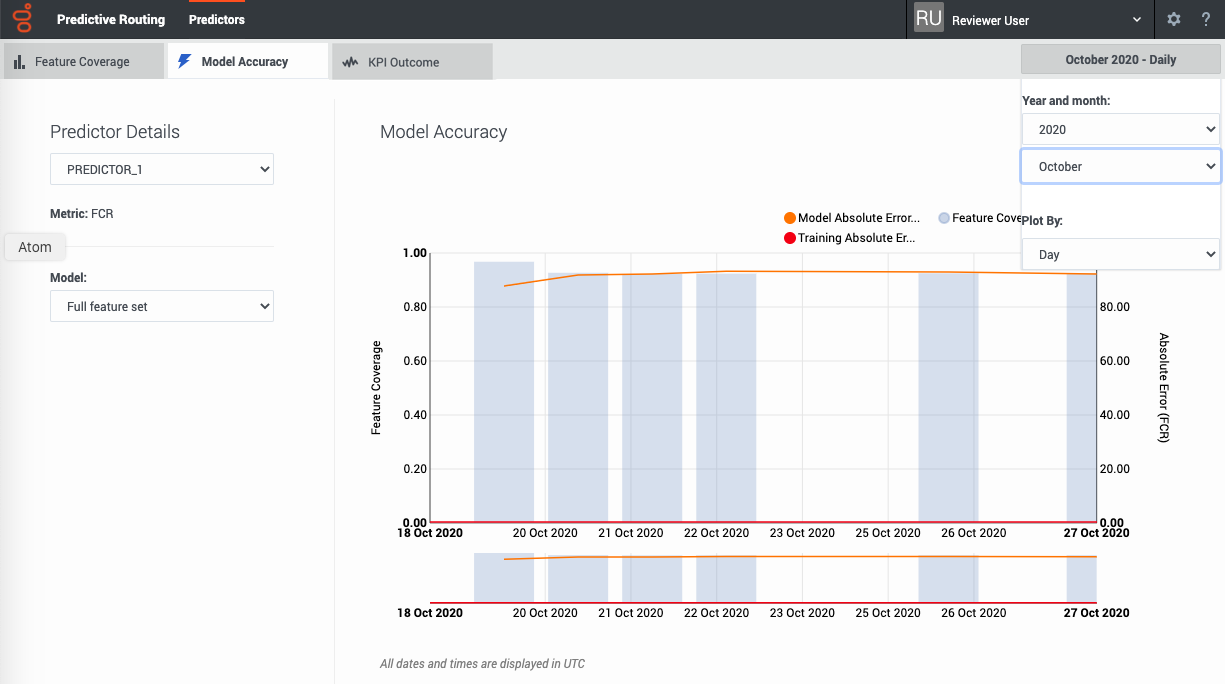

The Model Accuracy tab enables you to select the following information:

- The name and ID of the predictor. Use the selector to specify which predictor to view.

- The name and ID of the model. Use the selector to specify which model to view.

- The displayed date range. Use the year and month selectors to specify the month to view.

- The granularity of the plotted data. Use the Plot By selector to view data by Day, Hour, or 15-Minute interval.
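The Plot By setting controls how interactions are grouped before an error value is plotted. The sketch below illustrates that idea by truncating timestamps to the start of a day, hour, or 15-minute interval and averaging absolute errors per bucket. It is a hypothetical illustration, not GPR's implementation: the `(timestamp, predicted, actual)` record shape and the `"day"`, `"hour"`, and `"15min"` granularity names are assumptions made for this example.

```python
from collections import defaultdict
from datetime import datetime


def bucket_start(ts: datetime, granularity: str) -> datetime:
    """Truncate a timestamp to the start of its day, hour, or 15-minute interval.

    The granularity names are hypothetical stand-ins for the Plot By options.
    """
    if granularity == "day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    if granularity == "hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if granularity == "15min":
        return ts.replace(minute=ts.minute - ts.minute % 15, second=0, microsecond=0)
    raise ValueError(f"unknown granularity: {granularity}")


def mae_by_interval(records, granularity):
    """Average the absolute prediction error within each time bucket.

    records: iterable of (timestamp, predicted, actual) tuples (assumed shape).
    Returns a dict mapping bucket start time to the mean absolute error.
    """
    error_sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, predicted, actual in records:
        key = bucket_start(ts, granularity)
        error_sums[key] += abs(predicted - actual)
        counts[key] += 1
    return {key: error_sums[key] / counts[key] for key in sorted(error_sums)}


# Illustrative records only; these are not real GPR data.
example_records = [
    (datetime(2020, 9, 1, 9, 5), 4.0, 3.0),
    (datetime(2020, 9, 1, 9, 20), 3.5, 4.5),
    (datetime(2020, 9, 1, 10, 10), 4.5, 4.0),
]
print(mae_by_interval(example_records, "hour"))
```

Switching from `"hour"` to `"15min"` splits the 9:00 bucket into two, which is the same trade-off the Plot By selector offers: finer intervals show more detail but average over fewer interactions each.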

Note: This page does not display real-time updates. GPR refreshes the data displayed once per day, at 3:00 am UTC.

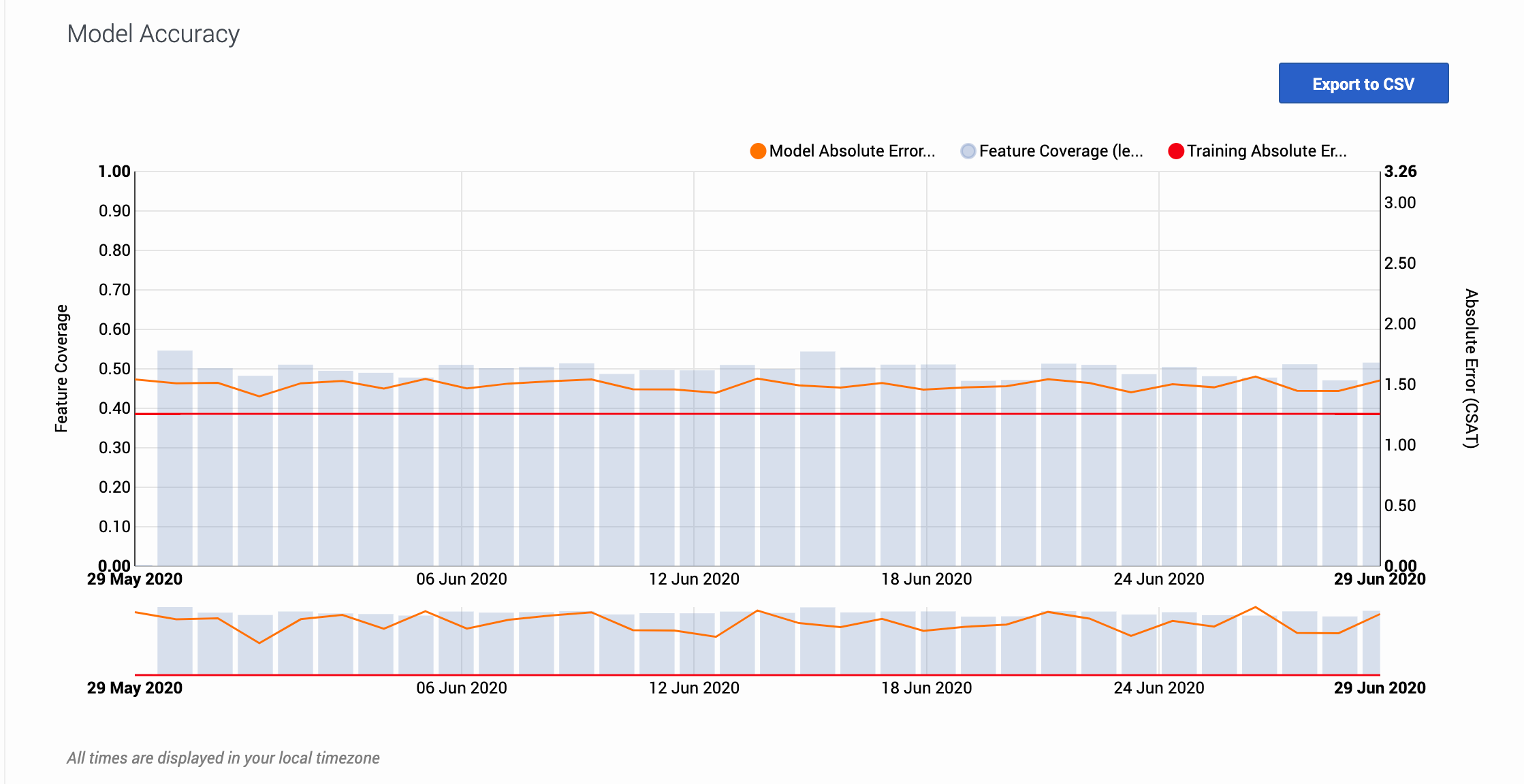

How to read the graph

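The Model Accuracy graph shows the mean absolute error value, which indicates how far the results the model predicts differ from the actual outcomes over time. This value, and how to interpret it, varies depending on the type of key performance indicator (KPI) your model optimizes.

The graph also includes a horizontal line marking the accuracy level when the model was trained, enabling you to compare the training result with real-world performance. The gray bars show the feature coverage level, because feature coverage issues are often the cause of declines in model accuracy.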
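Mean absolute error is conventionally defined as the average of the absolute differences between predicted and actual values. The formula below is that standard definition, offered as a reading aid; this page does not document how GPR groups interactions or weights errors within each plotted interval, so treat it as an assumption rather than GPR's exact computation.

```latex
\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| \hat{y}_i - y_i \right|
```

Here $\hat{y}_i$ is the KPI value the model predicted for interaction $i$, $y_i$ is the actual outcome, and $n$ is the number of scored interactions in the interval. Because MAE is expressed in the KPI's own units, lower values mean more accurate predictions, and an MAE of 1.26 for a CSAT model means predictions missed by 1.26 CSAT points on average.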

Note: If feature coverage appears to be the cause of a model's declining accuracy, use the Feature Coverage tab to troubleshoot. To view the five features that have the strongest impact on the KPI value, see the Top Features horizontal bar graph on the Simulator tab.

Example

When you view the graph, keep in mind that the vertical axis on the right shows the mean absolute error (MAE), not the actual KPI value. The example image on this page shows the graph for a model designed to optimize CSAT. The horizontal red training line shows that when you created the model, it had an MAE of 1.26; that is, on average, the predicted CSAT differed from the actual CSAT by 1.26 points. Since then, as you have used the model, this difference has ranged from 1.40 to 1.56. Because a higher MAE means larger prediction errors, the model is currently somewhat less accurate than it was at training time.
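The comparison in this example, observed error against the training line, can be expressed as a small calculation. The sketch below is a hypothetical helper, not part of GPR: it takes the training MAE and a list of per-interval MAE values, reports the observed range and the largest increase over training, and counts intervals that exceed the training value by more than a tolerance. The `tolerance` of 0.1 points is an arbitrary assumption. The example only states the endpoints of the observed range, so only those two values are used.

```python
def summarize_drift(training_mae, interval_maes, tolerance=0.1):
    """Compare per-interval MAE values against the MAE recorded at training time.

    tolerance is a hypothetical threshold, in KPI points, for flagging an interval.
    """
    low, high = min(interval_maes), max(interval_maes)
    # Intervals whose error exceeds the training error by more than the tolerance.
    flagged = [mae for mae in interval_maes if mae - training_mae > tolerance]
    return {
        "range": (low, high),
        "worst_increase": high - training_mae,
        "relative_increase": (high - training_mae) / training_mae,
        "flagged_count": len(flagged),
    }


# Values from the CSAT example: training MAE 1.26, observed range 1.40 to 1.56.
summary = summarize_drift(1.26, [1.40, 1.56])
print(summary)
```

With these inputs the worst interval is about 0.30 CSAT points (roughly 24%) less accurate than at training time. A sustained gap like this is the kind of drift the gray feature coverage bars can help explain.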

To export the model accuracy data as a .csv file, click the Export to CSV button.
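Once exported, the data can be analyzed outside GPR. The sketch below uses Python's standard `csv` module to load the file's contents and list the intervals whose error exceeds a threshold such as the training MAE. This page does not describe the exported file's layout, so the column names `Interval` and `MAE` are hypothetical placeholders; check the headers in your own export and adjust the constants before use.

```python
import csv
import io

# Hypothetical column names: the real headers in the exported file may differ.
INTERVAL_COLUMN = "Interval"
MAE_COLUMN = "MAE"


def load_interval_maes(csv_text):
    """Parse exported CSV text into a list of (interval, mae) pairs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row[INTERVAL_COLUMN], float(row[MAE_COLUMN])) for row in reader]


def intervals_above(rows, threshold):
    """Return the intervals whose MAE is greater than threshold (e.g. the training MAE)."""
    return [interval for interval, mae in rows if mae > threshold]


# Illustrative input only; this is not real GPR output.
sample = "Interval,MAE\n2020-09-01,1.40\n2020-09-02,1.20\n2020-09-03,1.56\n"
rows = load_interval_maes(sample)
print(intervals_above(rows, 1.26))
```

To read a downloaded file instead of the inline sample, pass the text returned by `open(path, newline="").read()` to `load_interval_maes`.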