Difference between revisions of "VM/Current/VMPEGuide/Deploy"

Revision as of 18:11, November 1, 2021


Learn how to deploy Voice Microservices.

General deployment prerequisites

Before you deploy the Voice Services, you must deploy the infrastructure services. See Third-party prerequisites for the list of required infrastructure services.

To override Helm chart values for both the infrastructure services and the Voice Services, see Override Helm chart values.

Genesys recommends the following order of deployment for the Voice Microservices:

Deploy Consul

Consul is required for multiple services in the Genesys package.

In addition to any other Consul configuration, the following Consul features are required for Voice Services:

- connectInject – To deploy sidecar containers in Voice pods.
- controller – To provide service intention functionality.
- openshift – To set OpenShift-specific permissions.
- syncCatalog – To sync Kubernetes services to Consul. Set toConsul: true, toK8S: false, and addK8SNamespaceSuffix: false so that services are synced in one direction only, from Kubernetes to Consul, without a Kubernetes namespace suffix on the service names.
- AccessControlList – To enable ACLs, set manageSystemACLs: true.
- storageclass – To set the storage class to a predefined storage class.
- TLS – To enable TLS, set enabled: true and reference the Kubernetes secrets that hold the CA certificate and key (consul-ca-cert and consul-ca-key in the following configuration).
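The feature list above can be turned into a rough preflight check for a Consul values file before you install Consul. The following is a minimal sketch, not a Genesys-provided tool: the function names `check_consul_values` and `section_enabled` are hypothetical, and the check is line-based (it greps for key/value pairs and accepts `enabled: true` anywhere under a section) rather than a real YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical preflight check for a Consul Helm values file such as config.yaml.
# Line-based heuristic only: it does not parse YAML or validate nesting.

# Succeeds if a line indented under the "$2:" header sets "enabled: true".
section_enabled() {
  awk -v sec="$2" '
    $0 ~ "^[ \t]*" sec ":[ \t]*$" {          # section header, e.g. "connectInject:"
      match($0, /^[ \t]*/); ind = RLENGTH; inside = 1; next
    }
    inside && NF > 0 {
      match($0, /^[ \t]*/)
      if (RLENGTH <= ind) inside = 0          # dedent: the section has ended
      else if ($0 ~ /^[ \t]*enabled:[ \t]*true[ \t]*$/) found = 1
    }
    END { exit(found ? 0 : 1) }
  ' "$1"
}

# Reports every missing setting on stderr; returns non-zero if any is missing.
check_consul_values() {
  local file="$1" status=0 pattern section
  for pattern in 'manageSystemACLs:[ ]*true' 'toConsul:[ ]*true' \
                 'toK8S:[ ]*false' 'addK8SNamespaceSuffix:[ ]*false'; do
    grep -Eq "$pattern" "$file" || { echo "missing: $pattern" >&2; status=1; }
  done
  for section in connectInject controller openshift syncCatalog tls; do
    section_enabled "$file" "$section" || { echo "not enabled: $section" >&2; status=1; }
  done
  return "$status"
}
```

For example, run `check_consul_values config.yaml` before `helm install`; a non-zero exit status lists the settings to review.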

The Consul configuration file (config.yaml) contains the following values:

```yaml
# config.yaml
global:
  name: consul
  tls:
    enabled: true
    caCert:
      # The name of the Kubernetes secret.
      secretName: consul-ca-cert
      # The key of the Kubernetes secret.
      secretKey: tls.crt
    caKey:
      # The name of the Kubernetes secret.
      secretName: consul-ca-key
      # The key of the Kubernetes secret.
      secretKey: tls.key
  acls:
    manageSystemACLs: true
  openshift:
    enabled: true
connectInject:
  enabled: true
controller:
  enabled: true
syncCatalog:
  enabled: true
  toConsul: true
  toK8S: false
  addK8SNamespaceSuffix: false
```

Creation of the Consul bootstrap token

When you enable an Access Control List (ACL) in Consul, the Voice services must be able to read from and write to Consul. To provide this access, create a token for the Voice services in the Consul UI with the following permissions. You can create this token when you deploy Consul or later, as part of the Voice Services deployment.

```hcl
service_prefix "" {
  policy     = "read"
  intentions = "read"
}
service_prefix "" {
  policy     = "write"
  intentions = "write"
}
node_prefix "" {
  policy = "read"
}
node_prefix "" {
  policy = "write"
}
agent_prefix "" {
  policy = "read"
}
agent_prefix "" {
  policy = "write"
}
session_prefix "" {
  policy = "write"
}
session_prefix "" {
  policy = "read"
}
namespace_prefix "" {
  key_prefix "" {
    policy = "write"
  }
  session_prefix "" {
    policy = "write"
  }
}
key_prefix "" {
  policy = "read"
}
key_prefix "" {
  policy = "write"
}
```

To log in to the Consul UI and create a new ACL token, you use the bootstrap token. Use the following command to get the bootstrap token:

```shell
kubectl get secret consul-bootstrap-acl-token -n <consul namespace> -o go-template='{{.data.token | base64decode}}'
```

Create a policy named voice-policy with the preceding permissions, then create a new token and assign the policy to it. For example, if the new token has the value a7529f8a-1146-e398-8bd7-367894c4b37b, create a Kubernetes secret that contains it as follows:

```shell
kubectl create secret generic consul-voice-token -n voice --from-literal='consul-consul-voice-token=a7529f8a-1146-e398-8bd7-367894c4b37b'
```

Creating Intentions in the Consul UI

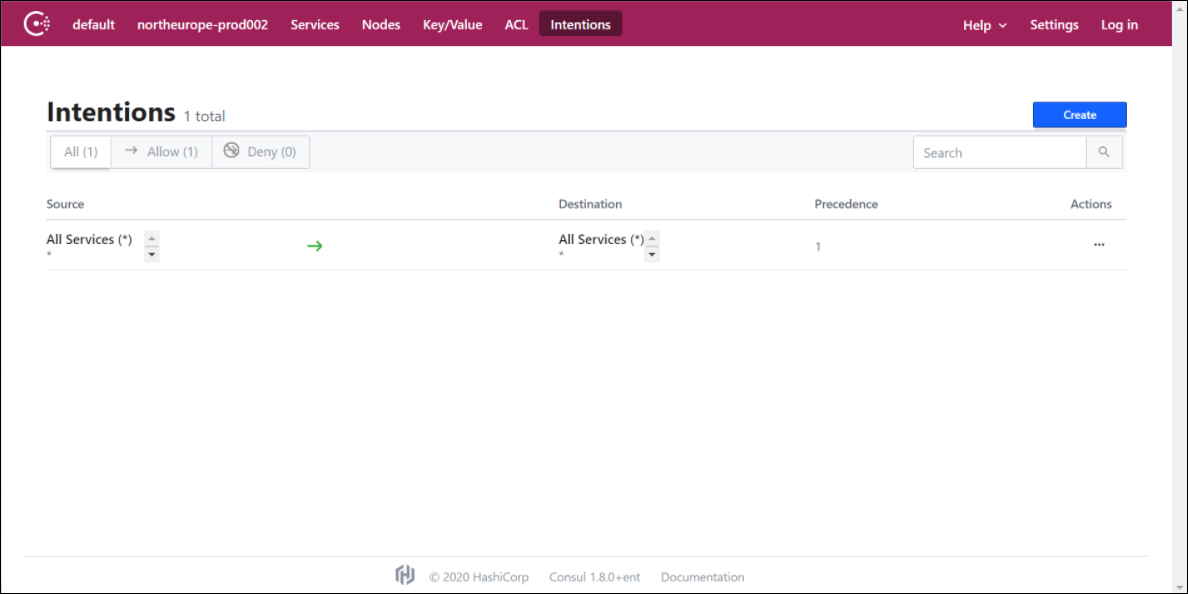

Voice services use the Consul service mesh to connect between services. Consul has provision to either allow or deny the connection between services. This is done using intentions. Log into the Intentions tab using the bootstrap token and create a new intention to allow all source services to all destination services as shown in the following screenshot.
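If you prefer to manage the allow-all intention declaratively rather than through the UI, the Consul controller enabled in the Consul configuration (controller.enabled: true) provides a ServiceIntentions custom resource. The following sketch assumes that your consul-k8s release serves the `consul.hashicorp.com/v1alpha1` API version; the function name and the resource name `allow-all` are hypothetical. It only prints the manifest, so you can review it before applying it.

```shell
#!/usr/bin/env bash
# Prints a ServiceIntentions resource that allows every source service to reach
# every destination service (the same rule as the allow-all intention in the UI).
# Assumption: the ServiceIntentions CRD is installed by the Consul controller.
allow_all_intentions_manifest() {
  cat <<'EOF'
apiVersion: consul.hashicorp.com/v1alpha1
kind: ServiceIntentions
metadata:
  name: allow-all
spec:
  destination:
    name: '*'
  sources:
    - name: '*'
      action: allow
EOF
}
```

For example, pipe the output to `kubectl apply -f -`. Create either this resource or the UI intention, not both, so that the rule is defined in one place.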

Create the Voice namespace

Before deploying Voice Services and their dependencies, create a namespace using the following command:

```shell
kubectl create ns voice
```

In all Voice Services and the configuration files of their dependencies, the namespace is voice. If you want a custom namespace, create it with the preceding command and update the namespace in the configuration files accordingly.
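When you use a custom namespace, some of the file updates can be scripted. The following is a hypothetical helper, not part of the Genesys package: it assumes that the files you need to change are YAML files containing lines of the exact form `namespace: voice`, rewrites only those lines, and keeps a `.bak` copy of each file. Other references, such as `-n voice` in helm commands, still need a manual review.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace "namespace: voice" (any indentation) with a custom
# namespace in every *.yaml / *.yml file under a directory, keeping .bak backups.
set_voice_namespace() {
  local ns="$1" dir="$2" file
  # Accept only a DNS-1123 label, which also makes the value safe to embed in sed.
  [[ "$ns" =~ ^[a-z0-9]([-a-z0-9]*[a-z0-9])?$ ]] || { echo "invalid namespace: $ns" >&2; return 1; }
  while IFS= read -r -d '' file; do
    sed -i.bak -E "s/^([[:space:]]*namespace:[[:space:]]*)voice[[:space:]]*\$/\1${ns}/" "$file"
  done < <(find "$dir" -type f \( -name '*.yaml' -o -name '*.yml' \) -print0)
}
```

For example, `set_voice_namespace voice-prod ./voice_helm_values` updates the values files in place; compare each file with its `.bak` copy before deploying.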

Deploy Voice Services

Register the Redis service in Consul

After you create the Redis cluster, register its IP address with Consul. To do this, create Kubernetes Services and Endpoints objects that point to the Redis cluster. Consul then automatically syncs those Kubernetes services and registers them in Consul (through the syncCatalog feature).
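Because the registration repeats the same two objects for each Redis service name, it can be generated in a loop. The following is a minimal sketch, assuming the nine Redis service names and the manifest example given in this section, and TCP as the transport; the function name `redis_consul_manifests` and the default port 6379 (the value used in the manifest example) are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical generator: one headless Service plus one Endpoints object per
# Redis service name, annotated so that Consul catalog sync registers it.
REDIS_SERVICE_NAMES=(
  redis-agent-state redis-call-state redis-config-state redis-ors-state
  redis-ors-stream redis-registrar-state redis-rq-state redis-sip-state
  redis-tenant-stream
)

# Usage: redis_consul_manifests <namespace> <redis primary IP> [port]
redis_consul_manifests() {
  local ns="$1" ip="$2" port="${3:-6379}" name
  for name in "${REDIS_SERVICE_NAMES[@]}"; do
    cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}
  namespace: ${ns}
  annotations:
    "consul.hashicorp.com/service-sync": "true"
spec:
  clusterIP: None
---
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
  namespace: ${ns}
subsets:
  - addresses:
      - ip: ${ip}
    ports:
      - port: ${port}
        name: redisport
        protocol: TCP
EOF
  done
}

# Example (requires cluster access):
#   ip=$(kubectl get pods infra-redis-redis-cluster-0 -n infra -o jsonpath='{.status.podIP}')
#   redis_consul_manifests voice "$ip" 6379 | kubectl apply -f -
```

Generating all objects from one list keeps the Service and Endpoints names identical, which Kubernetes requires for the Endpoints to belong to the Service.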

Kubernetes Service and endpoint creation

Register each of the following Redis service names. The Voice services use these service names to connect to the Redis cluster.

```text
redis-agent-state
redis-call-state
redis-config-state
redis-ors-state
redis-ors-stream
redis-registrar-state
redis-rq-state
redis-sip-state
redis-tenant-stream
```

Manifest file

For all the preceding Redis service names, create a separate service and endpoint using the following example:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: <redis-service-name>   # for example, redis-agent-state
  namespace: <namespace>       # for example, voice
  annotations:
    "consul.hashicorp.com/service-sync": "true"
spec:
  clusterIP: None
---
apiVersion: v1
kind: Endpoints
metadata:
  name: <redis-service-name>   # must match the Service name, for example, redis-agent-state
  namespace: <namespace>       # for example, voice
subsets:
  - addresses:
      - ip: <redis MASTER IP>        # for example, 51.143.122.147
    ports:
      - port: <redis port>           # for example, 6379
        name: redisport
        protocol: <redis transport>  # for example, TCP
```

To get the Redis primary IP address for the Endpoints object, use the following command:

```shell
# Get the IP address of the primary (master) Redis pod
kubectl get pods infra-redis-redis-cluster-0 -n infra -o jsonpath='{.status.podIP}'
```

Deploy the Voice Services

Voice Services require a Persistent Volume Claim (PVC); the Voice SIP Cluster Service uses a persistent volume to store traditional SIP Server logs. Before deploying Voice Services, create the PVC.

Storage class and Claim name

The created persistent volume must be configured in the sip_node_override_values.yaml file as shown below:

```yaml
# PVCs to be created for logs
volumes:
  pvcLog:
    create: true
    claim: sip-log-pvc
    storageClass: voice
    volumeName: <pv name>   # for example, sip-log-pv
  pvcJsonLog:
    create: true
    claim: sip-json-log-pvc
    storageClass: voice
    volumeName: <pv name>   # a different PV from pvcLog; a PV binds to only one PVC
```

Configure the DNS Server for voice-sip

The Voice SIP Cluster Service requires the DNS server to be configured in its sip_node_override_values.yaml file. Follow the steps in the [Kubernetes documentation](https://kubernetes.io/docs/tasks/administer-cluster/dns-debugging-resolution/) to install a dnsutils pod, and then use the dnsutils pod to find the DNS server address used in the environment.
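The Kubernetes DNS debugging guide inspects /etc/resolv.conf inside the dnsutils pod; assuming the pod uses the default ClusterFirst DNS policy, its first nameserver entry is the cluster DNS server address. The following minimal sketch extracts that address and prints the matching override snippet; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Reads resolv.conf content on stdin and prints the first nameserver address.
# Returns non-zero if no nameserver line is present.
first_nameserver() {
  awk '$1 == "nameserver" { print $2; found = 1; exit } END { exit(found ? 0 : 1) }'
}

# Prints the dnsServer override for sip_node_override_values.yaml.
sip_dns_override() {
  printf 'context:\n  envs:\n    dnsServer: "%s"\n' "$1"
}

# Example (requires the dnsutils pod from the Kubernetes guide):
#   dns_ip=$(kubectl exec dnsutils -- cat /etc/resolv.conf | first_nameserver)
#   sip_dns_override "$dns_ip"
```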

The default value in the SIP Helm chart is 10.0.0.10. If the dnsserver address is different, update it in the sip_node_override_values.yaml file as shown below:

```yaml
# update dns server ipaddress
context:
  envs:
    dnsServer: "10.202.0.10"
```

Voice Service Helm chart deployment

Deploy the Voice Services using the provided Helm charts.

```shell
helm upgrade --install --force --wait --timeout 300s -n voice -f ./voice_helm_values/agent_override_values.yaml voice-agent <helm-repo>/voice-agent-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 300s -n voice -f ./voice_helm_values/callthread_override_values.yaml voice-callthread <helm-repo>/voice-callthread-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 200s -n voice -f ./voice_helm_values/config_override_values.yaml voice-config <helm-repo>/voice-config-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 300s -n voice -f ./voice_helm_values/dialplan_override_values.yaml voice-dialplan <helm-repo>/voice-dialplan-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 200s -n voice -f ./voice_helm_values/ors_node_override_values.yaml voice-ors <helm-repo>/voice-ors-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 300s -n voice -f ./voice_helm_values/registrar_override_values.yaml voice-registrar <helm-repo>/voice-registrar-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 200s -n voice -f ./voice_helm_values/rq_node_override_values.yaml voice-rq <helm-repo>/voice-rq-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 200s -n voice -f ./voice_helm_values/sip_node_override_values.yaml voice-sip <helm-repo>/voice-sip-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 300s -n voice -f ./voice_helm_values/sipfe_override_values.yaml voice-sipfe <helm-repo>/voice-sipfe-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
helm upgrade --install --force --wait --timeout 300s -n voice -f ./voice_helm_values/sipproxy_override_values.yaml voice-sipproxy <helm-repo>/voice-sipproxy-<helmchart-version>.tgz --set version=<container-version> --username "$JFROG_USER" --password "$JFROG_PASSWORD"
```

The following table lists the minimum recommended Helm chart versions:

| Service name | Helm chart version |
|---|---|
| voice-config | voice-config-9.0.11.tgz |
| voice-dialplan | voice-dialplan-9.0.08.tgz |
| voice-registrar | voice-registrar-9.0.14.tgz |
| voice-agent | voice-agent-9.0.10.tgz |
| voice-callthread | voice-callthread-9.0.12.tgz |
| voice-sip | voice-sip-9.0.22.tgz |
| voice-sipfe | voice-sipfe-9.0.06.tgz |
| voice-sipproxy | voice-sipproxy-9.0.09.tgz |
| voice-rq | voice-rq-9.0.08.tgz |
| voice-ors | voice-ors-9.0.08.tgz |
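The ten helm commands differ only in the release name, values file, timeout, and chart version, so they can be driven from a single table. The following is a minimal sketch under stated assumptions: HELM_REPO, CONTAINER_VERSION, JFROG_USER, and JFROG_PASSWORD are exported by you, VOICE_NAMESPACE and DRY_RUN are hypothetical variables introduced here, and the chart versions are the minimum recommended versions from the preceding table (replace them with the versions you actually deploy).

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the helm commands in this section.
# DRY_RUN=1 prints each command (including the password) instead of running it.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "$*"; else "$@"; fi
}

# Usage: deploy_voice_chart <name> <values-file prefix> <timeout> <chart version>
deploy_voice_chart() {
  local name="$1" values="$2" timeout="$3" chart_version="$4"
  run helm upgrade --install --force --wait --timeout "$timeout" \
    -n "${VOICE_NAMESPACE:-voice}" \
    -f "./voice_helm_values/${values}_override_values.yaml" \
    "voice-${name}" "${HELM_REPO}/voice-${name}-${chart_version}.tgz" \
    --set "version=${CONTAINER_VERSION}" \
    --username "$JFROG_USER" --password "$JFROG_PASSWORD"
}

deploy_all_voice_charts() {
  local name values timeout version
  # Columns: release suffix, values-file prefix, helm timeout, chart version.
  # stdin of helm is redirected so it cannot consume the table below.
  while read -r name values timeout version; do
    deploy_voice_chart "$name" "$values" "$timeout" "$version" </dev/null || return 1
  done <<'EOF'
agent      agent       300s 9.0.10
callthread callthread  300s 9.0.12
config     config      200s 9.0.11
dialplan   dialplan    300s 9.0.08
ors        ors_node    200s 9.0.08
registrar  registrar   300s 9.0.14
rq         rq_node     200s 9.0.08
sip        sip_node    200s 9.0.22
sipfe      sipfe       300s 9.0.06
sipproxy   sipproxy    300s 9.0.09
EOF
}
```

Run it once with DRY_RUN=1 to compare the generated commands against the list above; the loop stops at the first failed release, which keeps later services from starting against a missing dependency.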

Deploy in OpenShift

Add a rule for Consul DNS forwarding

In the OpenShift Container Platform (OCP), as part of the general deployment prerequisites, you must add a rule for Consul DNS forwarding. OpenShift sends DNS requests to the DNS server in the openshift-dns namespace. To forward resolution of Consul FQDNs to the Consul DNS server, add a forwarding rule to the default DNS operator (dns.operator/default). First, get the cluster IP address of the consul-dns service:

```shell
# Internal (cluster) IP of the consul-dns service
kubectl get svc consul-dns -n <consul namespace> -o jsonpath='{.spec.clusterIP}'
```

Then edit the default DNS operator:

```shell
oc edit dns.operator/default
```

Add the following to the spec, replacing the placeholder with the IP address returned by the first command:

```yaml
spec:
  servers:
    - name: consul-dns
      zones:
        - consul
      forwardPlugin:
        upstreams:
          - <Internal IP of consul-dns service>
```

Persistent volumes

The general Voice Services deployment is described in Deploy Voice Services. There are some differences when creating PVCs in the OpenShift Container Platform (OCP). This section describes the configuration for OCP.

Persistent Volume in OCS Storage Type

A Storage Class might already exist in your OCP cluster. This Storage Class is used to create the PVCs and must be set in the sip_node_override_values.yaml file.

For an OpenShift cluster with OpenShift Container Storage (OCS), configure the Storage Class to be used for creating the persistent volume. In the case of OCS, the PV is created automatically when the PVC is claimed. For such clusters, the volumeName parameter in the sip_node_override_values.yaml file must be empty.

```yaml
# PVCs section
## This section defines the PVCs to create
volumes:
  pvcLog:
    create: true          # Whether the chart creates this PVC
    claim: sip-log-pvc    # Name of the PVC
    volumeName:           # PV to bind this PVC to. In OpenShift, required only for NFS mounting, not for OCS.
    claimSize: 10Gi       # Storage size requested by the PVC
    storageClass: voice   # Storage class requested by the PVC
    mountPath: /opt/genesys/logs/volume   # Volume mount path for the PV

  pvcJsonLog:
    create: true              # Whether the chart creates this PVC
    claim: sip-json-log-pvc   # Name of the PVC
    volumeName:               # PV to bind this PVC to. In OpenShift, required only for NFS mounting, not for OCS.
    claimSize: 10Gi           # Storage size requested by the PVC
    storageClass: voice       # Storage class requested by the PVC
    mountPath: /opt/genesys/logs/sip_node/JSON   # Volume mount path for the PV
```

Configure the DNS Server for voice-sip

The Voice SIP Cluster Service requires the DNS server to be configured in its sip_node_override_values.yaml file.

In the OCP environment, you can find the cluster IP address of the Kubernetes DNS service using the following command:

```shell
oc get dns.operator/default -o jsonpath='{.status.clusterIP}'
```

The default value in the SIP Helm chart is "10.0.0.10". If the DNS server address is different, update it in the sip_node_override_values.yaml file as shown below:

```yaml
# update dns server ipaddress
context:
  envs:
    dnsServer: "10.202.0.10"
```

Deploy the Tenant service

The Tenant Service is included with the Voice Microservices, but has its own deployment procedure. To deploy the Tenant Service, see Deploy the Tenant Service.